Abstract

Many national, regional and local governments, as well as other

organisations inside and outside the public sector, collect numeric

data and aggregate this data into statistics. There is a need to

publish these statistics in a standardised, machine-readable way on

the web, so that they can be freely integrated and reused in consuming

applications.

In this document, the W3C Government Linked Data

Working Group presents use cases and requirements supporting a

recommendation of the RDF Data Cube Vocabulary [QB-2013]. The group obtained use cases from

existing deployments of and experiences with an earlier version of the

data cube vocabulary [QB-2010].

The group also describes a set of requirements derived from the use

cases and to be considered in the recommendation.

Status of This Document

This section describes the status of this document at the

time of its publication. Other documents may supersede this document.

A list of current W3C

publications and the latest revision of this technical report can be

found in the W3C technical reports index

at http://www.w3.org/TR/.

This document is an editorial update to an Editor's Draft of the "Use

Cases and Requirements for the Data Cube Vocabulary" developed by the

W3C Government Linked Data

Working Group.

This document was published by the Government Linked Data Working

Group as a Working Group Note. If you wish to make comments regarding

this document, please send them to public-gld-comments@w3.org

(subscribe,

archives).

All comments are welcome.

Publication as a Working Group Note does not imply endorsement by the

W3C Membership. This is

a draft document and may be updated, replaced or obsoleted by other

documents at any time. It is inappropriate to cite this document as

other than work in progress.

This document was produced by a group operating under the 5
February 2004 W3C Patent Policy. W3C maintains a public list of any
patent disclosures made in connection with the deliverables of the
group; that page also includes instructions for disclosing a patent.
An individual who has actual knowledge of a patent which the
individual believes contains Essential Claim(s) must disclose the
information in accordance with section 6 of the W3C Patent Policy.


1. Introduction

The aim of this document is to present concrete use cases and

requirements for a vocabulary to publish statistics as Linked Data. An

earlier version of the data cube vocabulary [QB-2010] has existed for

some time and has proven applicable in several deployments. The

W3C Government Linked Data

Working Group intends to transform the data cube vocabulary into a

W3C recommendation of the RDF Data Cube Vocabulary [QB-2013]. This document

describes use cases and requirements derived from existing data cube

deployments in order to document and illustrate design decisions that

have driven the work.

The rest of this document is structured as follows. We will

first give a short introduction of the specificities of modelling

statistics. Then, we will describe use cases that have been derived

from existing deployments or feedback to the earlier data cube

vocabulary version. In particular, we describe possible benefits and

challenges of use cases. Afterwards, we will describe concrete

requirements that were derived from those use cases and that have been

taken into account for the specification.

We use the name data cube vocabulary throughout the document

when referring to the vocabulary.

1.1 Describing statistics

In the following, we describe the challenge of an RDF vocabulary

for publishing statistics as Linked Data.

Describing statistics - collected and aggregated numeric data -

is challenging for the following reasons:

- Representing statistics requires more complex modeling as

discussed by Martin Fowler [FOWLER97]:

Recording a statistic simply as an attribute to an object (e.g., the

fact that a person weighs 185 pounds) fails to represent

important concepts such as quantity, measurement, and unit. Instead,

a statistic is modeled as a distinguishable object, an observation.

- The object describes an observation of a value, e.g., a

numeric value (e.g., 185) in case of a measurement or a categorical

value (e.g., "blood group A") in case of a categorical observation.

- To allow correct interpretation of the value, the object can

be further described by "dimensions", e.g., the specific phenomenon

"weight" observed and the unit "pounds". Given background

information, e.g., arithmetical and comparative operations, humans

and machines can appropriately visualize such observations or

convert between different quantities.

- Also, an observation separates a value from the actual event

at which it was collected; for instance, one can describe the

"Person" that collected the observation and the "Time" the

observation was collected.
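The observation-centred model described in the list above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class name `Observation`, its field names, the conversion table and the sample observer and date are introduced here and are not part of the vocabulary.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional, Union

# Hypothetical conversion factors to kilograms, for illustration only.
TO_KILOGRAMS = {"pounds": 0.45359237, "kilograms": 1.0}


@dataclass(frozen=True)
class Observation:
    """A statistic modelled as its own object rather than as an attribute."""
    value: Union[float, str]                 # numeric or categorical value
    phenomenon: str                          # what was observed, e.g. "weight"
    unit: Optional[str] = None               # unit of measurement, if any
    observer: Optional[str] = None           # who collected the observation
    observed_at: Optional[datetime] = None   # when it was collected

    def in_kilograms(self) -> float:
        """Convert a weight measurement to kilograms using the table above."""
        if not isinstance(self.value, (int, float)) or self.unit not in TO_KILOGRAMS:
            raise ValueError("not a convertible weight measurement")
        return self.value * TO_KILOGRAMS[self.unit]


# A measurement and a categorical observation (observer and date are invented).
weight = Observation(185, "weight", unit="pounds",
                     observer="Person A", observed_at=datetime(2013, 1, 1))
blood_group = Observation("blood group A", "blood group")
print(round(weight.in_kilograms(), 1))
```

Because the unit travels with the value, a consumer can convert or compare observations without guessing how the number was meant to be read.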

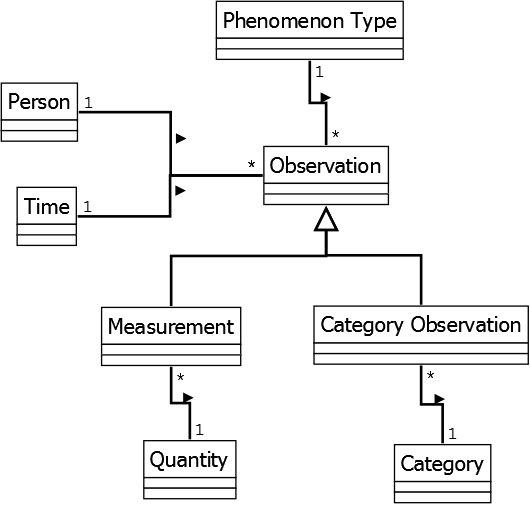

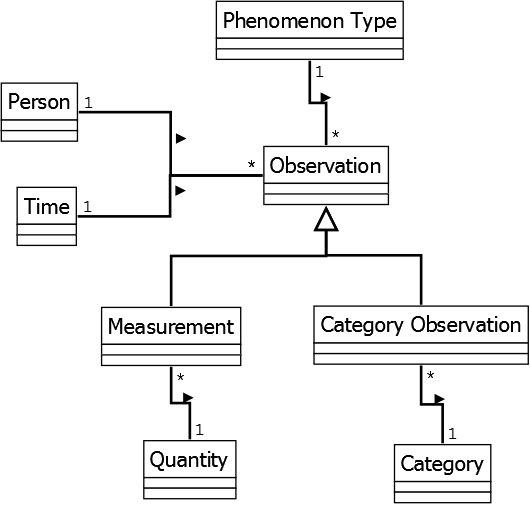

The following figure illustrates this specificitiy of modelling in a

class diagram:

Figure: Illustration of specificities in

modelling of a statistic

The Statistical Data and Metadata eXchange [SDMX] - the ISO standard for exchanging and

sharing of statistical data and metadata among organisations - uses a

"multidimensional model" that caters for the specificity of modelling

statistics. It allows statistics to be described as observations.

Observations exhibit values (Measures) that depend on dimensions

(Members of Dimensions).

Since the SDMX standard has proven applicable in many contexts,

the vocabulary adopts the multidimensional model that underlies SDMX

and will be compatible with SDMX.

2. Terminology

Statistics

is the study of

the collection, organisation, analysis, and interpretation of data.

Statistics comprise statistical data.

The basic structure of

statistical data

is a multidimensional table (also called a data cube) [SDMX], i.e., a set of observed values organized

along a group of dimensions, together with associated metadata. If

aggregated, we refer to statistical data as "macro-data"; if

not, we refer to it as "micro-data".

Statistical data can be collected in a

dataset

, typically published and maintained by an organisation [SDMX]. The dataset contains metadata, e.g.,

about the time of collection and publication or about the maintaining

and publishing organisation.

Source data

is data from datastores such as RDBs or spreadsheets that acts as a

source for the Linked Data publishing process.

Metadata

about statistics defines the data structure and gives contextual

information about the statistics.

A format is

machine-readable

if it is amenable to automated processing by a machine, as opposed to

presentation to a human user.

A

publisher

is a person or organisation that exposes source data as Linked Data on

the Web.

A

consumer

is a person or agent that uses Linked Data from the Web.

A

registry

collects metadata about statistical data in a registration fashion.

3. Use cases

This section presents scenarios that are enabled by the

existence of a standard vocabulary for the representation of

statistics as Linked Data.

3.1 SDMX Web Dissemination Use Case

(Use case taken from SDMX Web Dissemination

Use Case [SDMX 2.1])

Since we have adopted the multidimensional model that underlies

SDMX, we also adopt the "Web Dissemination Use Case" which is the

prime use case for SDMX since it is an increasingly popular use of SDMX

and enables organisations to build a self-updating dissemination

system.

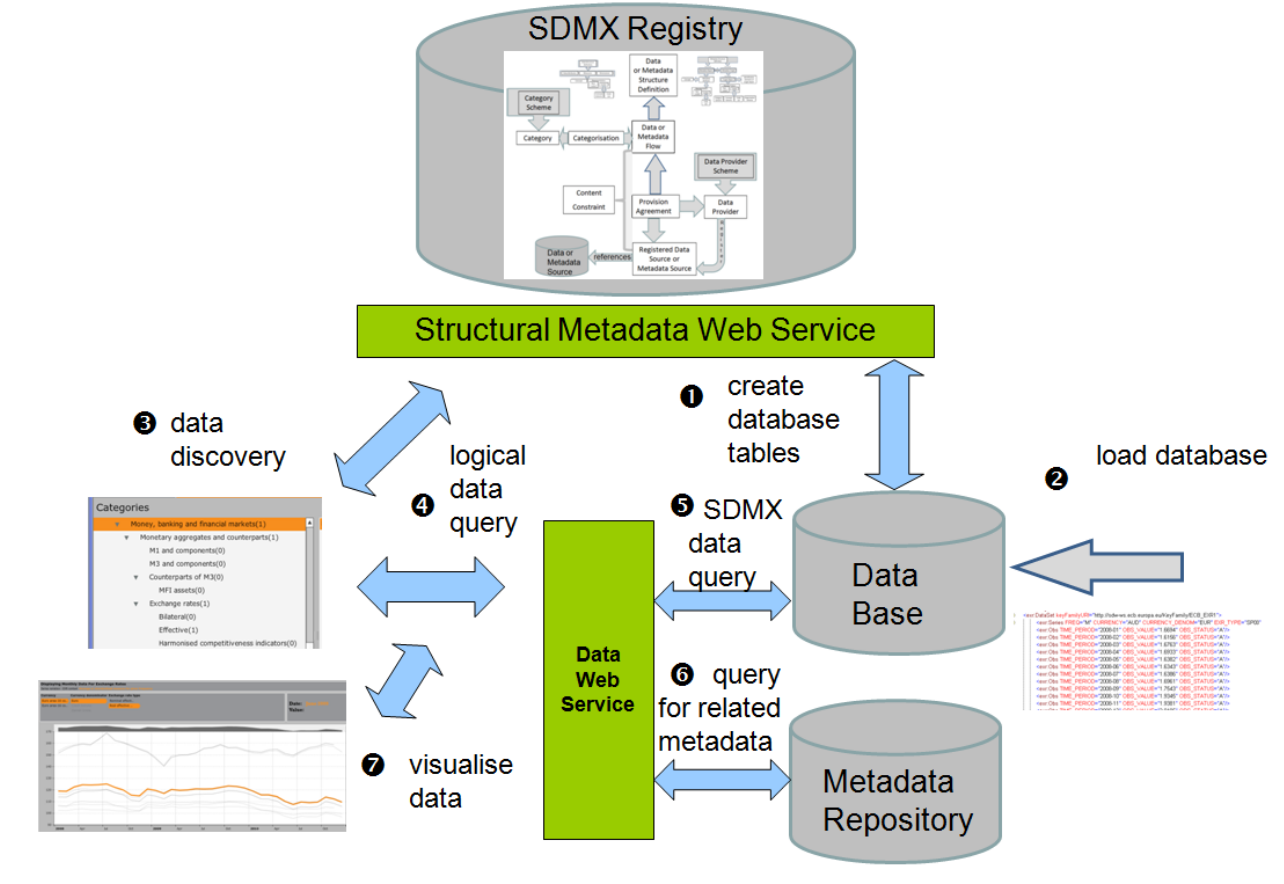

The Web Dissemination Use Case contains three actors: a

structural metadata web service (registry) that collects metadata

about statistical data in a registration fashion, a data web service

(publisher) that publishes statistical data and its metadata as

registered in the structural metadata web service, and a data

consumption application (consumer) that first discovers data from the

registry, then queries data from the corresponding publisher of

selected data, and then visualises the data.

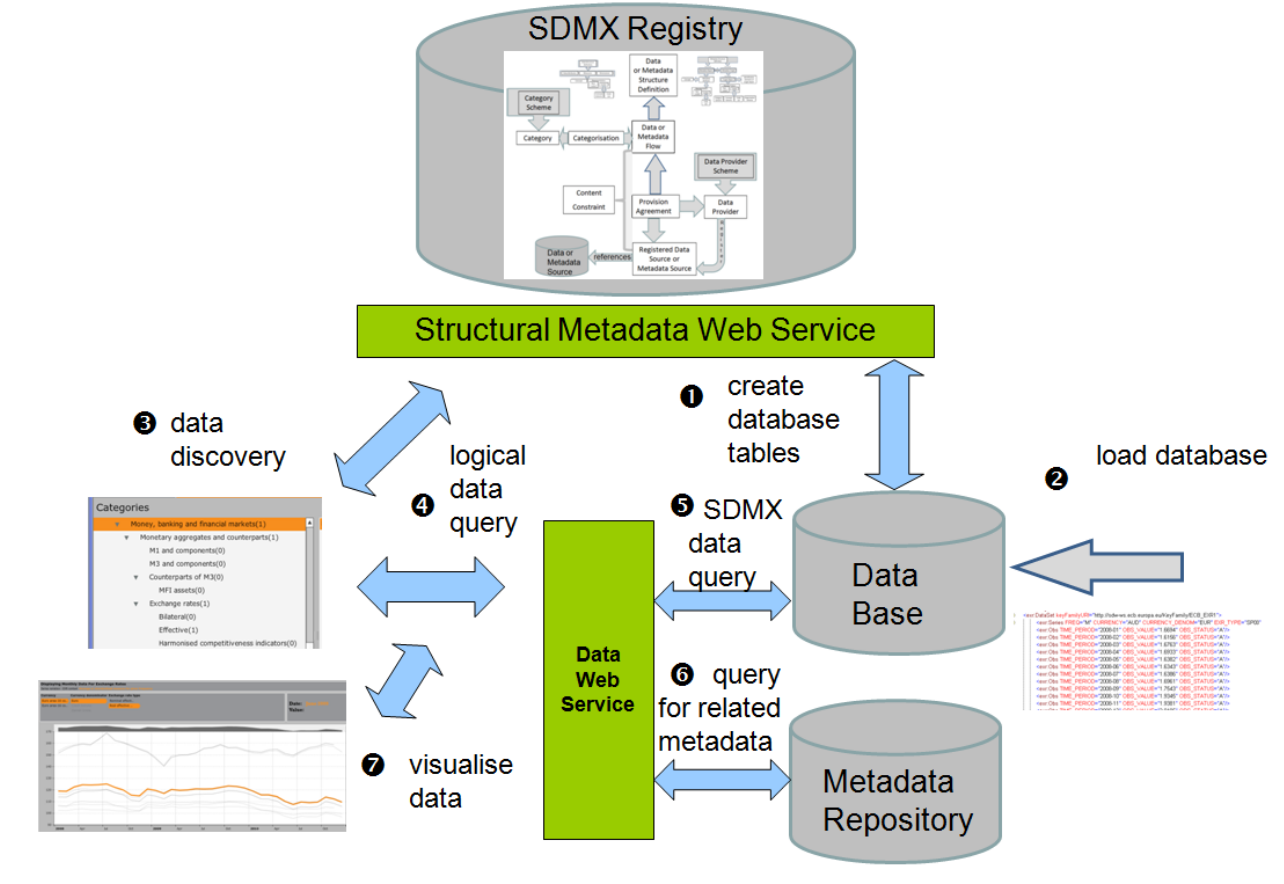

In the following, we illustrate the processes from this use case

in a flow diagram by SDMX and describe what activities are enabled in

this use case by having statistics described in a machine-readable

format.

Figure: Process flow diagram by SDMX [SDMX 2.1]

Benefits:

- A structural metadata source (registry) can collect metadata

about statistical data.

- A data web service (publisher) can register statistical data

in a registry, and can provide statistical data from a database and

metadata from a metadata repository for consumers. For that, the

publisher creates database tables (see 1 in figure), and loads

statistical data in a database and metadata in a metadata repository.

- A consumer can discover data from a registry (3) and

can automatically create a query to the publisher for selected

statistical data (4).

- The publisher can translate the query to a query to its

database (5) as well as metadata repository (6) and return the

statistical data and metadata.

- The consumer can visualise the returned statistical data and

metadata.
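The interplay of registry, publisher and consumer listed above can be sketched with in-memory stand-ins. This is a minimal sketch, not an SDMX implementation: the class names, method names and the sample population figures are assumptions made for illustration.

```python
# In-memory stand-ins for the three actors; all names and data are hypothetical.
class Registry:
    """Collects structural metadata about datasets and who publishes them."""

    def __init__(self):
        self._entries = {}

    def register(self, dataset_id, dimensions, publisher):
        self._entries[dataset_id] = {"dimensions": dimensions, "publisher": publisher}

    def discover(self, dimension):
        """Return ids of registered datasets that use the given dimension."""
        return sorted(d for d, e in self._entries.items() if dimension in e["dimensions"])

    def publisher_of(self, dataset_id):
        return self._entries[dataset_id]["publisher"]


class Publisher:
    """Holds observations and answers queries that fix some dimension values."""

    def __init__(self, registry):
        self._registry = registry
        self._data = {}

    def publish(self, dataset_id, dimensions, observations):
        # Load the data, then register its structure with the registry.
        self._data[dataset_id] = observations
        self._registry.register(dataset_id, dimensions, self)

    def query(self, dataset_id, **fixed):
        return [o for o in self._data[dataset_id]
                if all(o.get(k) == v for k, v in fixed.items())]


registry = Registry()
publisher = Publisher(registry)
publisher.publish("population", ["area", "year"], [
    {"area": "UK", "year": 2011, "value": 60},
    {"area": "Wales", "year": 2011, "value": 3},
])

# Consumer: discover a dataset, then query the publisher named by the registry.
found = registry.discover("area")
result = registry.publisher_of(found[0]).query(found[0], area="Wales")
print(result)
```

The consumer never needs prior knowledge of the publisher; it only relies on the metadata held by the registry, which is the point of the self-updating dissemination system.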

Requirements:

The SDMX Web Dissemination Use Case can be concretised by

several sub-use cases, detailed in the following sections.

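3.2 Publisher Use Case: UK government financial data from Combined Online Information System (COINS)

(This use case has been summarised from Ian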

Dickinson et al. [COINS])

More and more organisations want to publish statistics on the

web, for reasons such as increasing transparency and trust. Although

in the ideal case, published data can be understood by both humans and

machines, data is often simply published as CSV, PDF, XLS etc.,

lacking elaborate metadata, which makes free usage and analysis

difficult.

Therefore, the goal in this use case is to use a

machine-readable and application-independent description of common

statistics with use of open standards, to foster usage and innovation

on the published data.

In the "COINS as Linked Data" project [COINS], the Combined Online Information System

(COINS) shall be published using a standard Linked Data vocabulary.

Via the Combined Online Information System (COINS), HM

Treasury, the principal custodian of financial data for the UK

government, releases previously restricted financial information about

government spending.

The COINS data has a hypercube structure. It describes financial

transactions using seven independent dimensions (time, data-type,

department etc.) and one dependent measure (value). Also, it allows

thirty-three attributes that may further describe each transaction.

COINS is an example of one of the more complex statistical

datasets being published via data.gov.uk.

Part of the complexity of COINS arises from the nature of the

data being released.

The published COINS datasets cover expenditure related to five

different years (2005–06 to 2009–10). The actual COINS database at HM

Treasury is updated daily. In principle at least, multiple snapshots

of the COINS data could be released through the year.

According to the COINS as Linked Data project, the reasons for

publishing COINS as Linked Data are threefold:

- using open standard representation makes it easier to work

with the data with available technologies and promises innovative

third-party tools and usages

- individual transactions and groups of transactions are given

an identity, and so can be referenced by web address (URL), to allow

them to be discussed, annotated, or listed as source data for

articles or visualizations

- cross-links between linked-data datasets allow for much

richer exploration of related datasets

The COINS use case leads to the following challenges:

- The actual data and its hypercube structure are to be

represented separately so that an application first can examine the

structure before deciding to download the actual data, i.e., the

transactions. The hypercube structure also defines for each dimension

and attribute a range of permitted values that are to be represented.

- An access or query interface to the COINS data, e.g., via a

SPARQL endpoint or the linked data API, is planned. Queries that are

expected to be interesting are: "spending for one department", "total

spending by department", "retrieving all data for a given

observation".

- Also, the publisher favours a representation that is both as

self-descriptive as possible, i.e., others can link to and download

fully-described individual transactions and as compact as possible,

i.e., information is not unnecessarily repeated.

- Moreover, the publisher is thinking about the possible

benefit of publishing slices of the data, e.g., datasets that fix all

dimensions but the time dimension. For instance, such slices could be

particularly interesting for visualisations or comments. However,

depending on the number of Dimensions, the number of possible slices

can become large which makes it difficult to select all interesting

slices.

- An important benefit of linked data is that we are able to

annotate data, at a fine-grained level of detail, to record

information about the data itself. This includes where it came from -

the provenance of the data - but could include annotations from

reviewers, links to other useful resources, etc. Being able to trust

that data to be correct and reliable is a central value for

government-published data, so recording provenance is a key

requirement for the COINS data.

- A challenge also is the size of the data, especially since it

is updated regularly. Five data files already contain between 3.3 and

4.9 million rows of data.
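The first two challenges above, keeping the hypercube structure separate from the transactions and answering queries such as "total spending by department", can be sketched as follows. The structure shown is deliberately reduced and hypothetical: the department and data-type codes, the field names and the amounts are invented, and COINS itself has seven dimensions and thirty-three attributes.

```python
# Hypothetical, much reduced structure definition kept apart from the data.
STRUCTURE = {
    "dimensions": {
        "time": {"2005-06", "2006-07", "2007-08", "2008-09", "2009-10"},
        "department": {"DeptA", "DeptB"},   # invented codes
        "data_type": {"outturn", "plan"},   # invented codes
    },
    "measure": "value",
}


def validate(transaction, structure=STRUCTURE):
    """Return a list of problems found when checking one transaction."""
    problems = []
    for name, permitted in structure["dimensions"].items():
        if name not in transaction:
            problems.append(f"missing dimension: {name}")
        elif transaction[name] not in permitted:
            problems.append(f"value not permitted for {name}: {transaction[name]}")
    if structure["measure"] not in transaction:
        problems.append("missing measure")
    return problems


def total_by(transactions, dimension):
    """Total of the measure per value of one dimension."""
    totals = {}
    for t in transactions:
        totals[t[dimension]] = totals.get(t[dimension], 0) + t["value"]
    return totals


# Invented transactions that conform to the structure above.
transactions = [
    {"time": "2009-10", "department": "DeptA", "data_type": "outturn", "value": 100},
    {"time": "2009-10", "department": "DeptB", "data_type": "outturn", "value": 40},
    {"time": "2008-09", "department": "DeptA", "data_type": "plan", "value": 60},
]
print(total_by(transactions, "department"))
```

An application can inspect `STRUCTURE` alone to decide whether the dataset is of interest before it downloads any transactions.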

Requirements:

3.3 Publisher Use Case: Publishing Excel

Spreadsheets as Linked Data

(Part of this use case has been contributed by

Rinke Hoekstra. See CEDA_R

and Data2Semantics for

more information.)

Not only in government, there is a need to publish considerable

amounts of statistical data to be consumed in various (also

unexpected) application scenarios. Typically, Microsoft Excel sheets

are made available for download. Those Excel sheets contain single

spreadsheets with several multidimensional data tables, having a name

and notes, as well as column values, row values, and cell values.

Benefits:

- The goal in this use case is to publish spreadsheet

information in a machine-readable format on the web, e.g., so that

crawlers can find spreadsheets that use a certain column value. The

published data should represent and make available for queries the

most important information in the spreadsheets, e.g., rows, columns,

and cell values.

- For instance, a goal of the CEDA_R and Data2Semantics projects is
to publish and harmonize Dutch historical census data (from 1795
onwards). These censuses are currently only available as Excel
spreadsheets (obtained by data entry) that closely mimic the way in
which the data was originally published; they shall be published as
Linked Data.

Challenges in this use case:

- All context and so all meaning of the measurement point is

expressed by means of dimensions. The pure number is the star of an

ego-network of attributes or dimensions. In an RDF representation it

is then easily possible to define hierarchical relationships between

the dimensions (that can be exemplified further) as well as mapping

different attributes across different value points. This way a

harmonization among variables is performed around the measurement

points themselves.

- In historical research, until now, harmonization across

datasets is performed by hand, and in subsequent iterations of a

database: it is very hard to trace back the provenance of decisions

made during the harmonization procedure.

- Combining Data Cube with SKOS [SKOS] to allow for cross-location and

cross-time historical analysis

- Novel visualisation of census data

- Integration with provenance vocabularies, e.g., PROV-O, for

tracking of harmonization steps

- These challenges may seem to be particular to the field of

historical research, but in fact apply to government information at

large. Government is not a single body that publishes information at

a single point in time. Government consists of multiple (altering)

bodies, scattered across multiple levels, jurisdictions and areas.

Publishing government information in a consistent, integrated manner

requires exactly the type of harmonization required in this use case.

- Excel sheets provide much flexibility in arranging

information. It may be necessary to limit this flexibility to allow

automatic transformation.

- There are many spreadsheets.

- Semi-structured information, e.g., notes about lineage of

data cells, may be impossible to formalize.

Existing work:

- Another concrete example is the Stats2RDF

project that intends to publish biomedical statistical data that is

represented as Excel sheets. Here, Excel files are first translated

into CSV and then translated into RDF.

- Some of the challenges are met by the work on an ISO

Extension to SKOS [XKOS].

Requirements:

3.4 Publisher Use Case: Publishing

hierarchically structured data from StatsWales and Open Data

Communities

(Use case has been taken from [QB4OLAP] and from discussions at publishing-statistical-data

mailing list)

It often comes up in statistical data that you have some kind of

'overall' figure, which is then broken down into parts.

Example (in pseudo-turtle RDF):

ex:obs1
    sdmx:refArea ex:UK ;
    sdmx:refPeriod "2011" ;
    ex:population "60" .

ex:obs2
    sdmx:refArea ex:England ;
    sdmx:refPeriod "2011" ;
    ex:population "50" .

ex:obs3
    sdmx:refArea ex:Scotland ;
    sdmx:refPeriod "2011" ;
    ex:population "5" .

ex:obs4
    sdmx:refArea ex:Wales ;
    sdmx:refPeriod "2011" ;
    ex:population "3" .

ex:obs5
    sdmx:refArea ex:NorthernIreland ;
    sdmx:refPeriod "2011" ;
    ex:population "2" .

We are looking for the best way (in the context of the RDF/Data

Cube/SDMX approach) to express that the values for

England/Scotland/Wales/Northern Ireland ought to add up to the value

for the UK and constitute a more detailed breakdown of the overall UK

figure. Since we might also have population figures for France,

Germany, EU27, it is not as simple as just taking a

qb:Slice

where you fix the time period and the measure.
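The consistency condition described above, that the parts add up to the whole, can be written down as a simple check. In this sketch the values are those of the example, but their assignment to areas and the `PARTS` relation are assumptions; how such a relation should be expressed in the vocabulary is exactly the open question of this use case.

```python
# Values from the example; the mapping of values to areas is assumed.
population = {
    ("UK", "2011"): 60,
    ("England", "2011"): 50,
    ("Scotland", "2011"): 5,
    ("Wales", "2011"): 3,
    ("Northern Ireland", "2011"): 2,
}

# Hypothetical breakdown relation: which areas are parts of which whole.
PARTS = {"UK": ["England", "Scotland", "Wales", "Northern Ireland"]}


def breakdown_is_consistent(whole, period, data=population, parts=PARTS):
    """True if the values of the parts add up to the value of the whole."""
    return sum(data[(p, period)] for p in parts[whole]) == data[(whole, period)]


print(breakdown_is_consistent("UK", "2011"))
```

Figures for France, Germany or EU27 could be added to `population` without affecting the check, because the breakdown relation, not the slice, decides which observations belong together.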

Similarly, Etcheverry and Vaisman [QB4OLAP]

present the use case to publish household data from StatsWales and Open

Data Communities.

This multidimensional data contains for each fact a time

dimension with one level Year and a location dimension with levels

Unitary Authority, Government Office Region, Country, and ALL.

The unit of measure is 1000 households.

In this use case, one wants to publish not only a dataset on the

bottom-most level, i.e., the number of households at each

Unitary Authority in each year, but also a dataset on more aggregated

levels.

For instance, in order to publish a dataset with the number of

households at each Government Office Region per year, one needs to

aggregate the measure of each fact having the same Government Office

Region using the SUM function.

Importantly, one would like to maintain the relationship between

the resulting datasets, i.e., the levels and aggregation functions.
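The roll-up described above can be sketched as a SUM over all facts that share the same parent member, while recording which level and aggregation function produced the result. The member codes, the hierarchy and the household figures below are invented for illustration.

```python
from collections import defaultdict

# Hypothetical hierarchy: Unitary Authority -> Government Office Region.
REGION_OF = {"UA1": "RegionA", "UA2": "RegionA", "UA3": "RegionB"}

# Invented facts at the bottom level:
# (unitary authority, year) -> households in units of 1000.
households = {
    ("UA1", 2007): 10, ("UA2", 2007): 20, ("UA3", 2007): 7,
    ("UA1", 2008): 11, ("UA2", 2008): 21, ("UA3", 2008): 8,
}


def roll_up(facts, parent_of):
    """Aggregate facts to the parent level using SUM, keeping the year."""
    totals = defaultdict(int)
    for (member, year), value in facts.items():
        totals[(parent_of[member], year)] += value
    return dict(totals)


# Keep the relationship to the source dataset alongside the aggregated facts.
rolled = {
    "level": "Government Office Region",
    "derived_from_level": "Unitary Authority",
    "aggregation": "SUM",
    "facts": roll_up(households, REGION_OF),
}
print(rolled["facts"])
```

Storing the level and the aggregation function with the derived dataset is what allows a consumer to tell that the two datasets are related rather than independent.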

Again, this use case does not simply need a selection (or "dice"

in OLAP context) where one fixes the time period dimension.

Requirements:

3.5 Publisher Use Case: Publishing slices

of data about UK Bathing Water Quality

(Use case has been provided by Epimorphics

Ltd, in their UK

Bathing Water Quality deployment)

As part of their work with data.gov.uk and the UK Location Programme

Epimorphics Ltd have been working to pilot the publication of both

current and historic bathing water quality information from the UK Environment

Agency as Linked Data.

The UK has a number of areas, typically beaches, that are

designated as bathing waters where people routinely enter the water.

The Environment Agency monitors and reports on the quality of the

water at these bathing waters.

The Environment Agency's data can be thought of as structured

in 3 groups:

- There is basic reference data describing the bathing waters

and sampling points

- There is a data set "Annual Compliance Assessment Dataset"

giving the rating for each bathing water for each year it has been

monitored

- There is a data set "In-Season Sample Assessment Dataset"

giving the detailed weekly sampling results for each bathing water

The most important dimensions of the data are bathing water,

sampling point, and compliance classification.

Challenges:

- Observations may exhibit a number of attributes, e.g.,

whether there was an abnormal weather exception.

- Relevant slices of both datasets are to be created:

- Annual Compliance Assessment Dataset: all the observations

for a specific sampling point, all the observations for a specific

year.

- In-Season Sample Assessment Dataset: samples for a given

sampling point, samples for a given week, samples for a given year,

samples for a given year and sampling point, latest samples for

each sampling point.

- The use case suggests more arbitrary subsets of the

observations, e.g., collecting all the "latest" observations in a

continuously updated data set.
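The slices and subsets named in the challenges above can be sketched over a handful of sample records. The sampling point identifiers, dates and results are invented, and the function names are assumptions; the sketch only illustrates the difference between fixing dimension values (a slice) and selecting an arbitrary subset such as the latest sample per point.

```python
from datetime import date

# Invented in-season samples: sampling point, sample date, result.
samples = [
    {"point": "SP1", "date": date(2012, 5, 7), "result": "pass"},
    {"point": "SP1", "date": date(2012, 5, 14), "result": "fail"},
    {"point": "SP2", "date": date(2012, 5, 7), "result": "pass"},
    {"point": "SP2", "date": date(2011, 9, 12), "result": "pass"},
]


def slice_by(observations, **fixed):
    """Observations whose dimensions match all fixed values (a slice)."""
    return [o for o in observations if all(o[k] == v for k, v in fixed.items())]


def by_year(observations, year):
    """Samples for a given year."""
    return [o for o in observations if o["date"].year == year]


def latest_per_point(observations):
    """An arbitrary subset rather than a slice: newest sample per point."""
    latest = {}
    for o in observations:
        current = latest.get(o["point"])
        if current is None or o["date"] > current["date"]:
            latest[o["point"]] = o
    return latest


print(len(slice_by(samples, point="SP1")), len(by_year(samples, 2012)))
```

The "latest" subset changes whenever a new sample arrives, which is why it cannot be described by fixed dimension values alone.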

Existing Work:

- The Semantic

Sensor Network ontology (SSN) already provides a way to publish

sensor information. SSN data provides statistical Linked Data and

grounds its data to the domain, e.g., sensors that collect

observations (e.g., sensors measuring average of temperature over

location and time).

- A number of organisations, particularly in the Climate and

Meteorological area already have some commitment to the OGC

"Observations and Measurements" (O&M) logical data model, also

published as ISO 19156.

Requirements:

3.6 Publisher Use Case: Eurostat SDMX as

Linked Data

(This use case has been taken from Eurostat Linked Data

Wrapper and Linked

Statistics Eurostat Data, both deployments for publishing Eurostat

SDMX as Linked Data using the draft version of the data cube

vocabulary)

As mentioned already, the ISO standard for exchanging and sharing

statistical data and metadata among organisations is Statistical Data

and Metadata eXchange [SDMX].

Since this standard has proven applicable in many contexts, we adopt

the multidimensional model that underlies SDMX and intend the standard

vocabulary to be compatible with SDMX.

Therefore, in this use case we intend to explain the benefit and

challenges of publishing SDMX data as Linked Data. As one of the main

adopters of SDMX, Eurostat

publishes large amounts of European statistics coming from a data

warehouse as SDMX and other formats on the web. Eurostat also provides

an interface to browse and explore the datasets. However, linking such

multidimensional data to related data sets and concepts would require

downloading of interesting datasets and manual integration. The goal

here is to improve integration with other datasets; Eurostat data

should be published on the web in a machine-readable format, so that

it can be linked with other datasets and freely consumed

by applications. Both Eurostat

Linked Data Wrapper and Linked Statistics

Eurostat Data intend to publish Eurostat

SDMX data as 5-star Linked Open

Data. Eurostat data is partly published as SDMX, partly as tabular

data (TSV, similar to CSV). Eurostat provides a TOC

of published datasets as well as a feed of modified and new datasets.

Eurostat provides a list of used codelists, i.e., ranges

of permitted dimension values. Any Eurostat dataset contains a

varying set of dimensions (e.g., date, geo, obs_status, sex, unit) as

well as measures (generic value, content is specified by dataset,

e.g., GDP per capita in PPS, Total population, Employment rate by

sex).

Benefits:

- Possible implementation of ETL pipelines based on Linked Data

technologies (e.g., LDSpider)

to effectively load the data into a data warehouse for analysis

- Allows useful queries to the data, e.g., comparison of

statistical indicators across EU countries.

- Allows attaching contextual information to statistics during

the interpretation process.

- Allows reuse of single observations from the data.

- Linking to information from other data sources, e.g., for

geo-spatial dimension.

Challenges:

- New Eurostat datasets are added regularly to Eurostat. The

Linked Data representation should automatically provide access to the

most-up-to-date data.

- How to match elements of the geo-spatial dimension to

elements of other data sources, e.g., NUTS, GADM.

- There is a large number of Eurostat datasets, each possibly

containing a large number of columns (dimensions) and rows

(observations). Eurostat publishes more than 5200 datasets, which,

when converted into RDF require more than 350GB of disk space

yielding a dataspace with some 8 billion triples.

- In the Eurostat Linked Data Wrapper, there is a timeout for

transforming SDMX to Linked Data, since Google App Engine is used.

Mechanisms to reduce the amount of data that needs to be translated

would be needed.

- Provide a useful interface for browsing and visualising the

data. One problem is that the data sets have too high dimensionality

to be displayed directly. Instead, one could visualise slices of time

series data. However, for that, one would need to either fix most

other dimensions (e.g., sex) or aggregate over them (e.g., via

average). The selection of useful slices from the large number of

possible slices is a challenge.

- Each dimension used by a dataset has a range of permitted

values that need to be described.

- The Eurostat SDMX as Linked Data use case suggests having

time lines on data aggregated over the gender dimension.

- The Eurostat SDMX as Linked Data use case suggests providing

data on a gender level and on a level aggregating over the gender

dimension.

- Updates to the data

- Eurostat - Linked Data pulls in changes from the original

Eurostat dataset on a weekly basis; the conversion process runs every

Saturday at noon taking into account new datasets along with

updates to existing datasets.

- Eurostat Linked Data Wrapper translates Eurostat

datasets into RDF on the fly so that the most current data is always used. The

problem is only to point users towards the URIs of Eurostat

datasets: Estatwrap provides a feed of modified and new datasets.

Also, it provides a TOC

that could be automatically updated from the Eurostat

TOC.

- Query interface


- Eurostat - Linked Data provides a SPARQL endpoint for the

metadata (not the observations).

- Eurostat Linked Data Wrapper allows and demonstrates how to

use Qcrumb.com to query the data.

- Browsing and visualising interface:

- Eurostat Linked Data Wrapper provides for each dataset an

HTML page showing a visualisation of the data.

Non-requirements:

- One possible application would run validation checks over

Eurostat data. The intended standard vocabulary is to publish the

Eurostat data as-is and is not intended to represent information for

validation (similar to business rules).

- Information on how to match elements of the geo-spatial

dimension to elements of other data sources, e.g., NUTS, GADM, is not

part of a vocabulary recommendation.

Requirements:

3.7 Publisher Use Case: Representing

relationships between statistical data

(This use case has mainly been taken from the

COINS project [COINS])

In several applications, relationships between statistical data

need to be represented.

The goal of this use case is to describe provenance,

transformations, and versioning around statistical data, so that the

history of statistics published on the web becomes clear. This may

also relate to the issue of having relationships between datasets

published.

For instance, the COINS project [COINS]

has at least four perspectives on what they mean by "COINS" data: the

abstract notion of "all of COINS", the data for a particular year, the

version of the data for a particular year released on a given date,

and the constituent graphs which hold both the authoritative data

translated from HMT's own sources and additional supplementary

information which they derive from the data, for example by

cross-linking to other datasets.

Another specific use case is that the Welsh Assembly government

publishes a variety of population datasets broken down in different

ways. For many uses, population broken down by some category (e.g.

ethnicity) is expressed as a percentage. Separate datasets give the

actual counts per category and aggregate counts. In such cases it is

common to talk about the denominator (often DENOM) which is the

aggregate count against which the percentages can be interpreted.
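The relationship between percentages, counts and the denominator can be made concrete with a short sketch. The category names and figures are invented, and the function names are assumptions; the point is only that a percentage cannot be turned back into a count without knowing the aggregate it refers to.

```python
# Invented figures: percentages per category and the aggregate count (DENOM).
percent_by_category = {"A": 62.5, "B": 25.0, "C": 12.5}
denominator = 2400  # aggregate count against which the percentages are read


def counts_from_percentages(percentages, denom):
    """Recover approximate counts per category from percentages and DENOM."""
    return {k: round(denom * p / 100) for k, p in percentages.items()}


def percentages_from_counts(counts):
    """Derive percentages and the denominator from per-category counts."""
    denom = sum(counts.values())
    return {k: 100 * v / denom for k, v in counts.items()}, denom


counts = counts_from_percentages(percent_by_category, denominator)
print(counts)
```

If the percentage dataset and the count dataset are published separately, a declared link between them is what lets a consumer perform this conversion reliably.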

Another example for representing relationships between statistical

data are transformations on datasets, e.g., addition of derived

measures, conversion of units, aggregations, OLAP operations, and

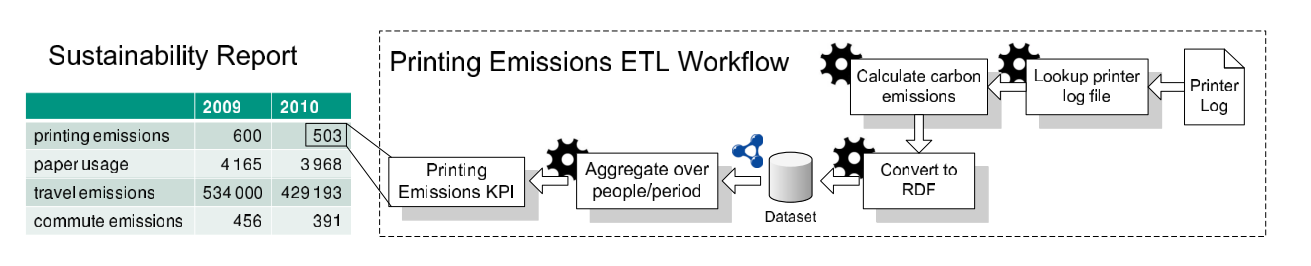

enrichment of statistical data. A concrete example is given by Freitas

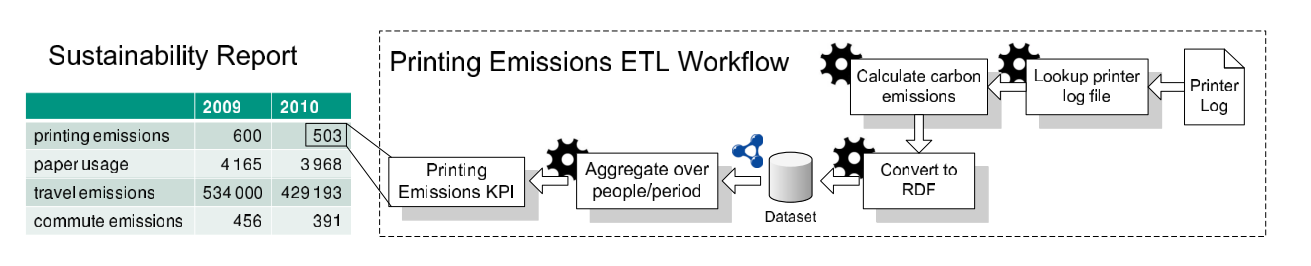

et al. [COGS] and illustrated in

the following figure.

Figure: Illustration of ETL workflows to process

statistics

Here, numbers from a sustainability report have been created by

a number of transformations to statistical data. Different numbers

(e.g., 600 for year 2009 and 503 for year 2010) might have been

created differently, which affects how reliably the two numbers

can be compared.

Benefits:

Making transparent the transformations a dataset has been exposed

to increases trust in the data.

Challenges:

- Operations on statistical data result in new statistical

data, depending on the operation. For instance, in terms of Data

Cube, operations such as slice, dice, roll-up, drill-down will result

in new Data Cubes. This may require representing general

relationships between cubes (as discussed in the publishing-statistical-data

mailing list).

- Should Data Cube support explicit declaration of such
relationships either between separate qb:DataSets or between
measures within a single qb:DataSet (e.g. ex:populationCount
and ex:populationPercent)?

- If so should that be scoped to simple, common relationships

like DENOM or allow expression of arbitrary mathematical relations?

Existing Work:

- Possible relation to Versioning

part of GLD Best Practices Document, where it is specified how to

publish data which has multiple versions.

- The COGS

vocabulary [COGS] is related to

this use case since it may complement the standard vocabulary for

representing ETL pipelines processing statistics.

Requirements:

3.8 Consumer Use Case: Simple chart

visualisations of (integrated) published statistical data

(Use case taken from SMART research project)

Data that is published on the Web is typically visualized by

transforming it manually into CSV or Excel and then creating a

visualization on top of these formats using Excel, Tableau,

RapidMiner, Rattle, Weka etc.

This use case shall demonstrate how statistical data published

on the web can be visualized inside a webpage with little effort, without

using commercial or highly-complex tools.

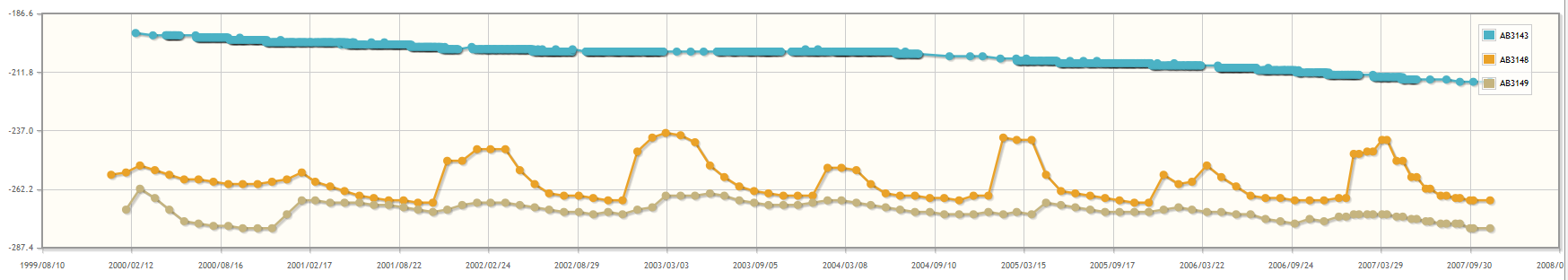

An example scenario is environmental research done within the SMART research project. Here,

statistics about environmental aspects (e.g., measurements about the

climate in the Lower Jordan Valley) shall be visualized for scientists

and decision makers. It should also be possible to integrate

statistics and display them together. The data is available as XML files

on the web. On a separate website, specific parts of the data shall be

queried and visualized in simple charts, e.g., line diagrams.

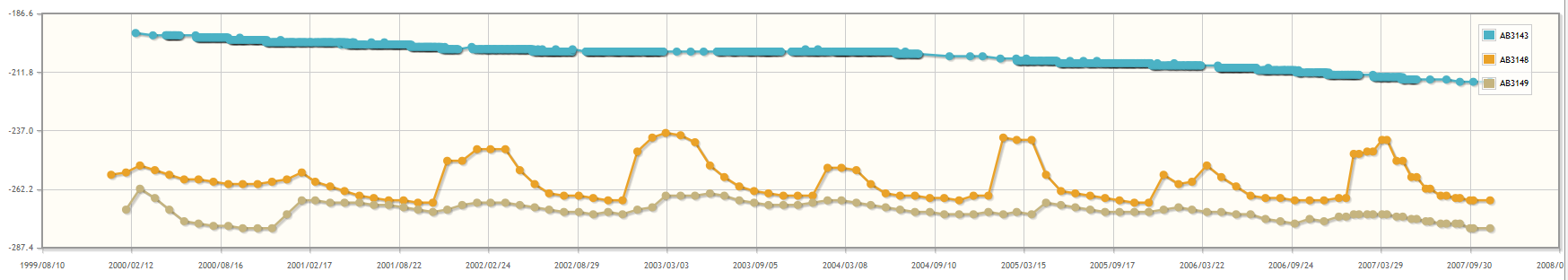

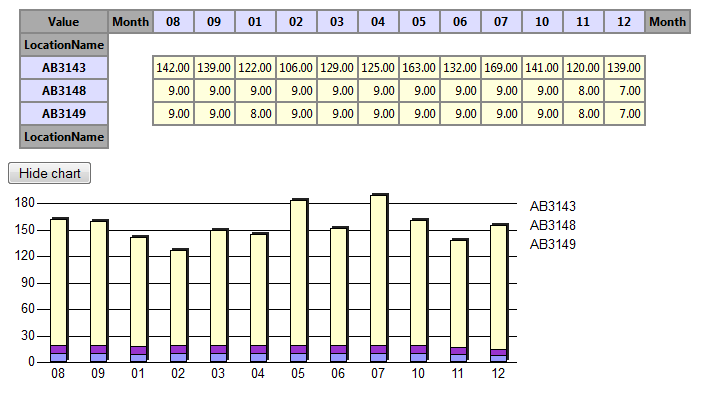

Figure: HTML embedded line chart of an

environmental measure over time for three regions in the lower Jordan

valley

Figure: Showing the same data in a pivot table.

Here, the aggregate COUNT of measures per cell is given.

Challenges of this use case are:

- The difficulties lie in structuring the data appropriately so

that the specific information can be queried.

- Also, data shall be published with having potential

integration in mind. Therefore, e.g., units of measurements need to

be represented.

- Integration becomes much more difficult if publishers use

different measures, dimensions.

Requirements:

3.9 Consumer Use Case: Visualising published statistical data in Google Public Data Explorer

(Use case taken from Google Public Data Explorer (GPDE))

Google Public Data Explorer (GPDE) provides an easy way to visualize and

explore statistical data. Data needs to be in the Dataset

Publishing Language (DSPL) to be uploaded to the data explorer. A

DSPL dataset is a bundle that contains an XML file (the schema) and a

set of CSV files (the actual data). Google provides a tutorial to

create a DSPL dataset from your data, e.g., in CSV. This requires a

good understanding of XML, as well as a good understanding of the data

that shall be visualized and explored.

In this use case, the goal is to take statistical data published

on the web and to transform it into DSPL for visualization and

exploration with as little effort as possible.

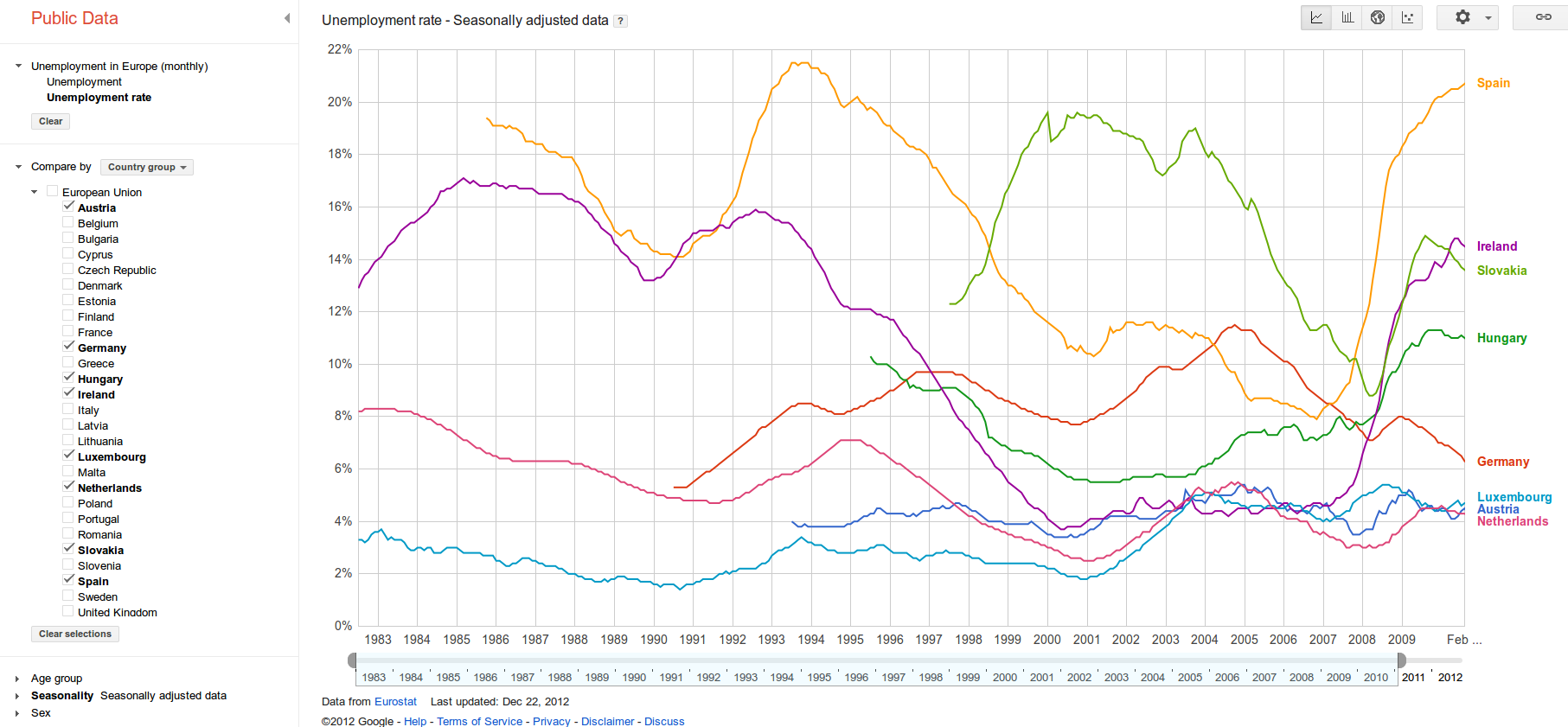

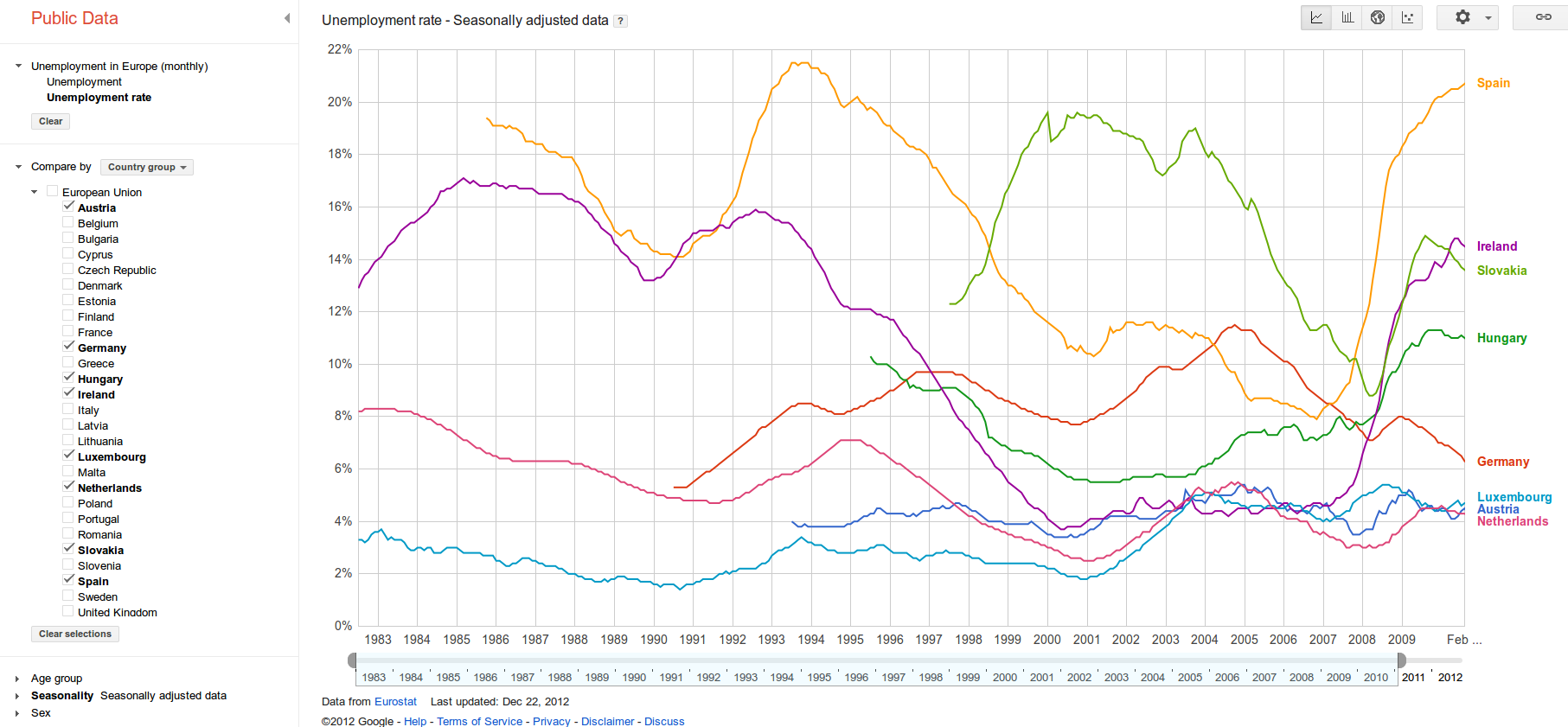

For instance, Eurostat data about the unemployment rate can be downloaded

from the web and explored in GPDE, as shown in the following figure:

Figure: An interactive chart in GPDE for

visualising Eurostat data described with DSPL

Benefits:

- If a standard Linked Data vocabulary is used, visualising and

exploring new data that already is represented using this vocabulary

can easily be done using GPDE.

- Datasets can be first integrated using Linked Data technology

and then analysed using GPDE.

Challenges of this use case are:

- There are different possible approaches, each having

advantages and disadvantages: 1) A consumer C downloads this

data into a triple store; SPARQL queries on this data can be used to

transform the data into DSPL, which is then uploaded and visualized using GPDE.

2) Alternatively, one or more XSLT transformations on the RDF/XML transform the

data into DSPL.

- The technical challenges for the consumer here lie in knowing

where to download what data and how to get it transformed into DSPL

without knowing the data.
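The data half of such a transformation can be sketched as follows: once observations have been retrieved (for example as rows of a SPARQL result), they are written to a CSV file with one column per dimension or measure. This is only an illustrative sketch under stated assumptions. The column names, country codes and values are placeholders, the function `observations_to_csv` is hypothetical, and the accompanying DSPL XML schema file is not generated here.

```python
import csv
import io


def observations_to_csv(observations, columns):
    """Serialise observation dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for obs in observations:
        # Keep only the requested columns, in the requested order.
        writer.writerow({col: obs[col] for col in columns})
    return buffer.getvalue()


# Placeholder rows standing in for query results; the values are not real statistics.
rows = [
    {"country": "AA", "year": 2010, "unemployment_rate": 1.0},
    {"country": "BB", "year": 2010, "unemployment_rate": 2.0},
]
csv_text = observations_to_csv(rows, ["country", "year", "unemployment_rate"])
```

The harder part named in the challenge above remains: choosing the columns requires knowing which dimensions and measures the dataset has, which is exactly the structural information a standard vocabulary would expose.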

Non-requirements:

- DSPL is representative of using statistical data published

on the web in available tools for analysis. Similar tools that may be

automatically covered are: Weka (arff data format), Tableau, SPSS,

STATA, PC-Axis etc.

Requirements:

3.10 Consumer Use Case: Analysing published statistical data with common OLAP systems

(Use case taken from Financial Information Observation System (FIOS))

Online Analytical Processing (OLAP) [OLAP]

is an analysis method on multidimensional data. It is an explorative

analysis method that allows users to interactively view the data from

different angles (rotate, select) or granularities (drill-down,

roll-up), and filter it for specific information (slice, dice).

OLAP systems that first use ETL pipelines to

Extract-Transform-Load relevant data for efficient storage and queries

in a data warehouse and then provide interfaces to issue OLAP queries

on the data are commonly used in industry to analyse statistical data

on a regular basis.

The goal in this use case is to allow analysis of published

statistical data with common OLAP systems [OLAP4LD].

For that, a multidimensional model of the data needs to be

generated. A multidimensional model consists of facts summarised in

data cubes. Facts exhibit measures depending on members of dimensions.

Members of dimensions can be further structured along hierarchies of

levels.
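To make these terms concrete, the following sketch models facts with one member per dimension and a single measure, and shows a slice and a roll-up along a two-level hierarchy. It is an illustration under stated assumptions, not part of any vocabulary: the class `Fact`, the functions `slice_cube` and `roll_up`, the quarter-to-year hierarchy and all values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    members: tuple  # one member per dimension, here (period, company)
    value: float    # the measure


# Hypothetical hierarchy on the period dimension: quarter level -> year level.
QUARTER_TO_YEAR = {"2011-Q1": "2011", "2011-Q2": "2011", "2012-Q1": "2012"}

facts = [
    Fact(("2011-Q1", "Company X"), 10.0),
    Fact(("2011-Q2", "Company X"), 20.0),
    Fact(("2012-Q1", "Company X"), 5.0),
    Fact(("2011-Q1", "Company Y"), 7.0),
]


def slice_cube(cube, dim_index, member):
    """Slice: keep only facts whose dimension `dim_index` equals `member`."""
    return [f for f in cube if f.members[dim_index] == member]


def roll_up(cube, dim_index, parent_of):
    """Roll-up: replace members by their parent level and SUM the measure."""
    totals = defaultdict(float)
    for fact in cube:
        members = list(fact.members)
        members[dim_index] = parent_of[members[dim_index]]
        totals[tuple(members)] += fact.value
    return dict(totals)


by_year = roll_up(facts, 0, QUARTER_TO_YEAR)
only_x = slice_cube(facts, 1, "Company X")
```

SUM is chosen here only for illustration; which aggregation function is appropriate depends on the measure.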

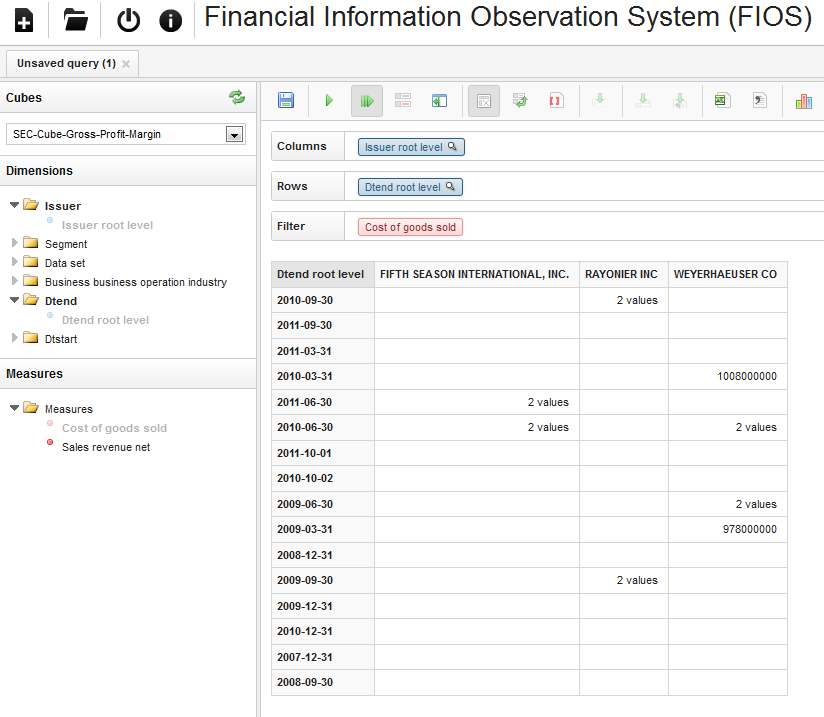

An example scenario of this use case is the Financial Information

Observation System (FIOS) [FIOS],

where XBRL data provided by the SEC on the web is to be re-published

as Linked Data so that stakeholders can explore and analyse it in the

web-based OLAP client Saiku.

The following figure shows an example of using FIOS. Here, for

three different companies, the cost of goods sold as disclosed in XBRL

documents is analysed. Each cell shows either the number of

disclosures or, if only one is available, the actual value in USD:

Figure: Example of using FIOS for OLAP

operations on financial data

Benefits:

- OLAP operations cover typical business requirements, e.g.,

slice, dice, drill-down.

- OLAP frontends are intuitive, interactive, explorative and fast.

Their interfaces are well known to many people in industry.

- OLAP functionality is provided by many tools that may be reused.

Challenges:

- ETL pipeline needs to automatically populate a data

warehouse. Common OLAP systems use relational databases with a star

schema.

- A problem lies in the strict separation between queries for

the structure of data (metadata queries), and queries for actual

aggregated values (OLAP operations).

- Another problem lies in defining Data Cubes without greater

insight into the data beforehand.

- Depending on the expressivity of the OLAP queries (e.g.,

aggregation functions, hierarchies, ordering), performance plays an

important role.

- OLAP systems have to cater for possibly missing information

(e.g., the aggregation function or a human readable label).
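The first challenge, populating a relational star schema, can be sketched with an in-memory SQLite database: one table per dimension, one fact table referencing them, and an OLAP-style query expressed as a join with GROUP BY. This is a minimal sketch, not the FIOS implementation; the table and column names, the company names and all amounts are hypothetical placeholders.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Star schema: two dimension tables and one fact table (names are illustrative).
conn.executescript("""
CREATE TABLE dim_company (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE dim_period  (id INTEGER PRIMARY KEY, year INTEGER, quarter INTEGER);
CREATE TABLE fact_disclosure (
    company_id INTEGER REFERENCES dim_company(id),
    period_id  INTEGER REFERENCES dim_period(id),
    amount_usd REAL
);
""")

# "Load" step of the pipeline, with placeholder rows.
conn.executemany("INSERT INTO dim_company VALUES (?, ?)",
                 [(1, "Company X"), (2, "Company Y")])
conn.executemany("INSERT INTO dim_period VALUES (?, ?, ?)",
                 [(1, 2011, 1), (2, 2011, 2)])
conn.executemany("INSERT INTO fact_disclosure VALUES (?, ?, ?)",
                 [(1, 1, 100.0), (1, 2, 150.0), (2, 1, 80.0)])


def roll_up_by_year(connection):
    """Roll up from quarter to year: COUNT and SUM of disclosures per company and year."""
    query = """
        SELECT c.name, p.year, COUNT(*), SUM(f.amount_usd)
        FROM fact_disclosure f
        JOIN dim_company c ON c.id = f.company_id
        JOIN dim_period  p ON p.id = f.period_id
        GROUP BY c.name, p.year
        ORDER BY c.name, p.year
    """
    return connection.execute(query).fetchall()


result = roll_up_by_year(conn)
```

Note that the aggregation functions are hard-coded in the query. If the published data does not state which aggregation is meaningful for a measure, the OLAP system has to fall back on a default, which is the missing-information challenge listed above.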

Requirements:

3.11 Registry Use Case: Registering published statistical data in data catalogs

(Use case motivated by Data Catalog vocabulary)

After statistics have been published as Linked Data, the question

remains how to communicate the publication and let users discover the

statistics. There are catalogs to register datasets, e.g., CKAN, datacite.org, da|ra, and Pangea. Those catalogs require specific

configurations to register statistical data.

The goal of this use case is to demonstrate how to expose and

distribute statistics after publication, for instance, by allowing

automatic registration of statistical data in such catalogs for

finding and evaluating datasets. To solve this issue, it should be

possible to transform the published statistical data into formats that

can be used by data catalogs.
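Such a transformation could look roughly like the sketch below, which maps descriptive metadata of a published dataset to a generic catalog record serialised as JSON. All names are assumptions made for illustration: the input keys (`label`, `comment`, `subjects`, `dumps`), the output keys and the function `to_catalog_record` do not correspond to the schema of CKAN or any other specific catalog, and the URLs are examples.

```python
import json


def to_catalog_record(dataset):
    """Map dataset metadata to a generic, catalog-style JSON record."""
    record = {
        "title": dataset["label"],
        "description": dataset.get("comment", ""),
        "keywords": sorted(dataset.get("subjects", [])),
        "distributions": [
            {"access_url": url, "format": fmt}
            for url, fmt in dataset.get("dumps", [])
        ],
    }
    return json.dumps(record, indent=2, sort_keys=True)


# Hypothetical metadata of a published statistical dataset.
example = {
    "label": "Example population statistics",
    "comment": "Placeholder description.",
    "subjects": ["population", "demography"],
    "dumps": [("http://example.org/data/population.ttl", "text/turtle")],
}
record_json = to_catalog_record(example)
```

A real registration would additionally need the target catalog's required fields and its submission mechanism, which differ between catalogs.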

A concrete use case is the structured collection of RDF Data Cube

Vocabulary datasets in the PlanetData Wiki. This list is supposed to

describe statistical datasets on a higher level - for easy discovery

and selection - and to provide a useful overview of RDF Data Cube

deployments in the Linked Data cloud.

Unanticipated Uses:

- If data catalogs contain statistics, they do not expose those

using Linked Data but for instance using CSV or HTML (e.g., Pangea).

It could also be a use case to publish such data using the data cube

vocabulary.

Existing Work:

- The Data

Catalog vocabulary (DCAT) is strongly related to this use case since

it may complement the standard vocabulary for representing statistics

in the case of registering data in a data catalog.

Requirements:

4. Requirements

The use cases presented in the previous section give rise to the

following requirements for a standard representation of statistics.

Requirements are cross-linked with the use cases that motivate them.

The draft version of the vocabulary builds upon SDMX Standards Version 2.0. A

newer version of SDMX, SDMX

Standards, Version 2.1, is available.

The requirement is to at least build upon Version 2.0. If

specific use cases derived from Version 2.1 become available, the

working group may consider building upon Version 2.1.

Background information:

Required by:

4.2 Vocabulary should clarify the use of subsets of observations

There should be a consensus on the issue of flattening or

abbreviating data; one suggestion is to author data without the

duplication, but have the data publication tools "flatten" the compact

representation into standalone observations during the publication

process.
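The suggested "flatten" step can be illustrated as follows: a compact representation states shared dimension values once, and a publication tool copies them onto every observation. This is a sketch under stated assumptions; the dictionary layout with `fixed` and `observations` keys, the function name `flatten`, and the dimension names and values are hypothetical and not part of the vocabulary.

```python
def flatten(compact_slice):
    """Expand a compact slice into standalone observations.

    Each observation receives a copy of the dimension values fixed on the
    slice, plus its own remaining dimension and measure values.
    """
    fixed = compact_slice["fixed"]
    return [{**fixed, **obs} for obs in compact_slice["observations"]]


# Compact form: area and sex are stated once for the whole slice.
compact = {
    "fixed": {"refArea": "Region A", "sex": "total"},
    "observations": [
        {"refPeriod": "2010", "population": 100},
        {"refPeriod": "2011", "population": 110},
    ],
}
flat = flatten(compact)
```

After flattening, each observation can be queried on its own without consulting the slice it came from, at the cost of repeating the shared values.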

Background information:

Required by:

4.3 Vocabulary should recommend a mechanism to support hierarchical code lists

First, hierarchical code lists may be supported via SKOS [SKOS], to allow for cross-location and cross-time

analysis of statistical datasets.

Second, one can think of non-SKOS hierarchical code lists, e.g., if

simple skos:narrower / skos:broader relationships are not sufficient or if a vocabulary uses specific

hierarchical properties, e.g., geo:containedIn.

Also, the use of hierarchy levels needs to be clarified. It has been

suggested to allow skos:Collections as value of qb:codeList.
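What a consumer needs from a hierarchical code list can be sketched independently of the property used to express it: given each code's parent (whether stated via skos:broader or a property such as geo:containedIn), it must be possible to find all ancestors of a code and its level. The code identifiers and the functions `ancestors` and `level` below are hypothetical and serve only as an illustration.

```python
# Hypothetical code list: each code maps to its parent code, or None at the top.
BROADER = {
    "city-1": "region-1",
    "city-2": "region-1",
    "region-1": "country-1",
    "country-1": None,
}


def ancestors(code, broader):
    """Return all transitive parents of `code`, nearest first."""
    result = []
    seen = {code}  # guards against accidental cycles in the code list
    parent = broader.get(code)
    while parent is not None and parent not in seen:
        result.append(parent)
        seen.add(parent)
        parent = broader.get(parent)
    return result


def level(code, broader):
    """Depth of `code` in the hierarchy; top-level codes have level 0."""
    return len(ancestors(code, broader))
```

Here levels are derived from the parent relation. Declaring them explicitly, as in the suggestion about collections above, would avoid this computation and would also cover hierarchies in which sibling codes do not sit at the same depth.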

Background information:

Required by:

4.4 Vocabulary should define relationship to ISO 19156 - Observations & Measurements

A number of organisations, particularly in the climate and

meteorological area, already have some commitment to the OGC

"Observations and Measurements" (O&M) logical data model, also

published as ISO 19156. Are there any statements about compatibility

and interoperability between O&M and Data Cube that can be made to

give guidance to such organisations?

Background information:

Required by:

4.5 There should be a recommended mechanism to allow for publication of aggregates which cross multiple dimensions

Background information:

Required by:

4.6 There should be a recommended way of declaring relations between cubes

Background information:

Required by:

Background information:

Required by:

4.8 There should be mechanisms and recommendations regarding publication and consumption of large amounts of statistical data

Background information:

Required by:

4.9 There should be a recommended way to communicate the availability of published statistical data to external parties and to allow automatic discovery of statistical data

Clarify the relationship between DCAT and QB.

Background information:

Required by:

A. Acknowledgements

We thank John Erickson, Rinke Hoekstra, Bernadette Hyland, Aftab

Iqbal, Dave Reynolds, Biplav Srivastava, Villazón-Terrazas for

feedback and input.

References

- [COG] SDMX Content Oriented Guidelines, http://sdmx.org/?page_id=11

- [COGS] Freitas, A., Kämpgen, B., Oliveira, J. G., O'Riain, S., & Curry, E. (2012). Representing Interoperable Provenance Descriptions for ETL Workflows. ESWC 2012 Workshop Highlights (pp. 1-15). Springer Verlag, 2012 (in press). (Extended Paper published in Conf. Proceedings.) http://andrefreitas.org/papers/preprint_provenance_ETL_workflow_eswc_highlights.pdf

- [COINS] Ian Dickinson et al., COINS as Linked Data, http://data.gov.uk/resources/coins, last visited on Jan 9 2013

- [FIOS] Andreas Harth, Sean O'Riain, Benedikt Kämpgen. Submission XBRL Challenge 2011. http://xbrl.us/research/appdev/Pages/275.aspx

- [FOWLER97] Fowler, Martin (1997). Analysis Patterns: Reusable Object Models. Addison-Wesley. ISBN 0201895420.

- [LOD] Linked Data, http://linkeddata.org/

- [OLAP] Online Analytical Processing Data Cubes, http://en.wikipedia.org/wiki/OLAP_cube

- [OLAP4LD] Kämpgen, B. and Harth, A. (2011). Transforming Statistical Linked Data for Use in OLAP Systems. I-Semantics 2011. http://www.aifb.kit.edu/web/Inproceedings3211

- [QB-2010] RDF Data Cube vocabulary, http://publishing-statistical-data.googlecode.com/svn/trunk/specs/src/main/html/cube.html

- [QB-2013] RDF Data Cube vocabulary, http://www.w3.org/TR/vocab-data-cube/

- [QB4OLAP] Etcheverry, Vaismann. QB4OLAP : A New Vocabulary for OLAP Cubes on the Semantic Web. http://publishing-multidimensional-data.googlecode.com/git/index.html

- [RDF] Resource Description Framework, http://www.w3.org/RDF/

- [SCOVO] The Statistical Core Vocabulary, http://sw.joanneum.at/scovo/schema.html; SCOVO: Using Statistics on the Web of data, http://sw-app.org/pub/eswc09-inuse-scovo.pdf

- [SKOS] Simple Knowledge Organization System, http://www.w3.org/2004/02/skos/

- [SMDX] SMDX - SDMX User Guide Version 2009.1, http://sdmx.org/wp-content/uploads/2009/02/sdmx-userguide-version2009-1-71.pdf, last visited Jan 8 2013.

- [SMDX 2.1] SDMX 2.1 User Guide Version. Version 0.1 - 19/09/2012. http://sdmx.org/wp-content/uploads/2012/11/SDMX_2-1_User_Guide_draft_0-1.pdf, last visited on 8 Jan 2013.

- [XKOS] Extended Knowledge Organization System (XKOS), https://github.com/linked-statistics/xkos