Proposal: Media Capture and Streams Settings API v6

Editor's Draft 12 December 2012

- Author:

- Travis Leithead, Microsoft

Copyright © 2012 W3C® (MIT, ERCIM, Keio), All Rights Reserved. W3C liability, trademark and document use rules apply.


This proposal describes additions and suggested changes to the Media Capture and Streams specification in order to support the goal of device settings retrieval and modification. This proposal (v6) incorporates feedback from the public-media-capture mailing list on the Settings v5 proposal. The v5 proposal builds on four prior proposals with the same goal [v4] [v3] [v2] [v1].

For those of you who have been following along, this section introduces you to some of the changes from the last version.

For any of you just joining us, feel free to skip on down to the next section.

As I was looking at source objects in V5, and starting to rationalize what properties of the source should go on the track, vs. on the source object, I got the notion that the source object really wasn't providing much value aside from a logical separation for properties of the track vs. source. From our last telecon, it was apparent that most settings needed to be on the tracks as stateful information about the track. So, then what was left on the source?

EKR's comments about wondering what happens when multiple apps (or tabs within a browser) go to access and manipulate a source also resonated with me. He proposed that this either be not allowed (exclusive locks on devices by apps), or that this be better defined somehow.

In thinking about this and wanting to have a better answer than the exclusive lock route, it occurred to me that when choosing to grant a second app access to the same device, we might offer more than one choice. One choice that we've assumed so far is to share one device among two apps, with either app having the ability to modify the device's settings. Another option that I explore in this proposal is the concept of granting a read-only version of the device. There may be a primary owner in another app, or simply in another track instance, that can change the settings; the other track(s) can observe the changes, but cannot apply any changes of their own.

While also thinking about allowing getUserMedia to provide media other than strictly cameras and microphones (such as a video from the user's hard drive, an audio file, or even just a static image), it became apparent that the source for a track might sometimes be read-only anyway: you wouldn't be allowed to adjust the metadata of a video streaming from the user's hard drive.

So the "read-only" media source concept was born.

The set of source objects was now starting to grow. I could foresee it being difficult to rationalize/manage these objects, their purpose and/or properties into the future, and as I thought about all of these points together, it became clear that having an explicit object defined for various source devices/things was unnecessary overhead and complication.

As such, the source objects that came into existence in the v4 proposal as track sub-types, and were changed in v5 to be objects tied to tracks, are now gone. Instead, track sources have been simplified into a single string identifier on a track, which allows the app to understand how access to various things about a track behaves given a certain type of source (or no source).

In order to clarify the track's behavior under various source types, I also had to get crisp about the things called "settings" and the things called "constraints" and how they all work together. I think this proposal gets it right, and provides the right APIs for applications to manipulate what they want to in an easy to rationalize manner.

And rather unfortunately (due to the name of the proposal), I've removed all notion of the term "settings" from this proposal. The things previously called settings were a combination of constraints and capabilities, and now I've just formalized on constraints and capabilities and given up on "settings" as a separate term. It works--especially with long-lasting constraints and introspection of them.

This proposal establishes the following definitions that I hope are used consistently throughout. (If not please let me know...)

Individual sources have five basic modes that are not directly exposed to an application via any API defined in this spec. The modes are described in this spec for clarification purposes only:

| Source's Mode | Details |
|---|---|
| unknown-authorization | The source hasn't yet been authorized for use by the application. (Authorization occurs via the getUserMedia API.) All sources start out in this mode at the start of the application (though trusted hardware or software environments may automatically pre-authorize certain sources when their use is requested via getUserMedia). Camera or microphone sources that are visible to the user agent can make their existence known to the application in this mode. Other sources like files on the local file system do not. |
| armed | The source has been granted use by the application and is on/ready, but not actively broadcasting any media. This can be the case if a camera source has been authorized, but there are no sinks connected to this source (so no reason to be emitting media yet). Implementations of this specification are advised to include some indicator that a device is armed in their UI so that users are aware that an application may start the source at any time. A conservative user agent would enable some form of UI to show the source as "on" in this mode. |
| streaming | The source has been granted use by the application and is actively streaming media. User agents should provide an indicator to the user that the source is on and streaming in this mode. |
| not-authorized | This source has been forbidden/rejected by the user. |
| off | The source has been turned off, but is still detectable (its existence can still be confirmed) by the application. |

In addition to these modes, a source can be removed (physically in the case of camera/microphone sources, or deleted in the case of a file from the local file system), in which case it is no longer detectable by the application.

The user must remain in control of the source at all times and can cause any state-machine mode transition.

Some sources have an identifier which must be unique to the application (un-guessable by another application) and persistent between application sessions (e.g., the identifier for a given source device/application must stay the same, but not be guessable by another application). Sources that must have an identifier are camera and microphone sources; local file sources are not required to have an identifier. Source identifiers let the application save, identify the availability of, and directly request specific sources.

Other than the identifier, other bits of source identity are never directly available to the application until the user agent connects a source to a track. Once a source has been "released" to the application (either via a permissions UI, pre-configured allow-list, or some other release mechanism) the application will be able to discover additional source-specific capabilities.

Sources have capabilities and state. The capabilities and state are "owned" by the source and are common to any [multiple] tracks that happen to be using the same source (e.g., if two different track objects bound to the same source ask for the same capability or state information, they will get back the same answer).

Sources do not have constraints--tracks have constraints. When a source is connected to a track, it must conform to the constraints present on that track (or set of tracks).

Sources will be released (un-attached) from a track when the track is ended for any reason.

On the track object, sources are represented by a sourceType attribute. The behavior of APIs associated with the source's capabilities and state changes depending on the source type.

A source's state can change dynamically over time due to environmental conditions, sink configurations, or constraint changes. A source's state must always conform to the current set of mandatory constraints that [each of] the tracks it is bound to have defined, and should do its best to conform to the set of optional constraints specified.

A source's state is directly exposed to audio and video track objects through individual read-only attributes. These attributes share the same name as their corresponding capabilities and constraints.

Events are available that signal to the application that source state has changed.

A conforming user-agent must support all the state names defined in this spec.

The values of the supported capabilities must be normalized to the ranges and enumerated types defined in this specification.

Capabilities return the same underlying per-source capabilities, regardless of any user-supplied constraints present on the source (capabilities are independent of constraints).

Source capabilities are effectively constant. Applications should be able to depend on a specific source having the same capabilities for any session.

Constraints may be optional or mandatory. Optional constraints are represented by an ordered list, mandatory constraints are an unordered set. The order of the optional constraints is from most important (at the head of the list) to least important (at the tail of the list).
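
As a rough illustration of the mandatory/optional split described above, the following sketch evaluates a source state against a constraint structure. The `{ mandatory, optional }` shape with `{ min, max }` ranges, and the helper names `satisfies` and `evaluateConstraints`, are assumptions made for this example; they are not definitions from this proposal.

```javascript
// Hypothetical helper: does one state value satisfy one constraint value?
// A constraint value is assumed to be an exact value or a { min, max } range.
function satisfies(value, constraint) {
  if (value === undefined || value === null) return false;
  if (constraint !== null && typeof constraint === "object") {
    if (constraint.min !== undefined && value < constraint.min) return false;
    if (constraint.max !== undefined && value > constraint.max) return false;
    return true;
  }
  return value === constraint;
}

// Mandatory constraints are an unordered set: every one of them must hold.
// Optional constraints are an ordered list: results keep the list order,
// most important first.
function evaluateConstraints(state, constraints) {
  const mandatory = constraints.mandatory || {};
  const optional = constraints.optional || [];
  const mandatoryMet = Object.keys(mandatory).every((name) =>
    satisfies(state[name], mandatory[name])
  );
  const optionalResults = optional.map((entry) => {
    const name = Object.keys(entry)[0];
    return { name, met: satisfies(state[name], entry[name]) };
  });
  return { mandatoryMet, optionalResults };
}

const result = evaluateConstraints(
  { width: 1024, height: 480, frameRate: 30 },
  {
    mandatory: { frameRate: { min: 15 } },
    optional: [{ width: { min: 800, max: 1200 } }, { height: { min: 600 } }],
  }
);
console.log(result.mandatoryMet); // true
console.log(result.optionalResults.map((r) => r.name + ":" + r.met).join(", "));
// width:true, height:false
```

In this sketch an unmet optional constraint is simply reported; only an unmet mandatory constraint would count as a failure.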

Constraints are stored on the track object, not the source. Each track can be optionally initialized with constraints, or constraints can be added afterward through the constraint APIs defined in this spec.

Applying track level constraints to a source is conditional based on the type of source. For example, read-only sources will ignore any specified constraints on the track.

It is possible for two tracks that share the same source to apply contradictory constraints. Under such contradictions, the implementation may be forced to transition the source to the "armed" state until the conflict is resolved.
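
One way to picture a contradiction between two tracks bound to one source is as an empty intersection of the ranges they request. The sketch below assumes `{ min, max }` range constraints; `intersectRanges` is a hypothetical helper, and this proposal does not prescribe how a user agent detects such conflicts.

```javascript
// Hypothetical helper: intersect two { min, max } ranges.
// A missing bound is treated as open. Returns the combined range,
// or null when no single value can satisfy both ranges.
function intersectRanges(a, b) {
  const min = Math.max(a.min ?? -Infinity, b.min ?? -Infinity);
  const max = Math.min(a.max ?? Infinity, b.max ?? Infinity);
  return min <= max ? { min, max } : null;
}

// Two width requests that overlap: the source can serve both tracks.
const compatible = intersectRanges({ min: 640 }, { min: 800, max: 1200 });

// Track A requires a width of at least 1280, track B at most 640: a conflict.
const conflict = intersectRanges({ min: 1280 }, { max: 640 });

console.log(compatible); // { min: 800, max: 1200 }
console.log(conflict); // null
```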

Events are available that allow the application to know when constraints cannot be met by the user agent. These typically occur when the application applies constraints beyond the capability of a source, contradictory constraints, or in some cases when a source cannot sustain itself in over-constrained scenarios (overheating, etc.).

Constraints that are intended for video sources will be ignored by audio sources and vice-versa. Similarly, constraints that are not recognized will be preserved in the constraint structure, but ignored by the application. This will allow future constraints to be defined in a backward compatible manner.
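
The "preserved but ignored" rule can be sketched as a partition of the stored constraint list that never mutates it. The per-kind name lists below are an assumed, partial selection of the state names used in this document, and `partitionConstraints` is a hypothetical helper.

```javascript
// Assumed, illustrative lists of constraint names recognized per track kind.
const KNOWN_CONSTRAINTS = {
  video: ["sourceId", "width", "height", "frameRate", "zoom"],
  audio: ["sourceId", "volume", "gain"],
};

// Split an optional-constraint list into names that would be applied and
// names that would be ignored. The stored list itself is left untouched,
// so unrecognized entries survive for future use.
function partitionConstraints(kind, optional) {
  const known = new Set(KNOWN_CONSTRAINTS[kind]);
  const applied = [];
  const ignored = [];
  for (const entry of optional) {
    const name = Object.keys(entry)[0];
    (known.has(name) ? applied : ignored).push(name);
  }
  return { applied, ignored };
}

const stored = [{ gain: 0.5 }, { width: { min: 800 } }, { futureThing: true }];
const split = partitionConstraints("audio", stored);
console.log(split.applied); // [ 'gain' ]
console.log(split.ignored); // [ 'width', 'futureThing' ]
console.log(stored.length); // 3
```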

A correspondingly-named constraint exists for each corresponding source state name and capability name.

In general, user agents will have more flexibility to optimize the media streaming experience the fewer constraints are applied.

With proposed changes to getUserMedia to support a synchronous API, this proposal enables developer code to directly create [derived] MediaStreamTracks and initialize them with [optional] constraints. It also adds the concept of the "new" readyState for tracks, a state which signifies that the track is not connected to a source [yet].

Below is the track hierarchy: new video and audio media streams are defined to inherit from MediaStreamTrack. The factoring into derived track types allows for state to be conveniently split onto the objects for which they make sense.

This section describes the MediaStreamTrack interface (currently in the Media Capture and Streams document), but makes targeted changes in order to add the "new" state and associated event handler (onstarted). The definition is otherwise identical to the current definition except that the defined constants are replaced by strings (using an enumerated type).

MediaStreamTrack interface

    interface MediaStreamTrack : EventTarget {
        attribute DOMString id;
        readonly attribute DOMString kind;
        readonly attribute DOMString label;
        attribute boolean enabled;
        readonly attribute TrackReadyStateEnum readyState;
        attribute EventHandler onstarted;
        attribute EventHandler onmute;
        attribute EventHandler onunmute;
        attribute EventHandler onended;
    };

id of type DOMString

MediaStream's getTrackById. (This is a preliminary definition, but is expected in the latest editor's draft soon.)

kind of type DOMString, readonly

Issue: Is this attribute really necessary anymore? Perhaps we should drop it since application code will directly create tracks from derived constructors: VideoStreamTrack and AudioStreamTrack?

label of type DOMString, readonly

enabled of type boolean

readyState of type TrackReadyStateEnum, readonly

"new" state after being instantiated.

State transitions are as follows:

stop() API). No source object is attached.

onstarted of type EventHandler

"started" event. The "started" event is fired when this track transitions from the "new" readyState to any other state. This event fires before any other corresponding events like "ended" or "statechanged".

Recommendation: We should add a convenience API to MediaStream for being notified of various track changes like this one. The event would contain a reference to the track, as well as the name of the event that happened. Such a convenience API would fire last in the sequence of such events.

onmute of type EventHandler

onunmute of type EventHandler

onended of type EventHandler

To support the above readyState changes, the following enumeration is defined:

    enum TrackReadyStateEnum {
        "new",
        "live",
        "muted",
        "ended"
    };

| Value | Description |
|---|---|
| new | The track type is new and has not been initialized (connected to a source of any kind). This state implies that the track's label will be the empty string. |
| live | See the definition of the LIVE constant in the current editor's draft. |
| muted | See the definition of the MUTED constant in the current editor's draft. In addition, in this specification the "muted" state can be entered when a track becomes over-constrained. |
| ended | See the definition of the ENDED constant in the current editor's draft. In this specification, once a track enters this state it never exits it. |
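
The readyState rules that survive in this section (tracks begin in "new", a "started" event fires first when leaving "new", and "ended" is terminal) can be modeled with a small sketch. The class name, the event log, the mute/unmute mapping, and the assumption that a track never returns to "new" are all illustrative choices of this example rather than statements from the proposal.

```javascript
// Minimal model of the readyState rules described in this section.
class TrackStateSketch {
  constructor() {
    this.readyState = "new"; // tracks begin in the "new" state
    this.events = []; // names of fired events, in firing order
  }

  // Attempt a transition; returns true if the state actually changed.
  transition(next) {
    // Assumption: "new" cannot be re-entered once it has been left.
    if (!["live", "muted", "ended"].includes(next)) return false;
    // Once "ended", a track never exits that state.
    if (this.readyState === "ended" || this.readyState === next) return false;

    const previous = this.readyState;
    this.readyState = next;

    // "started" fires before any other event caused by the same transition.
    if (previous === "new") this.events.push("started");
    if (next === "ended") this.events.push("ended");
    else if (next === "muted") this.events.push("mute");
    else if (previous === "muted") this.events.push("unmute");
    return true;
  }
}

const track = new TrackStateSketch();
track.transition("ended"); // "new" straight to "ended"
const reopened = track.transition("live"); // ignored: "ended" is terminal
console.log(track.readyState); // ended
console.log(track.events); // [ 'started', 'ended' ]
console.log(reopened); // false
```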

    partial interface MediaStreamTrack {
        readonly attribute SourceTypeEnum sourceType;
        readonly attribute DOMString sourceId;
        void stop ();
    };

sourceType of type SourceTypeEnum, readonly

sourceId of type DOMString, readonly

stop

Return type: void

The sourceType attribute may have the following states:

    enum SourceTypeEnum {
        "none",
        "camera",
        "microphone",
        "photo-camera",
        "readonly",
        "remote"
    };

| Value | Description |
|---|---|
| none | This track has no source. This is the case when the track is in the "new" or "ended" readyState. |
| camera | A valid source type only for VideoStreamTracks. The source is a local video-producing camera source (without special photo-mode support). |
| microphone | A valid source type only for AudioStreamTracks. The source is a local audio-producing microphone source. |
| photo-camera | A valid source type only for VideoStreamTracks. The source is a local video-producing camera source which supports high-resolution photo-mode and its related state attributes. |
| readonly | The track (audio or video) is backed by a read-only source such as a file, or the track source is a local microphone or camera, but is shared so that this track cannot modify any of the source's settings. |
| remote | The track is sourced by an RTCPeerConnection. |
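
Read as a lookup, the enumeration descriptions above say which source types each derived track type may report. A minimal sketch follows; the `ALLOWED_SOURCE_TYPES` table and the `isValidSourceType` helper are hypothetical names introduced only for this example.

```javascript
// Source types each derived track type may report, as read from the
// SourceTypeEnum descriptions: "camera" and "photo-camera" are video-only,
// "microphone" is audio-only, and the remaining values apply to both.
const ALLOWED_SOURCE_TYPES = {
  video: ["none", "camera", "photo-camera", "readonly", "remote"],
  audio: ["none", "microphone", "readonly", "remote"],
};

// Hypothetical helper: is this sourceType meaningful for a track of this kind?
function isValidSourceType(kind, sourceType) {
  const allowed = ALLOWED_SOURCE_TYPES[kind];
  return allowed !== undefined && allowed.includes(sourceType);
}

console.log(isValidSourceType("video", "photo-camera")); // true
console.log(isValidSourceType("audio", "camera")); // false
console.log(isValidSourceType("audio", "readonly")); // true
```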

The MediaStreamTrack object cannot be instantiated directly. To create an instance of a MediaStreamTrack, one of its derived track types may be instantiated. These derived types are defined in this section.

It's important to note that the camera's green light doesn't come on when a new track is created; nor does the user get prompted to enable the camera/microphone. Those actions only happen after the developer has requested that a media stream containing "new" tracks be bound to a source via getUserMedia. Until that point tracks are inert.

VideoStreamTrack interface

Video tracks may be instantiated with optional media track constraints. These constraints can be later modified on the track as needed by the application, or created after-the-fact if the initial constraints are unknown to the application.

Example: VideoStreamTrack objects are instantiated in JavaScript using the new operator:

new VideoStreamTrack();

or

new VideoStreamTrack( { optional: [ { sourceId: "20983-20o198-109283-098-09812" }, { width: { min: 800, max: 1200 }}, { height: { min: 600 }}] });

    [Constructor(optional MediaTrackConstraints videoConstraints)]
    interface VideoStreamTrack : MediaStreamTrack {
        static sequence<DOMString> getSourceIds ();
        void takePhoto ();
        attribute EventHandler onphoto;
        attribute EventHandler onphotoerror;
    };

onphoto of type EventHandler

The BlobEvent returns a photo (as a Blob) in a compressed format (for example: PNG/JPEG) rather than a raw ImageData object due to the expected large, uncompressed size of the resulting photos.

onphotoerror of type EventHandler

getSourceIds, static

"camera", "photo-camera", and if allowed by the user-agent, "readonly" variants of the former two types. The video source ids returned in the list constitute those sources that the user agent can identify at the time the API is called (the list can grow/shrink over time as sources may be added or removed). As a static method, getSourceIds can be queried without instantiating any VideoStreamTrack objects or without calling getUserMedia.

Issue: This information deliberately adds to the fingerprinting surface of the UA. However, this information will not be identifiable outside the scope of this application, and could also be obtained via other round-about techniques using getUserMedia. This editor deems it worthwhile directly providing this data as it seems important for determining whether multiple devices of this type are available.

Return type: sequence<DOMString>

takePhoto

If the sourceType is not "photo-camera", this method returns immediately and does nothing. If the sourceType is "photo-camera", then this method temporarily (asynchronously) switches the source into "high resolution photo mode", applies the configured photoWidth, photoHeight, exposureMode, and isoMode state to the stream, and records/encodes an image (using a user-agent determined format) into a Blob object. Finally, a task is queued to fire a "photo" event with the resulting recorded/encoded data. In case of a failure for any reason, a "photoerror" event is queued instead and no "photo" event is dispatched.
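
The takePhoto steps can be sketched as follows. This is a simplified model, not user agent code: `track`, `capturePhoto`, `queueTask`, and `track.dispatch` are hypothetical stand-ins for internals that this proposal does not define, and the captured data is a plain string where a real implementation would produce a Blob.

```javascript
// Sketch of the takePhoto steps described above.
function takePhotoSketch(track, capturePhoto, queueTask) {
  // Only a "photo-camera" source supports photo mode; otherwise do nothing.
  if (track.sourceType !== "photo-camera") return;

  // The configured photo state is applied for the capture.
  const settings = {
    photoWidth: track.photoWidth,
    photoHeight: track.photoHeight,
    exposureMode: track.exposureMode,
    isoMode: track.isoMode,
  };

  // The capture happens asynchronously; exactly one event results from it.
  queueTask(() => {
    let event;
    try {
      event = { type: "photo", data: capturePhoto(settings) };
    } catch (error) {
      event = { type: "photoerror", error };
    }
    track.dispatch(event);
  });
}

// Usage with a fake task queue so the ordering is easy to follow.
const tasks = [];
const fired = [];
const photoTrack = {
  sourceType: "photo-camera",
  photoWidth: 4000,
  photoHeight: 3000,
  exposureMode: "auto",
  isoMode: "auto",
  dispatch: (event) => fired.push(event.type),
};
const enqueue = (task) => tasks.push(task);

takePhotoSketch(photoTrack, () => "encoded-bytes", enqueue);
takePhotoSketch({ ...photoTrack, sourceType: "camera" }, () => "unused", enqueue);
takePhotoSketch(photoTrack, () => { throw new Error("sensor busy"); }, enqueue);

tasks.forEach((task) => task());
console.log(tasks.length); // 2
console.log(fired); // [ 'photo', 'photoerror' ]
```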

Issue: We could consider providing a hint or setting for the desired photo format? There could be some alignment opportunity with the Recording proposal...

Return type: void

AudioStreamTrack interface

Example: AudioStreamTrack objects are instantiated in JavaScript using the new operator:

new AudioStreamTrack();

or

new AudioStreamTrack( { optional: [ { sourceId: "64815-wi3c89-1839dk-x82-392aa" }, { gain: 0.5 }] });

    [Constructor]
    interface AudioStreamTrack : MediaStreamTrack {
        static sequence<DOMString> getSourceIds ();
    };

getSourceIds, static

getSourceIds on the VideoStreamTrack object. Note that the list of source ids for AudioStreamTrack will be populated only with local sources whose sourceType is "microphone", and if allowed by the user-agent, "readonly" microphone variants.

Return type: sequence<DOMString>

Source states (the current states of the source media flowing through a track) are observable by the attributes defined in this section. They are divided by track type: video and audio.

Note that the source states defined in this section do not include sourceType and sourceId merely because they were already defined earlier. These two attributes are also considered states, and have appropriate visibility as capabilities and constraints.

This table summarizes the expected values of the video source state attributes for each of the sourceTypes defined earlier:

| State attribute / sourceType | "none" | "camera" | "photo-camera" | "readonly" | "remote" |
|---|---|---|---|---|---|
| sourceType | current SourceTypeEnum value | current SourceTypeEnum value | current SourceTypeEnum value | current SourceTypeEnum value | current SourceTypeEnum value |
| sourceId | null | current DOMString value | current DOMString value | current DOMString value | null |
| width | null | current unsigned long value | current unsigned long value | current unsigned long value | current unsigned long value |
| height | null | current unsigned long value | current unsigned long value | current unsigned long value | current unsigned long value |
| frameRate | null | current float value | current float value | current float value | current float value |
| facingMode | null | current VideoFacingModeEnum value | current VideoFacingModeEnum value | current VideoFacingModeEnum value | null |
| zoom | null | current float value | current float value | current float value | null |
| focusMode | null | current VideoFocusModeEnum value | current VideoFocusModeEnum value | current VideoFocusModeEnum value | null |
| fillLightMode | null | current VideoFillLightModeEnum value | current VideoFillLightModeEnum value | current VideoFillLightModeEnum value | null |
| whiteBalanceMode | null | current VideoWhiteBalanceModeEnum value | current VideoWhiteBalanceModeEnum value | current VideoWhiteBalanceModeEnum value | null |
| brightness | null | current unsigned long value | current unsigned long value | current unsigned long value | null |
| contrast | null | current unsigned long value | current unsigned long value | current unsigned long value | null |
| saturation | null | current unsigned long value | current unsigned long value | current unsigned long value | null |
| sharpness | null | current unsigned long value | current unsigned long value | current unsigned long value | null |
| photoWidth | null | null | configured unsigned long value | configured unsigned long value (if readonly source is a photo-camera), null otherwise. | null |
| photoHeight | null | null | configured unsigned long value | configured unsigned long value (if readonly source is a photo-camera), null otherwise. | null |
| exposureMode | null | null | configured PhotoExposureModeEnum value | configured PhotoExposureModeEnum value (if readonly source is a photo-camera), null otherwise. | null |
| isoMode | null | null | configured PhotoISOModeEnum value | configured PhotoISOModeEnum value (if readonly source is a photo-camera), null otherwise. | null |

    partial interface VideoStreamTrack {
        readonly attribute unsigned long? width;
        readonly attribute unsigned long? height;
        readonly attribute float? frameRate;
        readonly attribute VideoFacingModeEnum? facingMode;
        readonly attribute float? zoom;
        readonly attribute VideoFocusModeEnum? focusMode;
        readonly attribute VideoFillLightModeEnum? fillLightMode;
        readonly attribute VideoWhiteBalanceModeEnum? whiteBalanceMode;
        readonly attribute unsigned long? brightness;
        readonly attribute unsigned long? contrast;
        readonly attribute unsigned long? saturation;
        readonly attribute unsigned long? sharpness;
        readonly attribute unsigned long? photoWidth;
        readonly attribute unsigned long? photoHeight;
        readonly attribute PhotoExposureModeEnum? exposureMode;
        readonly attribute PhotoISOModeEnum? isoMode;
    };

width of type unsigned long, readonly, nullable

height of type unsigned long, readonly, nullable

frameRate of type float, readonly, nullable

If the sourceType is a "camera" or "photo-camera", or a "readonly" variant of those, and the source does not provide a frameRate (or the frameRate cannot be determined from the source stream), then this attribute must be the user agent's vsync display rate.

facingMode of type VideoFacingModeEnum, readonly, nullable

zoom of type float, readonly, nullable

If the sourceType is a "camera" or "photo-camera", or a "readonly" variant of those, and the source does not support changing the zoom factor, then this attribute must always return the value 1.0.

focusMode of type VideoFocusModeEnum, readonly, nullable

fillLightMode of type VideoFillLightModeEnum, readonly, nullable

whiteBalanceMode of type VideoWhiteBalanceModeEnum, readonly, nullable

brightness of type unsigned long, readonly, nullable

If the sourceType is a "camera" or "photo-camera", or a "readonly" variant of those, and the source does not provide brightness level information, then this attribute must always return the value 50.

contrast of type unsigned long, readonly, nullable

If the sourceType is a "camera" or "photo-camera", or a "readonly" variant of those, and the source does not provide contrast level information, then this attribute must always return the value 50.

saturation of type unsigned long, readonly, nullable

If the sourceType is a "camera" or "photo-camera", or a "readonly" variant of those, and the source does not provide saturation level information, then this attribute must always return the value 50.

sharpness of type unsigned long, readonly, nullable

If the sourceType is a "camera" or "photo-camera", or a "readonly" variant of those, and the source does not provide sharpness level information, then this attribute must always return the value 50.

photoWidth of type unsigned long, readonly, nullable

"photo-camera" (or "readonly" variant) high-resolution sensor.

photoHeight of type unsigned long, readonly, nullable

"photo-camera" (or "readonly" variant) high-resolution sensor.

exposureMode of type PhotoExposureModeEnum, readonly, nullable

"photo-camera" (or "readonly" variant) light meter.

isoMode of type PhotoISOModeEnum, readonly, nullable

"photo-camera" (or "readonly" variant) film-equivalent speed (ISO) setting.

VideoFacingModeEnum enumeration

    enum VideoFacingModeEnum {
        "notavailable",
        "user",
        "environment"
    };

| Value | Description |
|---|---|
| notavailable | The relative directionality of the source cannot be determined by the user agent based on the hardware. |
| user | The source is facing toward the user (a self-view camera). |
| environment | The source is facing away from the user (viewing the environment). |

VideoFocusModeEnum enumeration

    enum VideoFocusModeEnum {
        "notavailable",
        "auto",
        "manual"
    };

| Value | Description |
|---|---|
| notavailable | This source does not have an option to change focus modes. |
| auto | The source auto-focuses. |
| manual | The source must be manually focused. |

VideoFillLightModeEnum enumeration

    enum VideoFillLightModeEnum {
        "notavailable",
        "auto",
        "off",
        "flash",
        "on"
    };

| Value | Description |
|---|---|
| notavailable | This source does not have an option to change fill light modes (e.g., the camera does not have a flash). |
| auto | The video device's fill light will be enabled when required (typically low light conditions). Otherwise it will be off. Note that auto does not guarantee that a flash will fire when takePhoto is called. Use flash to guarantee firing of the flash for the takePhoto API. auto is the initial value. |
| off | The source's fill light and/or flash will not be used. |
| flash | If the track's sourceType is "photo-camera", this value will always cause the flash to fire for the takePhoto API. Otherwise, for other supporting sourceTypes, this value is equivalent to auto. |
| on | The source's fill light will be turned on (and remain on) while the source is in either "armed" or "streaming" mode. |
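
The interaction between "flash", "auto", and the track's sourceType can be summarized in two small functions. Both helper names are hypothetical, and the sketch covers only the rules stated in the enumeration descriptions above.

```javascript
// "flash" only has its special meaning on a "photo-camera" source;
// on any other supporting source it behaves like "auto".
function effectiveFillLightMode(mode, sourceType) {
  if (mode === "flash" && sourceType !== "photo-camera") return "auto";
  return mode;
}

// Only "flash" on a "photo-camera" guarantees that the flash fires for
// takePhoto; "auto" may or may not fire it.
function flashGuaranteedForPhoto(mode, sourceType) {
  return mode === "flash" && sourceType === "photo-camera";
}

console.log(effectiveFillLightMode("flash", "camera")); // auto
console.log(effectiveFillLightMode("flash", "photo-camera")); // flash
console.log(flashGuaranteedForPhoto("auto", "photo-camera")); // false
console.log(flashGuaranteedForPhoto("flash", "photo-camera")); // true
```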

VideoWhiteBalanceModeEnum enumeration

    enum VideoWhiteBalanceModeEnum {
        "notavailable",
        "auto",
        "incandescent",
        "cool-fluorescent",
        "warm-fluorescent",
        "daylight",
        "cloudy",
        "twilight",
        "shade"
    };

| Value | Description |
|---|---|
| notavailable | The white-balance information is not available from this source. |
| auto | The white-balance is configured to automatically adjust. |
| incandescent | Adjust the white-balance between 2500 and 3500 Kelvin |
| cool-fluorescent | Adjust the white-balance between 4000 and 5000 Kelvin |
| warm-fluorescent | Adjust the white-balance between 5000 and 6000 Kelvin |
| daylight | Adjust the white-balance between 5000 and 6500 Kelvin |
| cloudy | Adjust the white-balance between 6500 and 8000 Kelvin |
| twilight | Adjust the white-balance between 8000 and 9000 Kelvin |
| shade | Adjust the white-balance between 9000 and 10,000 Kelvin |

PhotoExposureModeEnum enumeration

    enum PhotoExposureModeEnum {
        "notavailable",
        "auto",
        "frame-average",
        "center-weighted",
        "spot-metering"
    };

| Value | Description |
|---|---|
| notavailable | The exposure mode is not known or not available on this source. |
| auto | The exposure mode is automatically configured/adjusted at the source's discretion. |
| frame-average | The light sensor should average the light information from the entire scene. |
| center-weighted | The light sensor should bias its sensitivity toward the center of the viewfinder. |
| spot-metering | The light sensor should only consider a centered spot area for exposure calculations. |

PhotoISOModeEnum enumeration

    enum PhotoISOModeEnum {
        "notavailable",
        "auto",
        "100",
        "200",
        "400",
        "800",
        "1250"
    };

| Value | Description |
|---|---|
| notavailable | The ISO value is not known or not available on this source. |
| auto | The ISO value is automatically selected/adjusted at the source's discretion. |
| 100 | An ASA rating of 100 |
| 200 | An ASA rating of 200 |
| 400 | An ASA rating of 400 |
| 800 | An ASA rating of 800 |
| 1250 | An ASA rating of 1250 |

This table summarizes the expected values of the audio source state attributes for each of the sourceTypes defined earlier:

| State attribute / sourceType | "none" | "microphone" | "readonly" | "remote" |
|---|---|---|---|---|
| sourceType | current SourceTypeEnum value | current SourceTypeEnum value | current SourceTypeEnum value | current SourceTypeEnum value |
| sourceId | null | current DOMString value | current DOMString value | null |
| volume | null | current unsigned long value | current unsigned long value | current unsigned long value |
| gain | null | current float value | current float value | null |

    partial interface AudioStreamTrack {
        readonly attribute unsigned long? volume;
        readonly attribute float? gain;
    };

volume of type unsigned long, readonly, nullable

gain of type float, readonly, nullable

If the sourceType is a "microphone" or a "readonly" microphone, and the source does not provide gain information, then this attribute must always return the value 1.0.
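
The fallback values given in the attribute descriptions (the display vsync rate for frameRate, 1.0 for zoom, 50 for brightness, contrast, saturation, and sharpness, and 1.0 for gain) can be sketched as defaults layered under whatever the source actually reports. The function names and the `reported` object are assumptions of this example, and the sketch applies only to local camera or microphone sources and their "readonly" variants.

```javascript
// Fallback values stated in the attribute descriptions.
const VIDEO_FALLBACKS = {
  zoom: 1.0,
  brightness: 50,
  contrast: 50,
  saturation: 50,
  sharpness: 50,
};
const AUDIO_FALLBACKS = { gain: 1.0 };

// Copy every value the source actually reports over the fallbacks.
function applyReported(fallbacks, reported) {
  const state = { ...fallbacks };
  for (const [name, value] of Object.entries(reported)) {
    if (value !== undefined && value !== null) state[name] = value;
  }
  return state;
}

// frameRate falls back to the user agent's vsync display rate.
function resolveVideoState(reported, displayVsyncRate) {
  return applyReported({ ...VIDEO_FALLBACKS, frameRate: displayVsyncRate }, reported);
}

function resolveAudioState(reported) {
  return applyReported(AUDIO_FALLBACKS, reported);
}

const video = resolveVideoState({ width: 640, height: 480, zoom: 2.0 }, 60);
const audio = resolveAudioState({ volume: 80 });
console.log(video.zoom, video.brightness, video.frameRate); // 2 50 60
console.log(audio.gain, audio.volume); // 1 80
```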

As the source adjusts its state (for any reason), applications may observe the related state changes. The following extensions to the MediaStreamTrack provide an alternative to polling the individual state attributes defined on the video and audio track-types.

The following event handler is added to the generic MediaStreamTrack interface.

    partial interface MediaStreamTrack {
        attribute EventHandler onstatechanged;
    };

onstatechanged of type EventHandler

The user agent is encouraged to coalesce state changes into as few "statechanged" events as possible (when multiple state changes occur within a reasonably short amount of time to each other).

The "started" event described earlier is a convenience event because a "statechanged" event will also be fired when the sourceType changes from "none" to something else. The "started" event must fire before the "statechanged" event fires.
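
The coalescing of state changes that user agents are encouraged to perform might look like the sketch below, where several changes noted before the next task collapse into a single "statechanged" event carrying a list of state names. The `schedule` and `dispatch` hooks and the `createStateChangeCoalescer` name are hypothetical; how a real user agent batches changes is left open by this proposal.

```javascript
// Collects changed state names and flushes them as one "statechanged" event.
function createStateChangeCoalescer(schedule, dispatch) {
  let pending = new Set(); // de-duplicates repeated changes to one state
  let scheduled = false;

  return function noteChange(stateName) {
    pending.add(stateName);
    if (scheduled) return; // a flush is already queued
    scheduled = true;
    schedule(() => {
      const states = Array.from(pending);
      pending = new Set();
      scheduled = false;
      dispatch({ type: "statechanged", states });
    });
  };
}

// Usage with a fake task queue in place of the event loop.
const queue = [];
const events = [];
const noteChange = createStateChangeCoalescer(
  (task) => queue.push(task),
  (event) => events.push(event)
);

noteChange("width");
noteChange("height");
noteChange("width");
queue.forEach((task) => task());

console.log(events.length); // 1
console.log(events[0].states); // [ 'width', 'height' ]
```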

The following define the MediaStreamTrackStateEvent object and related initializer.

    [Constructor(DOMString type, optional MediaStreamTrackStateEventInit eventInitDict)]
    interface MediaStreamTrackStateEvent : Event {
        readonly attribute DOMString[] states;
    };

states of type array of DOMString, readonly

The initializer for the above-defined event type:

    dictionary MediaStreamTrackStateEventInit : EventInit {
        sequence<DOMString> states;
    };

MediaStreamTrackStateEventInit Members

states of type sequence<DOMString>

The following settings have been proposed, but are not included in this version to keep the initial set of settings scoped to those that:

Each setting also includes a brief explanatory rationale for why it's not included:

- horizontalAspectRatio - easily calculated based on width/height in the dimension values
- verticalAspectRatio - see horizontalAspectRatio explanation
- orientation - can be easily calculated based on the width/height values and the current rotation
- aperatureSize - while more common on digital cameras, not particularly common on webcams (major use-case for this feature)
- shutterSpeed - see aperatureSize explanation
- denoise - may require specification of the algorithm processing or related image processing filter required to implement.
- effects - sounds like a v2 or independent feature (depending on the effect).
- faceDetection - sounds like a v2 feature. Can also be done using post-processing techniques (though perhaps not as fast...)
- antiShake - sounds like a v2 feature.
- geoTagging - this can be independently associated with a recorded photo/video/audio clip using the Geolocation API. Automatically hooking up Geolocation to Media Capture sounds like an exercise for v2 given the possible complications.
- highDynamicRange - not sure how this can be specified, or if this is just a v2 feature.
- skintoneEnhancement - not a particularly common setting.
- shutterSound - Can be accomplished by syncing custom audio playback via the <audio> tag if desired. By default, there will be no sound issued.
- redEyeReduction - photo-specific setting. (Could be considered if photo-specific settings are introduced.)
- sceneMode - while more common on digital cameras, not particularly common on webcams (major use-case for this feature)
- antiFlicker - not a particularly common setting.
- zeroShutterLag - this seems more like a hope than a setting. I'd rather just have implementations make the shutter snap as quickly as possible after takePhoto, rather than requiring an opt-in/opt-out for this setting.
- rotation - rotation can be provided at the sink level if desired (CSS transforms on a video element).
- mirror - mirroring can be provided at the sink level if desired (CSS transforms on a video element).
- bitRate - this is more directly relevant to peer connection transport objects than track-level information.

The following settings may be included by working group decision:

This section describes APIs for retrieving the capabilities of a given source. The return value of these APIs is contingent on the track's sourceType value as summarized in the table below.

For each source state attribute defined (in the previous section), there is a corresponding capability associated with it. Capabilities are provided as either a min/max range or a list of enumerated values, but not both. Min/max capabilities are always provided for source states that are not enumerated types. Listed capabilities are always provided for source states corresponding to enumerated types.

| sourceType | "none" | "camera"/ "photo-camera"/ "microphone" | "readonly" | "remote" |
|---|---|---|---|---|
| capabilities() | null | (AllVideoCapabilities or AllAudioCapabilities) | (AllVideoCapabilities or AllAudioCapabilities) | null |
| getCapability() | null | (CapabilityRange or CapabilityList) | (CapabilityRange or CapabilityList) | null |

partial interface MediaStreamTrack {

(CapabilityRange or CapabilityList) getCapability (DOMString stateName);

(AllVideoCapabilities or AllAudioCapabilities) capabilities ();

};

getCapability

If a capability is requested that does not have a corresponding state on the track-type, then a null value is returned (e.g., a VideoStreamTrack requests the "gain" capability. Since "gain" is not a state supported by video stream tracks, this API will return null).

Given that implementations of various hardware may not exactly map to the same range, an implementation should make a reasonable attempt to translate and scale the hardware's setting onto the mapping provided by this specification. If this is not possible due to the user agent's inability to retrieve a given capability from a source, then for CapabilityRange-typed capabilities, the min and max fields will not be present on the returned dictionary, and the supported field will be false. For CapabilityList-typed capabilities, a suitable "notavailable" value will be the sole capability in the list.

An example of the user agent providing an alternative mapping: if a source supports a hypothetical fluxCapacitance state whose type is a CapabilityRange, and the state is defined in this specification to be the range from -10 (min) to 10 (max), but the source's (hardware setting) for fluxCapacitance only supports values of "off" "medium" and "full", then the user agent should map the range value of -10 to "off", 10 should map to "full", and 0 should map to "medium". Constraints imposing a strict value of 3 will cause the user agent to attempt to set the value of "medium" on the hardware, and return a fluxCapacitance state of 0, the closest supported setting. No error event is raised in this scenario.
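The mapping in this example can be sketched in script. This is only an illustration: the helper `mapToHardwareSetting` and the hand-written table are hypothetical, nothing here is a proposed API, and a user agent would perform this translation internally. The sketch assumes "closest supported setting" means the smallest absolute distance within the specification-defined range.

```javascript
// Hypothetical table: each discrete hardware setting is pinned to one
// value in the specification-defined range (-10 to 10).
var fluxCapacitanceMap = [
    { state: -10, hardware: "off" },
    { state: 0, hardware: "medium" },
    { state: 10, hardware: "full" }
];

// Returns the table entry whose state value is closest to the requested value.
function mapToHardwareSetting(map, requested) {
    var best = map[0];
    map.forEach(function (entry) {
        if (Math.abs(entry.state - requested) < Math.abs(best.state - requested))
            best = entry;
    });
    return best;
}

// A strict constraint value of 3 selects "medium", reported as state 0.
var result = mapToHardwareSetting(fluxCapacitanceMap, 3);
```

Here `result.hardware` is the value applied to the device and `result.state` is the value the track would report afterwards.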

CapabilityList objects should order their enumerated values from minimum to maximum where it makes sense, or in the order defined by the enumerated type where applicable.

See the AllVideoCapabilities and AllAudioCapabilities dictionaries for details on the expected types for the various supported state names.
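Because getCapability() can return null, a CapabilityList, or a CapabilityRange, callers may want to tell the cases apart before using the result. The following is a minimal sketch, assuming a hypothetical helper named `describeCapability` and plain objects standing in for the values a user agent would return.

```javascript
// Classifies a value of the kind returned by getCapability().
function describeCapability(capability) {
    if (capability === null)
        return "not applicable"; // no such state on this track-type
    if (Array.isArray(capability)) {
        // CapabilityList: an array of DOMString values
        if (capability.length == 1 && capability[0] == "notavailable")
            return "unavailable";
        return "list: " + capability.join(", ");
    }
    // CapabilityRange: min and max are absent when supported is false
    if (!capability.supported)
        return "unsupported";
    return "range: " + capability.min + " to " + capability.max;
}
```

With a real track, the argument would be the result of a call such as `videoTrack.getCapability("zoom")`.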

| Parameter | Type | Nullable | Optional | Description |

|---|---|---|---|---|

| stateName | DOMString | ? | ? | The name of the source state for which the range of expected values should be returned. |

Return type: (CapabilityRange or CapabilityList)

capabilities

If the track type is VideoStreamTrack, the AllVideoCapabilities dictionary is returned. If the track type is AudioStreamTrack, the AllAudioCapabilities dictionary is returned.

The dictionaries are populated as if each state were requested individually using getCapability(), and the results of that API are assigned as the value of each stateName in the dictionary. Notably, the returned values

Return type: (AllVideoCapabilities or AllAudioCapabilities)

CapabilityRange dictionary

dictionary CapabilityRange {

any max;

any min;

boolean supported;

};

CapabilityRange Members

max of type any

The type of this value is specific to the capability as noted in the table for getCapability. If the related capability is not supported by the source, then this field will not be provided by the user agent (it will be undefined).

min of type any

The type of this value is specific to the capability as noted in the table for getCapability. If the related capability is not supported by the source, then this field will not be provided by the user agent (it will be undefined).

supported of type boolean

true if the capability is supported, false otherwise.

CapabilityList array

Capability Lists are just an array of supported DOMString values from the possible superset of values described by each state's enumerated type.

typedef sequence<DOMString> CapabilityList;

AllVideoCapabilities dictionary

dictionary AllVideoCapabilities {

CapabilityList? sourceType;

CapabilityList? sourceId;

CapabilityRange? width;

CapabilityRange? height;

CapabilityRange? frameRate;

CapabilityList? facingMode;

CapabilityRange? zoom;

CapabilityList? focusMode;

CapabilityList? fillLightMode;

CapabilityList? whiteBalanceMode;

CapabilityRange? brightness;

CapabilityRange? contrast;

CapabilityRange? saturation;

CapabilityRange? sharpness;

CapabilityRange? photoWidth;

CapabilityRange? photoHeight;

CapabilityList? exposureMode;

CapabilityList? isoMode;

};

AllVideoCapabilities Members

sourceType of type CapabilityList, nullable

SourceTypeEnum) on the current source.

sourceId of type CapabilityList, nullable

width of type CapabilityRange, nullable

height of type CapabilityRange, nullable

frameRate of type CapabilityRange, nullable

facingMode of type CapabilityList, nullable

zoom of type CapabilityRange, nullable

focusMode of type CapabilityList, nullable

fillLightMode of type CapabilityList, nullable

whiteBalanceMode of type CapabilityList, nullable

brightness of type CapabilityRange, nullable

contrast of type CapabilityRange, nullable

saturation of type CapabilityRange, nullable

sharpness of type CapabilityRange, nullable

photoWidth of type CapabilityRange, nullable

photoHeight of type CapabilityRange, nullable

exposureMode of type CapabilityList, nullable

isoMode of type CapabilityList, nullable

AllAudioCapabilities dictionary

dictionary AllAudioCapabilities {

CapabilityList? sourceType;

CapabilityList? sourceId;

CapabilityRange? volume;

CapabilityRange? gain;

};

AllAudioCapabilities Members

sourceType of type CapabilityList, nullable

SourceTypeEnum) on the current source.

sourceId of type CapabilityList, nullable

volume of type CapabilityRange, nullable

gain of type CapabilityRange, nullable

This section contains an explanation of how constraint manipulation is expected to work with sources under various conditions. It also defines APIs for working with the set of applied constraints on a track. Finally, it defines a set of constraint names matching the previously-defined state attributes and capabilities.

Browsers provide a media pipeline from sources to sinks. In a browser, sinks are the <img>, <video> and <audio> tags. Traditional sources include camera, microphones, streamed content, files and web resources. The media produced by these sources typically does not change over time - these sources can be considered to be static.

The sinks that display these sources to the user (the actual tags themselves) have a variety of controls for manipulating the source content. For example, an <img> tag scales down a huge source image of 1600x1200 pixels to fit in a rectangle defined with width="400" and height="300".

The getUserMedia API adds dynamic sources such as microphones and cameras - the characteristics of these sources can change in response to application needs. These sources can be considered to be dynamic in nature. A <video> element that displays media from a dynamic source can either perform scaling or it can feed back information along the media pipeline and have the source produce content more suitable for display.

Note: This sort of feedback loop is obviously just enabling an "optimization", but it's a non-trivial gain. This optimization can save battery, allow for less network congestion, etc...

This proposal assumes that MediaStream sinks (such as <video>, <audio>, and even RTCPeerConnection) will continue to have mechanisms to further transform the source stream beyond that which the states, capabilities, and constraints described in this proposal offer. (The sink transformation options, including those of RTCPeerConnection are outside the scope of this proposal.)

The act of changing or applying a track constraint may affect the state of all tracks sharing that source and consequently all down-level sinks that are using that source. Many sinks may be able to take these changes in stride, such as the <video> element or RTCPeerConnection. Others like the Recorder API may fail as a result of a source state change.

The RTCPeerConnection is an interesting object because it acts simultaneously as both a sink and a source for over-the-network streams. As a sink, it has source transformational capabilities (e.g., lowering bit-rates, scaling-up or down resolutions, adjusting frame-rates), and as a source it could have its own settings changed by a track source (though in this proposal sourceTypes of type "remote" do not consider the current constraints applied to a track).

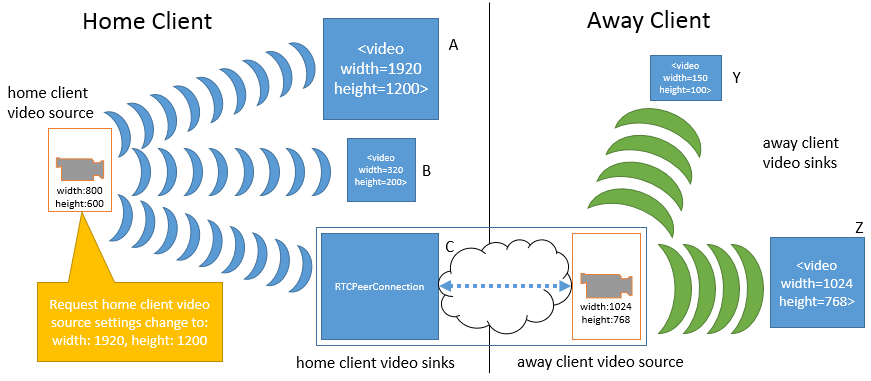

To illustrate how changes to a given source impact various sinks, consider the following example. This example only uses width and height, but the same principles apply to any of the states exposed in this proposal. In the first figure a home client has obtained a video source from its local video camera. The source's width and height state are 800 pixels by 600 pixels, respectively. Three MediaStream objects on the home client contain tracks that use this same sourceId. The three media streams are connected to three different sinks, a <video> element (A), another <video> element (B), and a peer connection (C). The peer connection is streaming the source video to an away client. On the away client there are two media streams with tracks that use the peer connection as a source. These two media streams are connected to two <video> element sinks (Y and Z).

Note that at this moment, all of the sinks on the home client must apply a transformation to the original source's provided state dimensions. A is scaling the video up (resulting in loss of quality), B is scaling the video down, and C is also scaling the video up slightly for sending over the network. On the away client, sink Y is scaling the video way down, while sink Z is not applying any scaling.

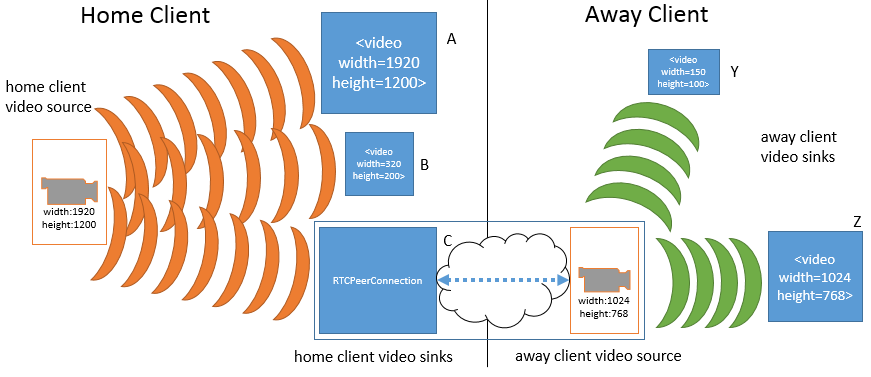

Using the constraint APIs defined in the next section, the home client's video source is changed to a higher resolution (1920 by 1200 pixels).

Note that the source change immediately affects all of the sinks on the home client, but does not impact any of the sinks (or sources) on the away client. With the increase in the home client source video's dimensions, sink A no longer has to perform any scaling, while sink B must scale down even further than before. Sink C (the peer connection) must now scale down the video in order to keep the transmission constant to the away client.

While not shown, an equally valid settings change request could be made of the away client video source (the peer connection on the away client's side). This would not only impact sink Y and Z in the same manner as before, but would also cause re-negotiation with the peer connection on the home client in order to alter the transformation that it is applying to the home client's video source. Such a change would not change anything related to sink A or B or the home client's video source.

Note: This proposal does not define a mechanism by which a change to the away client's video source could automatically trigger a change to the home client's video source. Implementations may choose to make such source-to-sink optimizations as long as they only do so within the constraints established by the application, as the next example describes.

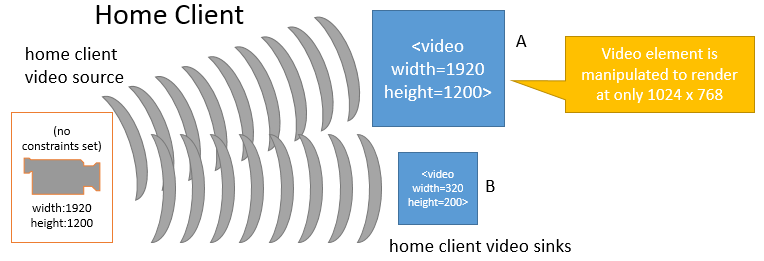

It is fairly obvious that changes to a given source will impact sink consumers. However, in some situations changes to a given sink may also be cause for implementations to adjust the characteristics of a source's stream. This is illustrated in the following figures. In the first figure below, the home client's video source is sending a video stream sized at 1920 by 1200 pixels. The video source is also unconstrained, such that the exact source dimensions are flexible as far as the application is concerned. Two MediaStream objects contain tracks with the same sourceId, and those MediaStreams are connected to two different <video> element sinks A and B. Sink A has been sized to width="1920" and height="1200" and is displaying the source's video content without any transformations. Sink B has been sized smaller and as a result, is scaling the video down to fit its rectangle of 320 pixels across by 200 pixels down.

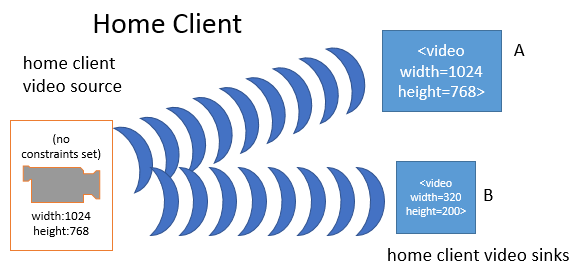

When the application changes sink A to a smaller dimension (from 1920 to 1024 pixels wide and from 1200 to 768 pixels tall), the browser's media pipeline may recognize that none of its sinks require the higher source resolution, and needless work is being done both on the part of the source and on sink A. In such a case and without any other constraints forcing the source to continue producing the higher resolution video, the media pipeline may change the source resolution:

In the above figure, the home client's video source resolution was changed to the max(sinkA, sinkB) in order to optimize playback. While not shown above, the same behavior could apply to peer connections and other sinks.
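The max(sinkA, sinkB) selection can be sketched as follows. This is an assumption-laden illustration, not proposed API: the helper `smallestSufficientResolution` is hypothetical, sinks are modeled as plain objects, and the maximum is taken per dimension, which is one reasonable reading of max(sinkA, sinkB).

```javascript
// Returns the smallest source resolution that still satisfies every sink,
// that is, the largest width and the largest height requested by any sink.
function smallestSufficientResolution(sinks) {
    var result = { width: 0, height: 0 };
    sinks.forEach(function (sink) {
        result.width = Math.max(result.width, sink.width);
        result.height = Math.max(result.height, sink.height);
    });
    return result;
}

// Sink sizes from the example above, after sink A has been resized.
var sinkA = { width: 1024, height: 768 };
var sinkB = { width: 320, height: 200 };
var target = smallestSufficientResolution([sinkA, sinkB]);
```

With these inputs the source would only need to produce 1024 by 768 pixels, so sink A displays the video unscaled and sink B continues to scale down.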

Constraints are independent of sources. However, depending on the sourceType the track's constraints may or may not actually be considered by the user agent. The following table summarizes the expectations around track constraints given a sourceType.

| sourceType | "none" | "camera"/ "photo-camera"/ "microphone" | "readonly" | "remote" |
|---|---|---|---|---|
| Constraints apply to sourceType? | No | Yes | No | No. Issue 5: This may be too cut-and-dry. Maybe some of the constraints should apply? |

Whether MediaTrackConstraints were provided at track initialization time or need to be established later at runtime, the APIs defined below allow the retrieval and manipulation of the constraints currently established on a track.

Each track maintains an internal version of the MediaTrackConstraints structure, namely a mandatory set of constraints (no duplicates), and an optional ordered list of individual constraint objects (may contain duplicates). The internal stored constraint structure is only exposed to the application using the existing MediaTrackConstraints, MediaTrackConstraintSet, MediaTrackConstraint, and similarly-derived-type dictionary objects.

When track constraints change, a user agent must queue a task to evaluate those changes when the task queue is next serviced. Similarly, if the sourceType changes, then the user agent should perform the same actions to re-evaluate the constraints of each track affected by that source change.

partial interface MediaStreamTrack {

any getConstraint (DOMString constraintName, optional boolean mandatory = false);

void setConstraint (DOMString constraintName, any constraintValue, optional boolean mandatory = false);

MediaTrackConstraints? constraints ();

void applyConstraints (MediaTrackConstraints constraints);

void prependConstraint (DOMString constraintName, any constraintValue);

void appendConstraint (DOMString constraintName, any constraintValue);

attribute EventHandler onoverconstrained;

};

onoverconstrained of type EventHandler

This event may also fire when takePhoto is called and the source cannot record/encode an image due to over-constrained or conflicting constraints of those uniquely related to sourceTypes of type "photo-camera".

Due to being over-constrained, the user agent must transition the source to the "armed" mode, which may result in also dispatching one or more "muted" events to affected tracks.

The affected track(s) will remain un-usable (in the "muted" readyState) until the application adjusts the constraints to accommodate the source's capabilities.

The "overconstrained" event is a simple event of type Event; it carries no information about which constraints caused the source to be over-constrained (the application has all the necessary APIs to figure it out).

getConstraint

Retrieves a specific named constraint value from the track. The named constraints are the same names used for the capabilities API, and also are the same names used for the source's state attributes.

Returns one of the following types:

Each MediaTrackConstraint result in the list will contain a key which matches the requested constraintName parameter, and whose value will either be a primitive value, or a MinMaxConstraint object.

The returned list will be ordered from most important-to-satisfy at index 0, to the least-important-to-satisfy optional constraint.

Example: Given a track with an internal constraint structure:

{

mandatory: {

width: { min: 640 },

height: { min: 480 }

},

optional: [

{ width: 650 },

{ width: { min: 650, max: 800 }},

{ frameRate: 60 },

{ fillLightMode: "off" },

{ facingMode: "user" }

]

}

and a request for getConstraint("width"), the following list would be returned:

[

{ width: 650 },

{ width: { min: 650, max: 800 }}

]
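The lookup in this example can be sketched against a plain-object stand-in for the track's internal constraint structure. The helper `lookupConstraint` is hypothetical and is not part of the proposed API. Returning null for a name missing from the mandatory set is an assumption, since the text above does not say what is returned in that case.

```javascript
// Plain-object stand-in for a track's internal constraint structure.
var internalConstraints = {
    mandatory: { width: { min: 640 }, height: { min: 480 } },
    optional: [
        { width: 650 },
        { width: { min: 650, max: 800 } },
        { frameRate: 60 },
        { fillLightMode: "off" },
        { facingMode: "user" }
    ]
};

// Hypothetical helper mirroring the described getConstraint() lookup.
function lookupConstraint(constraints, constraintName, mandatory) {
    if (mandatory) {
        var set = constraints.mandatory || {};
        // Assumption: null when the name is not in the mandatory set.
        return (constraintName in set) ? set[constraintName] : null;
    }
    // Optional constraints keep their priority order (index 0 first).
    return (constraints.optional || []).filter(function (constraint) {
        return constraintName in constraint;
    });
}

var widths = lookupConstraint(internalConstraints, "width", false);
var mandatoryWidth = lookupConstraint(internalConstraints, "width", true);
```

Here `widths` holds the two optional width constraints in priority order, and `mandatoryWidth` is the min/max object from the mandatory set.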

MediaTrackConstraintSet associated with this track, and the value of the constraint is a min/max range object.

MediaTrackConstraintSet associated with this track, and the value of the constraint is a primitive value (DOMString, unsigned long, float, etc.).

| Parameter | Type | Nullable | Optional | Description |

|---|---|---|---|---|

| constraintName | DOMString | ? | ? | The name of the setting for which the current value of that setting should be returned |

| mandatory | boolean = false | ? | ? | true to indicate that the constraint should be looked up in the mandatory set of constraints, otherwise, the constraintName should be retrieved from the optional list of constraints. |

Return type: any

setConstraint

This method updates the value of a same-named existing constraint (if found) in either the mandatory or optional list, and otherwise sets the new constraint.

This method searches the list of optional constraints from index 0 (highest priority) to the end of the list (lowest priority) looking for matching constraints. Therefore, for multiple same-named optional constraints, this method will only update the value of the highest-priority matching constraint.

If the mandatory flag is false and the constraint is not found in the list of optional constraints, then a new optional constraint is created and appended to the end of the list (thus having lowest priority).

Note: This behavior allows applications to iteratively call setConstraint and have their constraints added in the order specified in the source.
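The update-or-append behavior can be sketched against a plain-object stand-in for the internal constraint structure. The helper `storeConstraint` is hypothetical and only illustrates the search order described above. It is not the proposed API and it omits the queued re-evaluation of constraints.

```javascript
// Hypothetical helper mirroring the described setConstraint() behavior.
function storeConstraint(constraints, constraintName, constraintValue, mandatory) {
    if (mandatory) {
        // The mandatory set has no duplicates: update or create the entry.
        constraints.mandatory = constraints.mandatory || {};
        constraints.mandatory[constraintName] = constraintValue;
        return;
    }
    constraints.optional = constraints.optional || [];
    // Search from index 0 (highest priority) for a same-named constraint.
    for (var i = 0; i < constraints.optional.length; i++) {
        if (constraintName in constraints.optional[i]) {
            constraints.optional[i][constraintName] = constraintValue;
            return; // only the highest-priority match is updated
        }
    }
    // Not found: append as the lowest-priority optional constraint.
    var added = {};
    added[constraintName] = constraintValue;
    constraints.optional.push(added);
}

var stored = { optional: [{ width: 650 }, { width: { min: 650, max: 800 } }] };
storeConstraint(stored, "width", 1280);                // updates index 0 only
storeConstraint(stored, "frameRate", 30);              // appended at the end
storeConstraint(stored, "height", { min: 480 }, true); // goes to the mandatory set
```

After these calls the second width constraint is unchanged, frameRate sits at the end of the optional list, and height appears only in the mandatory set.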

| Parameter | Type | Nullable | Optional | Description |

|---|---|---|---|---|

| constraintName | DOMString | ? | ? | The name of the constraint to set. |

| constraintValue | any | ? | ? | Either a primitive value (float/DOMString/etc), or a MinMaxConstraint dictionary. |

| mandatory | boolean = false | ? | ? | A flag indicating whether this constraint should be applied to the optional or mandatory constraints. |

Return type: void

constraints

If no mandatory constraints have been defined, the mandatory field will not be present (it will be undefined). If no optional constraints have been defined, the optional field will not be present (it will be undefined). If neither optional nor mandatory constraints have been created, the value null is returned.

Return type: MediaTrackConstraints, nullable

applyConstraints

This API will replace all existing constraints with the provided constraints (if any exist). Otherwise, it will apply the newly provided constraints to the track.

| Parameter | Type | Nullable | Optional | Description |

|---|---|---|---|---|

| constraints | MediaTrackConstraints | ? | ? | A new constraint structure to apply to this track. |

Return type: void

prependConstraint

Prepends (inserts before the start of the list) the provided constraint name and value. This method does not consider whether a same-named constraint already exists in the optional constraints list.

This method applies exclusively to optional constraints; it does not modify mandatory constraints.

This method is a convenience API for programmatically building constraint structures.

| Parameter | Type | Nullable | Optional | Description |

|---|---|---|---|---|

| constraintName | DOMString | ? | ? | The name of the constraint to prepend to the list of optional constraints. |

| constraintValue | any | ? | ? | Either a primitive value (float/DOMString/etc), or a MinMaxConstraint dictionary. |

Return type: void

appendConstraint

Appends (at the end of the list) the provided constraint name and value. This method does not consider whether a same-named constraint already exists in the optional constraints list.

This method applies exclusively to optional constraints; it does not modify mandatory constraints.

This method is a convenience API for programmatically building constraint structures.

| Parameter | Type | Nullable | Optional | Description |

|---|---|---|---|---|

| constraintName | DOMString | ? | ? | The name of the constraint to append to the list of optional constraints. |

| constraintValue | any | ? | ? | Either a primitive value (float/DOMString/etc), or a MinMaxConstraint dictionary. |
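The effect of prepending and appending on priority order can be sketched with a plain array standing in for the optional constraints list. The function `createOptionalList` and its members are hypothetical names used only for this illustration.

```javascript
// Builds a stand-in for a track's optional constraint list.
function createOptionalList() {
    var optional = [];
    function makeConstraint(name, value) {
        var constraint = {};
        constraint[name] = value;
        return constraint;
    }
    return {
        list: optional,
        // Inserted at index 0, so it becomes the highest priority.
        prepend: function (name, value) { optional.unshift(makeConstraint(name, value)); },
        // Added at the end, so it becomes the lowest priority.
        append: function (name, value) { optional.push(makeConstraint(name, value)); }
    };
}

var builder = createOptionalList();
builder.append("width", 650);
builder.append("frameRate", 60);
builder.prepend("width", { min: 1280 }); // a same-named duplicate is allowed
```

The resulting list holds the prepended width constraint first, then the original width constraint, then frameRate.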

Return type: void

The following JavaScript examples demonstrate how the Settings APIs defined in this proposal could be used.

var audioTrack = (AudioStreamTrack.getSourceIds().length > 0) ? new AudioStreamTrack() : null;

var videoTrack = (VideoStreamTrack.getSourceIds().length > 0) ? new VideoStreamTrack() : null;

if (audioTrack && videoTrack) {

videoTrack.onstarted = mediaStarted;

var MS = new MediaStream();

MS.addTrack(audioTrack);

MS.addTrack(videoTrack);

navigator.getUserMedia(MS);

}

function mediaStarted() {

// One of the video/audio devices started (assume both, but may not be strictly true if the user doesn't approve both tracks)

}

var lastUsedSourceId = localStorage["last-source-id"];

var lastUsedSourceIdAvailable = false;

VideoStreamTrack.getSourceIds().forEach(function (sourceId) { if (sourceId == lastUsedSourceId) lastUsedSourceIdAvailable = true; });

if (lastUsedSourceIdAvailable) {

// Request this specific source...

var vidTrack = new VideoStreamTrack( { mandatory: { sourceId: lastUsedSourceId }});

vidTrack.onoverconstrained = function() { alert("User, why didn't you give me access to the same source? I know you have it..."); };

navigator.getUserMedia(new MediaStream([vidTrack]));

}

else

alert("User could you plug back in that camera you were using on this page last time?");

function mediaStarted() {

// objectURL technique

document.querySelector("video").src = URL.createObjectURL(MS, { autoRevoke: true }); // autoRevoke is the default

// direct-assign technique

document.querySelector("video").srcObject = MS; // Proposed API at this time

}

function mediaStarted() {

videoTrack;

var maxWidth = videoTrack.getCapability("width").max;

var maxHeight = videoTrack.getCapability("height").max;

// Check for 1080p+ support

if ((maxWidth >= 1920) && (maxHeight >= 1080)) {

// See if I need to change the current settings...

if ((videoTrack.width < 1920) || (videoTrack.height < 1080)) {

videoTrack.setConstraint("width", maxWidth);

videoTrack.setConstraint("height", maxHeight);

videoTrack.onoverconstrained = failureToComply;

videoTrack.onstatechanged = didItWork;

}

}

else

failureToComply();

}

function failureToComply(e) {

if (e)

console.error("Device failed to apply the requested width and/or height constraints"); // the "overconstrained" event does not identify the failing constraints

else

console.error("Device doesn't support at least 1080p");

}

function didItWork(e) {

e.states.forEach( function (state) { if ((state == "width") || (state == "height")) alert("Resolution changed!"); });

}

function mediaStarted() {

setupRange( videoTrack );

}

function setupRange(videoTrack) {

var zoomCaps = videoTrack.getCapability("zoom");

// Check to see if the device supports zooming...

if (zoomCaps.supported) {

// Set HTML5 range control to min/max values of zoom

var zoomControl = document.querySelector("input[type=range]");

zoomControl.min = zoomCaps.min;

zoomControl.max = zoomCaps.max;

zoomControl.value = videoTrack.zoom;

zoomControl.onchange = applySettingChanges;

}

}

function applySettingChanges(e) {

videoTrack.setConstraint("zoom", parseFloat(e.target.value));

}

function mediaStarted() {

return new MediaStream( [ videoTrack, audioTrack ]);

}

function mediaStarted() {

// Check if this device supports a photo mode...

if (videoTrack.sourceType == "photo-camera") {

videoTrack.onphoto = showPicture;

// Turn on flash only for the snapshot...if available

if (videoTrack.fillLightMode != "notavailable")

videoTrack.setConstraint("fillLightMode", "flash");

else

console.info("Flash not available");

videoTrack.takePhoto();

}

}

function showPicture(e) {

var img = document.querySelector("img");

img.src = URL.createObjectURL(e.data);

}

A newly available device occurs when the user plugs in a device that wasn't previously visible to the user agent.

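var lastSourceCount = VideoStreamTrack.getSourceIds().length;

setInterval(function () {

var currentSourceCount = VideoStreamTrack.getSourceIds().length;

if (currentSourceCount > lastSourceCount)

alert("New device available! Do you want to use it?");

lastSourceCount = currentSourceCount; // remember the count for the next poll

}, 1000 * 60); // Poll every minute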

LocalMediaStream interface

This proposal recommends removing the derived LocalMediaStream interface. All relevant "local" information has been moved to the track level, and anything else that offers a convenience API for working with the full set of tracks on a MediaStream should just be added to the vanilla MediaStream interface itself.

See the previous proposals for a statement on the rationale behind this recommendation.

I'd like to specially thank Anant Narayanan of Mozilla for collaborating on the new settings design, and EKR for his 2c. Also, thanks to Martin Thomson (Microsoft) for his comments and review, and other participants on the public-media-capture mailing list.