This proposal simplifies the application of settings compared with the previous proposal and unifies the setting names with the constraint names and syntax. This unification allows developers to use the same syntax both when defining constraints and when calling the settings APIs.

Reading the current value of a setting is as simple as reading the readonly attribute of the same name. Each setting also has a range of appropriate values (its capabilities), expressed either as enumerated values or as a continuous range; these are the same ranges and enumerated values that may be used when expressing constraints for the given setting. The capabilities of a given setting are retrieved via a getRange API on each source object. Similarly, a change to a setting is requested via a set API on each source object. Finally, for symmetry, a get method is also defined that reports the current value of any setting.
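The read side of this API can be sketched in TypeScript as follows. Only the member names getRange and get come from the text above; the class SketchVideoSource, its sample values and capability ranges, and the helper fitsCapability are hypothetical illustrations, not the proposal's IDL.

```typescript
// Hypothetical sketch of the read-side settings API. SketchVideoSource,
// its sample values and ranges, and fitsCapability are illustrative only.

// A setting's capabilities: a continuous range or a list of enumerated values.
type Capability =
  | { kind: "range"; min: number; max: number }
  | { kind: "enum"; values: readonly string[] };

type SettingValue = number | string;

class SketchVideoSource {
  // Current setting values (invented sample data).
  private readonly current: Record<string, SettingValue> = {
    width: 800,
    height: 600,
    facingMode: "user",
  };

  // The same ranges/values could be used when expressing constraints.
  private readonly capabilities: Record<string, Capability> = {
    width: { kind: "range", min: 160, max: 1920 },
    height: { kind: "range", min: 120, max: 1200 },
    facingMode: { kind: "enum", values: ["user", "environment"] },
  };

  // Readonly attributes that share the setting's name.
  get width(): number {
    return this.current["width"] as number;
  }

  get height(): number {
    return this.current["height"] as number;
  }

  // Capabilities of the named setting, or undefined if it is unknown.
  getRange(name: string): Capability | undefined {
    return this.capabilities[name];
  }

  // Current value of any setting, looked up by name.
  get(name: string): SettingValue | undefined {
    return this.current[name];
  }
}

// True when `value` lies inside the range or is one of the enumerated values.
function fitsCapability(cap: Capability, value: SettingValue): boolean {
  if (cap.kind === "range") {
    return typeof value === "number" && value >= cap.min && value <= cap.max;
  }
  return typeof value === "string" && cap.values.indexOf(value) !== -1;
}

const source = new SketchVideoSource();
console.log(source.width, source.get("facingMode")); // 800 user
const widthRange = source.getRange("width");
if (widthRange) {
  console.log(fitsCapability(widthRange, 1280)); // true
}
```

Because capabilities use the same shape as constraint values, an application could check a candidate value with fitsCapability before building a constraint or a change request.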

As noted in prior proposals, camera and microphone settings must be applied asynchronously so that web applications remain responsive even with devices that do not respond quickly to setting changes. This is especially true for settings changes communicated over a peer connection.
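A minimal sketch of asynchronous application, assuming a hypothetical Promise-returning set(). The class AsyncSettingsSource, its onChange callback, and the simulated device round trip are inventions for illustration; the text here does not specify how completion of a change is reported.

```typescript
// Hypothetical sketch: set() returns immediately with a Promise, and the
// new value becomes visible only after the (simulated) device applies it.
// AsyncSettingsSource and onChange are illustrative names, not the proposal's API.

type ChangeListener = (name: string, value: number) => void;

class AsyncSettingsSource {
  private readonly values = new Map<string, number>([
    ["width", 800],
    ["height", 600],
  ]);
  private readonly listeners: ChangeListener[] = [];

  // Readonly attribute: reflects only settings that have been applied.
  get width(): number {
    return this.values.get("width") ?? 0;
  }

  onChange(listener: ChangeListener): void {
    this.listeners.push(listener);
  }

  // Request a change without blocking the caller.
  set(name: string, value: number): Promise<void> {
    return this.deviceRoundTrip().then(() => {
      this.values.set(name, value);
      for (const listener of this.listeners) {
        listener(name, value);
      }
    });
  }

  // Stand-in for a slow camera or a remote peer; here it simply
  // completes on a later microtask.
  private deviceRoundTrip(): Promise<void> {
    return Promise.resolve();
  }
}

const camera = new AsyncSettingsSource();
const pending = camera.set("width", 1920);
console.log(camera.width); // 800: the request has not been applied yet
pending.then(() => console.log(camera.width)); // 1920
```

In this sketch the page keeps running while the change is in flight, and the readonly attribute changes only once the new value has actually taken effect.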

4.1 Expectations around changing settings

Browsers provide a media pipeline from sources to sinks. In a browser, sinks are the <img>, <video> and <audio> tags. Traditional sources include streamed content, files and web resources. The media produced by these sources typically does not change over time, so these sources can be considered static.

The sinks that display these sources to the user (the actual tags themselves) have a variety of controls for manipulating the source content. For example, an <img> tag scales down a huge source image of 1600x1200 pixels to fit in a rectangle defined with width="400" and height="300".
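The <img> example above amounts to a simple scale computation. The sketch below assumes an aspect-ratio-preserving fit, and fitScale and fitted are hypothetical helpers; because 1600x1200 and 400x300 share a 4:3 aspect ratio, the result is the same as stretching the image to exactly 400 by 300.

```typescript
// Sketch: how much a sink scales a source to fit its display rectangle.
// Assumes an aspect-ratio-preserving fit; helper names are illustrative.

interface Size {
  width: number;
  height: number;
}

// Scale factor that fits `source` inside `rect` without distortion.
function fitScale(source: Size, rect: Size): number {
  return Math.min(rect.width / source.width, rect.height / source.height);
}

// Displayed size of `source` after fitting it into `rect`.
function fitted(source: Size, rect: Size): Size {
  const s = fitScale(source, rect);
  return { width: Math.round(source.width * s), height: Math.round(source.height * s) };
}

const image: Size = { width: 1600, height: 1200 };
const box: Size = { width: 400, height: 300 };
console.log(fitScale(image, box)); // 0.25
console.log(fitted(image, box)); // { width: 400, height: 300 }
```

A factor below 1 means the sink is scaling down; a factor above 1 means it is scaling up, which is where quality is lost.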

The getUserMedia API adds dynamic sources such as microphones and cameras, whose characteristics can change in response to application needs. These sources can be considered dynamic in nature. A <video> element that displays media from a dynamic source can either perform scaling itself or feed information back along the media pipeline and have the source produce content more suitable for display.

Note

This sort of feedback loop merely enables an optimization, but the gain is non-trivial: it can save battery power, reduce network congestion, and so on.

This proposal assumes that MediaStream sinks (such as <video>, <audio>, and even RTCPeerConnection) will continue to have mechanisms to further transform the source stream beyond what the settings described in this proposal offer. (The sink transformation options, including those of RTCPeerConnection, are outside the scope of this proposal.)

By definition, changing a setting on a stream's source affects all downstream sinks that use that source. Many sinks, such as the <video> element or RTCPeerConnection, may be able to take these changes in stride. Others, like the Recorder API, may fail as a result of a source change.

The RTCPeerConnection is an interesting object because it acts simultaneously as both a sink and a source for over-the-network streams. As a sink, it has source transformation capabilities (e.g., lowering bit rates, scaling resolutions up or down, adjusting frame rates); as a source, it may have its own settings changed by a track source that it provides (in this proposal, such sources are the VideoStreamRemoteSource and AudioStreamRemoteSource objects).

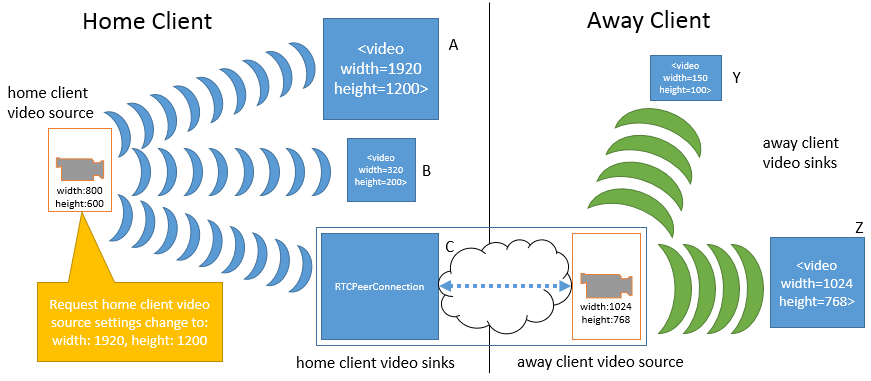

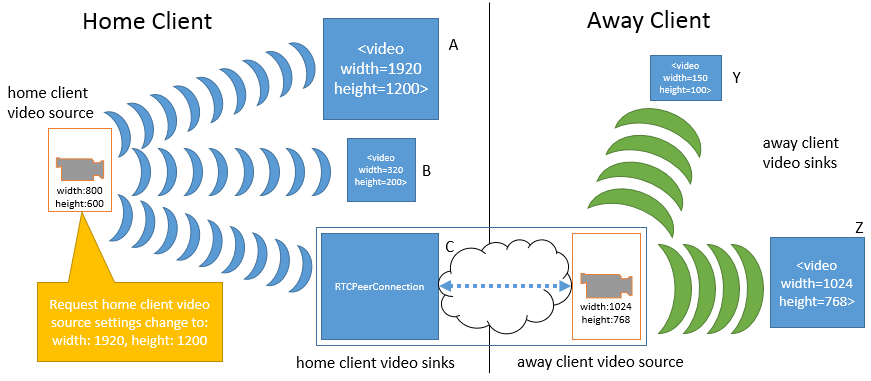

To illustrate how changes to a given source impact various sinks, consider the following example. It uses only width and height, but the same principles apply to any of the settings exposed in this proposal. In the first figure, a home client has obtained a video source from its local video camera. The source device's width and height are 800 and 600 pixels, respectively. Three MediaStream objects on the home client contain tracks that use this same source. The three media streams are connected to three different sinks: a <video> element (A), another <video> element (B), and a peer connection (C). The peer connection is streaming the source video to an away client. On the away client, there are two media streams with tracks that use the peer connection as a source. These two media streams are connected to two <video> element sinks (Y and Z).

Note that in the current state, all of the sinks on the home client must apply a transformation to the original source's dimensions. A is scaling the video up (resulting in loss of quality), B is scaling the video down, and C is also scaling the video up slightly for sending over the network. On the away client, sink Y is scaling the video down substantially, while sink Z is not applying any scaling.

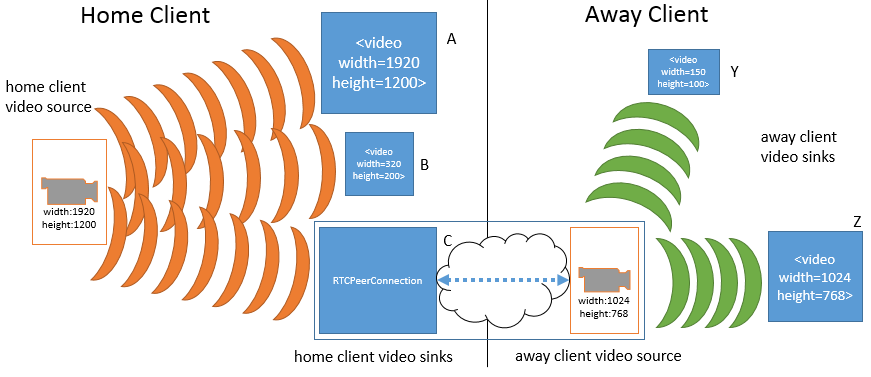

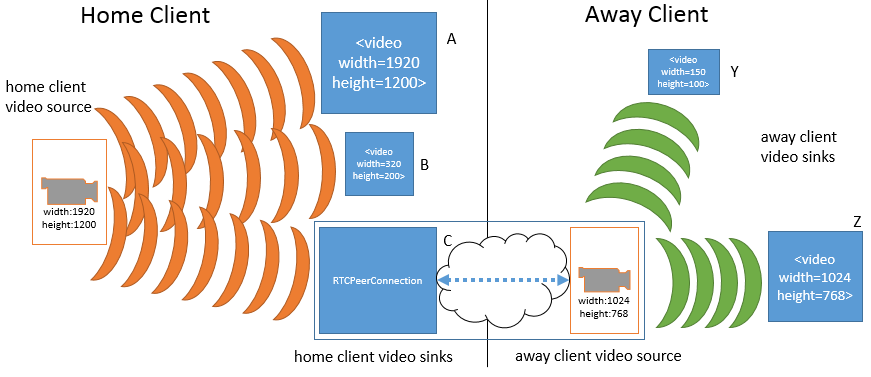

Using the settings APIs defined in the next section, the home client's video source is changed to a higher resolution (1920 by 1200 pixels).

Note that the source change immediately affects all of the sinks on the home client but does not impact any of the sinks (or sources) on the away client. With the increase in the home client source video's dimensions, sink A no longer has to perform any scaling, while sink B must scale down even further than before. Sink C (the peer connection) must now scale the video down so that the video it transmits to the away client stays the same.
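The propagation rule can be modeled with a source that notifies every sink attached to it, and only those sinks. VideoSource, Sink, and all sink sizes are hypothetical (the example states only the source dimensions), and the change is applied synchronously for brevity. The two sources stand for the home client's camera and the away client's peer-connection source, which are deliberately not linked in this model.

```typescript
// Hypothetical model of how a source change reaches its sinks. Class names
// and sink sizes are invented; only the source sizes come from the example.

interface Size {
  width: number;
  height: number;
}

type Scaling = "up" | "down" | "none";

class Sink {
  readonly name: string;
  readonly size: Size;
  scaling: Scaling = "none";

  constructor(name: string, size: Size) {
    this.name = name;
    this.size = size;
  }

  // Recompute the transformation this sink must apply to the source.
  onSourceChanged(source: Size): void {
    const factor = Math.min(this.size.width / source.width, this.size.height / source.height);
    this.scaling = factor > 1 ? "up" : factor < 1 ? "down" : "none";
  }
}

class VideoSource {
  private size: Size;
  private readonly sinks: Sink[] = [];

  constructor(size: Size) {
    this.size = size;
  }

  attach(sink: Sink): void {
    this.sinks.push(sink);
    sink.onSourceChanged(this.size);
  }

  // A settings change affects every sink attached to this source, and only those.
  setSize(size: Size): void {
    this.size = size;
    for (const sink of this.sinks) {
      sink.onSourceChanged(size);
    }
  }
}

// Home client: camera at 800x600 feeding sinks A, B and C.
const home = new VideoSource({ width: 800, height: 600 });
const sinkA = new Sink("A", { width: 1920, height: 1200 });
const sinkB = new Sink("B", { width: 320, height: 240 });
const sinkC = new Sink("C", { width: 960, height: 720 });
[sinkA, sinkB, sinkC].forEach((s) => home.attach(s));

// Away client: the peer connection's source feeding sinks Y and Z.
const away = new VideoSource({ width: 960, height: 720 });
const sinkY = new Sink("Y", { width: 240, height: 180 });
const sinkZ = new Sink("Z", { width: 960, height: 720 });
[sinkY, sinkZ].forEach((s) => away.attach(s));

home.setSize({ width: 1920, height: 1200 });
console.log(sinkA.scaling, sinkB.scaling, sinkC.scaling); // none down down
console.log(sinkY.scaling, sinkZ.scaling); // down none
```

Before the change, these invented sizes reproduce the first figure's description (A and C scale up, B and Y scale down, Z does not scale); after it, only the home client's sinks are recomputed.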

While not shown, an equally valid settings change request could be made of the away client's video source (the peer connection on the away client's side). This would not only impact sinks Y and Z in the same manner as before, but would also cause renegotiation with the peer connection on the home client in order to alter the transformation that it applies to the home client's video source. Such a change would not affect sinks A or B or the home client's video source.

Note

This proposal does not define a mechanism by which a change to the away client's video source could automatically trigger a change to the home client's video source. Implementations may choose to make such source-to-sink optimizations as long as they only do so within the constraints established by the application, as the next example describes.

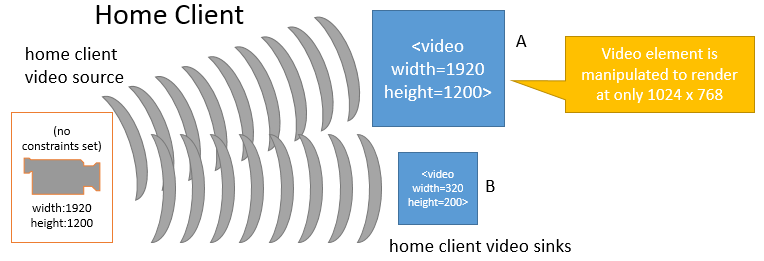

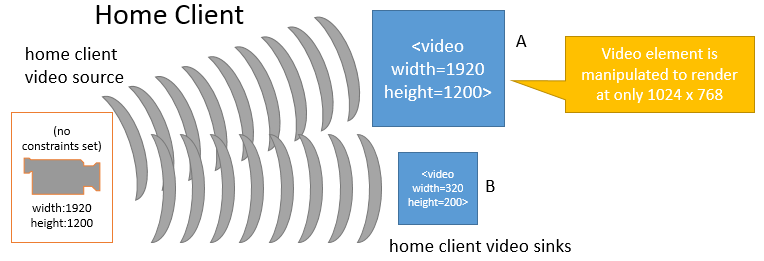

Changes to a given source will obviously impact the sinks that consume it. However, in some situations changes to a given sink may also give implementations cause to adjust the characteristics of a source's stream. This is illustrated in the following figures. In the first figure below, the home client's video source is sending a video stream sized at 1920 by 1200 pixels. The video source is also unconstrained, so the exact source dimensions are flexible as far as the application is concerned. Two MediaStream objects contain tracks that use this same source, and those MediaStreams are connected to two different <video> element sinks, A and B. Sink A has been sized to width="1920" and height="1200" and is displaying the source's video without any transformations. Sink B has been sized smaller and, as a result, is scaling the video down to fit its rectangle of 320 pixels across by 200 pixels down.

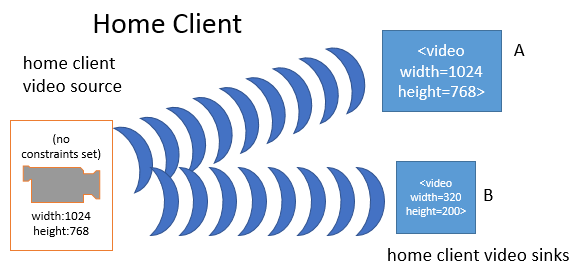

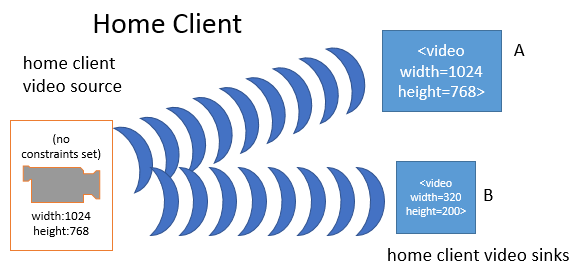

When the application changes sink A to a smaller size (from 1920 to 1024 pixels wide and from 1200 to 768 pixels tall), the browser's media pipeline may recognize that none of its sinks require the higher source resolution and that needless work is being done by both the source and sink A. In such a case, and absent any other constraints forcing the source to continue producing the higher-resolution video, the media pipeline may change the source resolution.

In the above figure, the home client's video source resolution was changed to max(sinkA, sinkB), the larger of the two sink sizes, in order to optimize playback. While not shown above, the same behavior could apply to peer connections and other sinks.
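The max(sinkA, sinkB) rule can be sketched as a small function. The constraint shape (SizeConstraints) and the helper names are assumptions for illustration; taking the maximum of each dimension independently is one plausible reading of max(sinkA, sinkB), and a real pipeline would also have to stay within the device's capabilities.

```typescript
// Sketch: choose a source resolution no larger than the biggest sink needs,
// clamped to any application constraints. All names here are hypothetical.

interface Size {
  width: number;
  height: number;
}

interface SizeConstraints {
  minWidth?: number;
  maxWidth?: number;
  minHeight?: number;
  maxHeight?: number;
}

function clamp(value: number, min: number = -Infinity, max: number = Infinity): number {
  return Math.min(Math.max(value, min), max);
}

// Per-dimension maximum over all sink sizes, i.e. max(sinkA, sinkB, ...).
function largestSinkSize(sinks: readonly Size[]): Size {
  return sinks.reduce<Size>(
    (acc, s) => ({ width: Math.max(acc.width, s.width), height: Math.max(acc.height, s.height) }),
    { width: 0, height: 0 },
  );
}

// Application constraints win over the optimization.
function chooseSourceSize(sinks: readonly Size[], c: SizeConstraints = {}): Size {
  const need = largestSinkSize(sinks);
  return {
    width: clamp(need.width, c.minWidth, c.maxWidth),
    height: clamp(need.height, c.minHeight, c.maxHeight),
  };
}

const sizeA: Size = { width: 1024, height: 768 };
const sizeB: Size = { width: 320, height: 200 };
console.log(chooseSourceSize([sizeA, sizeB])); // { width: 1024, height: 768 }
// A constraint that keeps the source at full resolution blocks the optimization.
console.log(chooseSourceSize([sizeA, sizeB], { minWidth: 1920, minHeight: 1200 })); // { width: 1920, height: 1200 }
```

Because each dimension is maximized independently, sinks with different aspect ratios can yield a size that matches neither (1024x576 and 800x600 give 1024x600); picking among the source's supported modes would avoid that.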