Abstract

This specification defines a JavaScript API to enable web developers to incorporate speech recognition and synthesis into their web pages.

It enables developers to use scripting to generate text-to-speech output and to use speech recognition as an input for forms, continuous dictation and control.

The JavaScript API allows web pages to control activation and timing and to handle results and alternatives.

Status of This Document

This specification was published by the Speech API Community Group. It is not a W3C Standard nor is it on the W3C Standards Track.

Please note that under the W3C Community Final Specification Agreement (FSA) other conditions apply.

Learn more about W3C Community and Business Groups.

All feedback is welcome.

1 Conformance requirements

All diagrams, examples, and notes in this specification are non-normative, as are all sections explicitly marked non-normative.

Everything else in this specification is normative.

The key words "MUST", "MUST NOT", "REQUIRED", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in the normative parts of this document are to be interpreted as described in RFC2119. For readability, these words do not appear in all uppercase letters in this specification. [RFC2119]

Requirements phrased in the imperative as part of algorithms (such as "strip any leading space characters" or "return false and abort these steps") are to be interpreted with the meaning of the key word ("must", "should", "may", etc.) used in introducing the algorithm.

Conformance requirements phrased as algorithms or specific steps may be implemented in any manner, so long as the end result is equivalent.

(In particular, the algorithms defined in this specification are intended to be easy to follow, and not intended to be performant.)

User agents may impose implementation-specific limits on otherwise unconstrained inputs, e.g. to prevent denial of service attacks, to guard against running out of memory, or to work around platform-specific limitations.

Implementations that use ECMAScript to implement the APIs defined in this specification must implement them in a manner consistent with the ECMAScript Bindings defined in the Web IDL specification, as this specification uses that specification's terminology. [WEBIDL]

2 Introduction

This section is non-normative.

The Web Speech API aims to enable web developers to provide, in a web browser, speech-input and text-to-speech output features that are typically not available when using standard speech-recognition or screen-reader software.

The API itself is agnostic of the underlying speech recognition and synthesis implementation and can support both server-based and client-based/embedded recognition and synthesis.

The API is designed to enable both brief (one-shot) speech input and continuous speech input.

Speech recognition results are provided to the web page as a list of hypotheses, along with other relevant information for each hypothesis.

This specification is a subset of the API defined in the HTML Speech Incubator Group Final Report [1].

That report is entirely informative since it is not a standards track document.

All portions of that report may be considered informative with regard to this document and provide an informative background to this document.

This specification is a fully-functional subset of that report.

Specifically, this subset excludes the underlying transport protocol and the proposed additions to HTML markup, and it defines a simplified subset of the JavaScript API.

This subset supports the majority of use-cases and sample code in the Incubator Group Final Report.

This subset does not preclude future standardization of additions to the markup, API or underlying transport protocols, and indeed the Incubator Report defines a potential roadmap for such future work.

3 Use Cases

This section is non-normative.

This specification supports the following use cases, as defined in Section 4 of the Incubator Report.

- Voice Web Search

- Speech Command Interface

- Domain Specific Grammars Contingent on Earlier Inputs

- Continuous Recognition of Open Dialog

- Domain Specific Grammars Filling Multiple Input Fields

- Speech UI present when no visible UI need be present

- Voice Activity Detection

- Temporal Structure of Synthesis to Provide Visual Feedback

- Hello World

- Speech Translation

- Speech Enabled Email Client

- Dialog Systems

- Multimodal Interaction

- Speech Driving Directions

- Multimodal Video Game

- Multimodal Search

To keep the API to a minimum, this specification does not directly support the following use case.

- Rerecognition

This does not preclude adding support for this as a future API enhancement, and indeed the Incubator report provides a roadmap for doing so.

Note that for many usages and implementations, it is possible to avoid the need for Rerecognition by using a larger grammar, or by combining multiple grammars — both of these techniques are supported in this specification.

4 Security and privacy considerations

- User agents must only start speech input sessions with explicit, informed user consent.

User consent can include, for example:

- User click on a visible speech input element which has an obvious graphical representation showing that it will start speech input.

- Accepting a permission prompt shown as the result of a call to SpeechRecognition.start.

- Consent previously granted to always allow speech input for this web page.

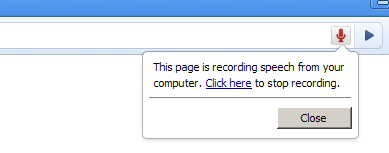

- User agents must give the user an obvious indication when audio is being recorded.

- In a graphical user agent, this could be a mandatory notification displayed by the user agent as part of its chrome and not accessible by the web page.

This could for example be a pulsating/blinking record icon as part of the browser chrome/address bar, an indication in the status bar, an audible notification, or anything else relevant and accessible to the user.

This UI element must also allow the user to stop recording.

- In a speech-only user agent, the indication may for example take the form of the system speaking the label of the speech input element, followed by a short beep.

- The user agent may also give the user a longer explanation the first time speech input is used, to let the user know what it is and how they can tune their privacy settings to disable speech recording if required.

- To minimize the chance of users unwittingly allowing web pages to record speech without their knowledge, implementations must abort an active speech input session if the web page loses input focus to another window or to another tab within the same user agent.

Implementation considerations

This section is non-normative.

- Spoken password inputs can be problematic from a security perspective, but it is up to the user to decide if they want to speak their password.

- Speech input could potentially be used to eavesdrop on users.

Malicious webpages could use tricks such as hiding the input element or otherwise making the user believe that it has stopped recording speech while continuing to do so.

They could also potentially style the input element to appear as something else and trick the user into clicking it.

An example of styling the file input element can be seen at http://www.quirksmode.org/dom/inputfile.html.

The above recommendations are intended to reduce the risk of such attacks.

5 API Description

This section is normative.

5.1 The SpeechRecognition Interface

The speech recognition interface is the scripted web API for controlling a given recognition.

The term "final result" indicates a SpeechRecognitionResult in which the final attribute is true.

The term "interim result" indicates a SpeechRecognitionResult in which the final attribute is false.

5.1.1 SpeechRecognition Attributes

- grammars attribute

- The grammars attribute stores the collection of SpeechGrammar objects which represent the grammars that are active for this recognition.

- lang attribute

- This attribute will set the language of the recognition for the request, using a valid BCP 47 language tag. [BCP47]

If unset, it remains unset when read from script, but defaults to the lang of the HTML document root element and associated hierarchy.

This default value is computed and used when the input request opens a connection to the recognition service.

- continuous attribute

- When the continuous attribute is set to false, the user agent must return no more than one final result in response to starting recognition, for example in a single-turn pattern of interaction.

When the continuous attribute is set to true, the user agent must return zero or more final results representing multiple consecutive recognitions in response to starting recognition, for example in dictation.

The default value must be false. Note that this attribute does not affect interim results.

- interimResults attribute

- Controls whether interim results are returned.

When set to true, interim results should be returned.

When set to false, interim results must not be returned.

The default value must be false. Note that this attribute does not affect final results.

- maxAlternatives attribute

- This attribute will set the maximum number of SpeechRecognitionAlternatives per result.

The default value is 1.

- serviceURI attribute

- The serviceURI attribute specifies the location of the speech recognition service that the web application wishes to use.

If this attribute is unset at the time of the start method call, then the user agent must use the user agent default speech service.

Note that the serviceURI is a generic URI and can thus point to local services either through use of a URN with meaning to the user agent or by specifying a URL that the user agent recognizes as a local service.

Additionally, the user agent default can be local or remote and can incorporate end user choices via interfaces provided by the user agent such as browser configuration parameters.

[Editor note: The group is currently discussing whether WebRTC might be used to specify selection of audio sources and remote recognizers.] [5]
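The attribute defaults above can be made explicit in page code. The sketch below is a minimal, hypothetical helper (`createRecognizer` is not part of this API) that takes the recognition constructor as a parameter so it can be exercised outside a browser. In a browser you would pass the global constructor; some implementations expose it under a vendor prefix such as `webkitSpeechRecognition`. The helper leaves `lang` unset unless asked, so the document-language default described above applies, and it never sets `serviceURI`, so the user agent default service is used.

```javascript
// Minimal sketch: build a recognizer with explicit settings.
// `createRecognizer` and its option names are hypothetical, not part of the API.
function createRecognizer(RecognitionCtor, options = {}) {
  const recognition = new RecognitionCtor();
  // Spec defaults: continuous = false, interimResults = false, maxAlternatives = 1.
  recognition.continuous = options.continuous ?? false;
  recognition.interimResults = options.interimResults ?? false;
  recognition.maxAlternatives = options.maxAlternatives ?? 1;
  // Leaving lang unset lets the user agent fall back to the document language
  // when the connection to the recognition service is opened.
  if (options.lang !== undefined) {
    recognition.lang = options.lang;
  }
  return recognition;
}

// Browser usage (assumes the constructor is available as SpeechRecognition):
// const dictation = createRecognizer(SpeechRecognition, {
//   continuous: true, interimResults: true, maxAlternatives: 3, lang: "en-US",
// });
```

A one-shot voice search would keep the defaults; a dictation page would typically turn on both `continuous` and `interimResults`.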

5.1.2 SpeechRecognition Methods

- start method

- When the start method is called it represents the moment in time the web application wishes to begin recognition.

When the speech input is streaming live through the input media stream, then this start call represents the moment in time that the service must begin to listen and try to match the grammars associated with this request.

Once the system is successfully listening for recognition, the user agent must raise a start event.

If the start method is called on an already started object (that is, start has previously been called, and no error or end event has fired on the object), the user agent must throw an InvalidStateError exception and ignore the call.

- stop method

- The stop method represents an instruction to the recognition service to stop listening to more audio, and to try to return a result using just the audio that it has already received for this recognition.

A typical use of the stop method might be for a web application where the end user is doing the end pointing, similar to a walkie-talkie.

The end user might press and hold the space bar to talk to the system: pressing the space bar calls the start method, and releasing it calls the stop method to ensure that the system is no longer listening to the user.

Once the stop method is called the speech service must not collect additional audio and must not continue to listen to the user.

The speech service must attempt to return a recognition result (or a nomatch) based on the audio that it has already collected for this recognition.

If the stop method is called on an object which is already stopped or being stopped (that is, start was never called on it, the end or error event has fired on it, or stop was previously called on it), the user agent must ignore the call.

- abort method

- The abort method is a request to immediately stop listening and recognizing, and to return no information other than that the system is done.

When the abort method is called, the speech service must stop recognizing.

The user agent must raise an end event once the speech service is no longer connected.

If the abort method is called on an object which is already stopped or aborting (that is, start was never called on it, the end or error event has fired on it, or abort was previously called on it), the user agent must ignore the call.
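The walkie-talkie pattern described for the stop method can be sketched as follows. This is a minimal sketch under stated assumptions: `attachPushToTalk` is a hypothetical helper, the space bar is identified by `KeyboardEvent.code === "Space"`, and the page tracks the start/end/error lifecycle itself so that start is never called on an already started object (which would throw InvalidStateError).

```javascript
// Minimal push-to-talk sketch. `keyTarget` is any EventTarget receiving
// keydown/keyup events (e.g. `document`); `recognition` is a SpeechRecognition
// or any EventTarget with start() and stop() methods.
function attachPushToTalk(keyTarget, recognition) {
  // True from start() until an end or error event fires, mirroring the
  // condition under which start() would throw InvalidStateError.
  let active = false;
  recognition.addEventListener("end", () => { active = false; });
  recognition.addEventListener("error", () => { active = false; });

  keyTarget.addEventListener("keydown", (event) => {
    // Holding a key repeats keydown; only the first press starts recognition.
    if (event.code !== "Space" || active) return;
    active = true;
    recognition.start();
  });

  keyTarget.addEventListener("keyup", (event) => {
    if (event.code !== "Space" || !active) return;
    // stop() asks the service for a result based on audio already captured.
    // A repeated stop() before end fires is ignored by the user agent.
    recognition.stop();
  });
}
```

Using abort instead of stop in the keyup handler would discard the captured audio rather than ask for a result.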

5.1.3 SpeechRecognition Events

The DOM Level 2 Event Model is used for speech recognition events.

The methods in the EventTarget interface should be used for registering event listeners.

The SpeechRecognition interface also contains convenience attributes for registering a single event handler for each event type.

The events do not bubble and are not cancelable.

For all these events, the timeStamp attribute defined in the DOM Level 2 Event interface must be set to the best possible estimate of when the real-world event which the event object represents occurred.

This timestamp must be represented in the user agent's view of time, even for events where the timestamps in question could be raised on a different machine like a remote recognition service (e.g., in a speechend event with a remote speech endpointer).

Unless specified below, the ordering of the different events is undefined.

For example, some implementations may fire audioend before speechstart or speechend if the audio detector is client-side and the speech detector is server-side.

- audiostart event

- Fired when the user agent has started to capture audio.

- soundstart event

- Fired when some sound, possibly speech, has been detected.

This must be fired with low latency, e.g. by using a client-side energy detector.

- speechstart event

- Fired when the speech that will be used for speech recognition has started.

- speechend event

- Fired when the speech that will be used for speech recognition has ended.

The speechstart event must always have been fired before speechend.

- soundend event

- Fired when some sound is no longer detected.

This must be fired with low latency, e.g. by using a client-side energy detector.

The soundstart event must always have been fired before soundend.

- audioend event

- Fired when the user agent has finished capturing audio.

The audiostart event must always have been fired before audioend.

- result event

- Fired when the speech recognizer returns a result.

The event must use the SpeechRecognitionEvent interface.

- nomatch event

- Fired when the speech recognizer returns a final result with no recognition hypothesis that meets or exceeds the confidence threshold.

The event must use the SpeechRecognitionEvent interface.

The results attribute in the event may contain speech recognition results that are below the confidence threshold or may be null.

- error event

- Fired when a speech recognition error occurs.

The event must use the SpeechRecognitionError interface.

- start event

- Fired when the recognition service has begun to listen to the audio with the intention of recognizing.

- end event

- Fired when the service has disconnected.

The event must always be generated when the session ends no matter the reason for the end.
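Because the ordering of these events is only partly constrained (audiostart before audioend, soundstart before soundend, speechstart before speechend), logging the order an implementation actually uses can help when debugging. The sketch below assumes only that the recognition object is an EventTarget; `logRecognitionEvents` is a hypothetical helper.

```javascript
// Event types defined in this section.
const RECOGNITION_EVENTS = [
  "audiostart", "soundstart", "speechstart", "speechend", "soundend",
  "audioend", "result", "nomatch", "error", "start", "end",
];

// Records each event type and its timeStamp in arrival order and returns
// the array it fills.
function logRecognitionEvents(recognition) {
  const log = [];
  for (const type of RECOGNITION_EVENTS) {
    recognition.addEventListener(type, (event) => {
      log.push({ type, timeStamp: event.timeStamp });
    });
  }
  return log;
}
```

Since end is generated however the session finishes, an end listener is a reasonable single place for page-side cleanup such as resetting a microphone indicator.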

5.1.4 SpeechRecognitionError

The SpeechRecognitionError event is the interface used for the error event.

- error attribute

- The error attribute is an enumeration indicating what has gone wrong.

The values are:

- "no-speech"

- No speech was detected.

- "aborted"

- Speech input was aborted, for example by user-agent-specific behavior such as UI that lets the user cancel speech input.

- "audio-capture"

- Audio capture failed.

- "network"

- Some network communication that was required to complete the recognition failed.

- "not-allowed"

- The user agent is not allowing any speech input to occur for reasons of security, privacy or user preference.

- "service-not-allowed"

- The user agent is not allowing the speech service requested by the web application to be used, but would allow some speech service, either because the user agent doesn't support the selected one or because of reasons of security, privacy or user preference.

- "bad-grammar"

- There was an error in the speech recognition grammar or semantic tags, or the grammar format or semantic tag format is unsupported.

- "language-not-supported"

- The language was not supported.

- message attribute

- The message content is implementation specific.

This attribute is primarily intended for debugging and developers should not use it directly in their application user interface.
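Since message is implementation specific and not meant for end users, a page would typically map the error code to its own wording. The sketch below shows one way to do that; the user-facing strings and the `describeRecognitionError` name are illustrative assumptions, not text defined by this specification.

```javascript
// Illustrative page-side wording for each error code defined above.
const RECOGNITION_ERROR_TEXT = new Map([
  ["no-speech", "No speech was detected. Please try again."],
  ["aborted", "Listening was cancelled."],
  ["audio-capture", "No microphone could be used."],
  ["network", "A network problem interrupted recognition."],
  ["not-allowed", "Speech input is not allowed for this page."],
  ["service-not-allowed", "The requested speech service is not available."],
  ["bad-grammar", "The speech grammar could not be used."],
  ["language-not-supported", "This language is not supported."],
]);

function describeRecognitionError(event) {
  // Fall back to a generic string for codes this sketch does not know about.
  return RECOGNITION_ERROR_TEXT.get(event.error) ?? "Speech recognition failed.";
}

// Browser usage: show the mapped text, keep `message` for the console only.
// recognition.onerror = (e) => console.warn(describeRecognitionError(e), e.message);
```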

5.1.5 SpeechRecognitionAlternative

The SpeechRecognitionAlternative represents a simple view of the response that gets used in an n-best list.

- transcript attribute

- The transcript string represents the raw words that the user spoke.

For continuous recognition, leading or trailing whitespace must be included where necessary such that concatenation of consecutive SpeechRecognitionResults produces a proper transcript of the session.

- confidence attribute

- The confidence represents a numeric estimate between 0 and 1 of how confident the recognition system is that the recognition is correct.

A higher number means the system is more confident.

[Editor note: The group is currently discussing whether confidence can be specified in a speech-recognition-engine-independent manner and whether confidence threshold and nomatch should be included, because this is not a dialog API.] [4]

5.1.6 SpeechRecognitionResult

The SpeechRecognitionResult object represents a single one-shot recognition match, either as one small part of a continuous recognition or as the complete return result of a non-continuous recognition.

- length attribute

- The length attribute represents how many n-best alternatives are represented in the item array.

- item getter

- The item getter returns a SpeechRecognitionAlternative from the index into an array of n-best values.

If index is greater than or equal to length, this returns null.

The user agent must ensure that the length attribute is set to the number of elements in the array.

The user agent must ensure that the n-best list is sorted in non-increasing confidence order (the confidence of each element must be less than or equal to the confidence of the preceding elements).

- final attribute

- The final boolean must be set to true if this is the final time the speech service will return this particular index value.

If the value is false, then this represents an interim result that could still be changed.
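Because the n-best list is sorted by non-increasing confidence, the best hypothesis is always `item(0)`. The sketch below relies on that and adds a check for the ordering, for example for use in a test harness. Both helper names are hypothetical, and the optional threshold is a page-side choice, separate from any confidence threshold the recognizer applies before firing nomatch.

```javascript
// `result` is a SpeechRecognitionResult or anything with `length` and `item()`.
function isNBestSorted(result) {
  for (let i = 1; i < result.length; i++) {
    if (result.item(i).confidence > result.item(i - 1).confidence) return false;
  }
  return true;
}

// Returns the top alternative, or null if the list is empty or the top
// confidence is below the page's own threshold.
function bestAlternative(result, minConfidence = 0) {
  if (result.length === 0) return null;
  const top = result.item(0);
  return top.confidence >= minConfidence ? top : null;
}
```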

5.1.7 SpeechRecognitionResultList

The SpeechRecognitionResultList object holds a sequence of recognition results representing the complete return result of a continuous recognition.

For a non-continuous recognition it will hold only a single value.

- length attribute

- The length attribute indicates how many results are represented in the item array.

- item getter

- The item getter returns a SpeechRecognitionResult from the index into an array of result values.

If index is greater than or equal to length, this returns null.

The user agent must ensure that the length attribute is set to the number of elements in the array.

5.1.8 SpeechRecognitionEvent

The SpeechRecognitionEvent is the event that is raised each time there are any changes to interim or final results.

- resultIndex attribute

- The resultIndex must be set to the lowest index in the "results" array that has changed.

- results attribute

- The array of all current recognition results for this session.

Specifically all final results that have been returned, followed by the current best hypothesis for all interim results.

It must consist of zero or more final results followed by zero or more interim results.

On subsequent SpeechRecognitionEvent events, interim results may be overwritten by a newer interim result or by a final result, or may be removed (when at the end of the "results" array and the array length decreases).

Final results must not be overwritten or removed.

All entries for indexes less than resultIndex must be identical to the array that was present when the last SpeechRecognitionEvent was raised.

All array entries (if any) for indexes equal to or greater than resultIndex that were present in the array when the last SpeechRecognitionEvent was raised are removed and overwritten with new results.

The length of the "results" array may increase or decrease, but must not be less than resultIndex.

Note that when resultIndex equals results.length, no new results are returned; this may occur when the array length decreases to remove one or more interim results.

- interpretation attribute

- The interpretation represents the semantic meaning from what the user said.

This might be determined, for instance, through the SISR specification of semantics in a grammar.

[Editor note: The group is currently discussing options for the value of the interpretation attribute when no interpretation has been returned by the recognizer. Current options are 'null' or a copy of the transcript.] [2]

- emma attribute

- EMMA 1.0 representation of this result. [EMMA]

The contents of this result could vary across user agents and recognition engines, but all implementations must expose a valid XML document complete with EMMA namespace.

User agent implementations for recognizers that supply EMMA must contain all annotations and content generated by the recognition resources utilized for recognition, except where infeasible due to conflicting attributes.

The user agent may add additional annotations to provide a richer result for the developer.
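The results rules above mean a page can rebuild the whole transcript from each result event: final results come first and never change, and interim results trail them. The sketch below does that using the best alternative of each result and plain concatenation, which relies on the transcript attribute already carrying any needed whitespace. `splitTranscript` is hypothetical; it uses the `final` attribute name from this draft (shipping implementations may expose the flag as `isFinal`).

```javascript
// `event` is a SpeechRecognitionEvent or any object with `resultIndex` and a
// `results` list supporting `length` and `item()`.
function splitTranscript(event) {
  let finalText = "";
  let interimText = "";
  for (let i = 0; i < event.results.length; i++) {
    const result = event.results.item(i);
    if (result.length === 0) continue;
    const text = result.item(0).transcript; // best alternative
    if (result.final) {
      finalText += text;
    } else {
      interimText += text;
    }
  }
  // Entries below resultIndex are unchanged since the previous event, so a
  // page that renders incrementally only needs to redraw from here.
  return { finalText, interimText, changedFrom: event.resultIndex };
}
```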

5.1.9 SpeechGrammar

The SpeechGrammar object represents a container for a grammar.

[Editor note: The group is currently discussing options for which grammar formats should be supported, how builtin grammar types are specified, and default grammars when not specified.] [2] [3]

This structure has the following attributes:

- src attribute

- The required src attribute is the URI for the grammar.

Note that some services may support builtin grammars that can be specified using a builtin URI scheme.

- weight attribute

- The optional weight attribute controls the weight that the speech recognition service should use with this grammar.

By default, a grammar has a weight of 1.

Larger weight values positively weight the grammar while smaller weight values make the grammar weighted less strongly.

5.1.10 SpeechGrammarList

The SpeechGrammarList object represents a collection of SpeechGrammar objects.

This structure has the following attributes:

- length attribute

- The length attribute represents how many grammars are currently in the array.

- item getter

- The item getter returns a SpeechGrammar from the index into an array of grammars.

The user agent must ensure that the length attribute is set to the number of elements in the array.

The user agent must ensure that the index order from smallest to largest matches the order in which grammars were added to the array.

- addFromURI method

- This method appends a grammar to the grammars array based on a URI.

The URI for the grammar is specified by the src parameter.

Note that some services may support builtin grammars that can be specified by URI.

If the weight parameter is present, it represents this grammar's weight relative to the other grammars.

If the weight parameter is not present, the default value of 1.0 is used.

- addFromString method

- This method appends a grammar to the grammars array based on text.

The content of the grammar is specified by the string parameter.

This content should be encoded into a data: URI when the SpeechGrammar object is created.

If the weight parameter is present, it represents this grammar's weight relative to the other grammars.

If the weight parameter is not present, the default value of 1.0 is used.
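The bookkeeping described for SpeechGrammarList (insertion order, a default weight of 1.0, string grammars encoded as data: URIs) can be modeled in a few lines. `GrammarListModel` below is a hypothetical stand-in for reasoning about these rules, not a user agent implementation. Two further assumptions: the media type used for string grammars is SRGS XML, since this specification does not fix a grammar format, and `item` returns null for out-of-range indexes, as the other list interfaces here do.

```javascript
class GrammarListModel {
  #grammars = [];

  get length() {
    return this.#grammars.length;
  }

  item(index) {
    return this.#grammars[index] ?? null;
  }

  addFromURI(src, weight = 1.0) {
    // Grammars keep the order in which they were added.
    this.#grammars.push({ src, weight });
  }

  addFromString(string, weight = 1.0) {
    // String content is encoded into a data: URI when the grammar is created.
    // The media type is an assumption; no grammar format is mandated here.
    const src = "data:application/srgs+xml," + encodeURIComponent(string);
    this.#grammars.push({ src, weight });
  }
}
```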

5.2 The SpeechSynthesis Interface

The SpeechSynthesis interface is the scripted web API for controlling a text-to-speech output.

IDL

interface SpeechSynthesis {
  readonly attribute boolean pending;
  readonly attribute boolean speaking;
  readonly attribute boolean paused;

  void speak(SpeechSynthesisUtterance utterance);
  void cancel();
  void pause();
  void resume();
  SpeechSynthesisVoiceList getVoices();
};

[NoInterfaceObject]
interface SpeechSynthesisGetter {
  readonly attribute SpeechSynthesis speechSynthesis;
};

Window implements SpeechSynthesisGetter;

[Constructor,
 Constructor(DOMString text)]
interface SpeechSynthesisUtterance : EventTarget {
  attribute DOMString text;
  attribute DOMString lang;
  attribute DOMString voiceURI;
  attribute float volume;
  attribute float rate;
  attribute float pitch;

  attribute EventHandler onstart;
  attribute EventHandler onend;
  attribute EventHandler onerror;
  attribute EventHandler onpause;
  attribute EventHandler onresume;
  attribute EventHandler onmark;
  attribute EventHandler onboundary;
};

interface SpeechSynthesisEvent : Event {
  readonly attribute unsigned long charIndex;
  readonly attribute float elapsedTime;
  readonly attribute DOMString name;
};

interface SpeechSynthesisVoice {
  readonly attribute DOMString voiceURI;
  readonly attribute DOMString name;
  readonly attribute DOMString lang;
  readonly attribute boolean localService;
  readonly attribute boolean default;
};

interface SpeechSynthesisVoiceList {
  readonly attribute unsigned long length;
  getter SpeechSynthesisVoice item(in unsigned long index);
};

5.2.1 SpeechSynthesis Attributes

- pending attribute

- This attribute is true if the queue for the global SpeechSynthesis instance contains any utterances which have not started speaking.

- speaking attribute

- This attribute is true if an utterance is being spoken.

Specifically, this is true if an utterance has begun being spoken and has not completed being spoken.

This is independent of whether the global SpeechSynthesis instance is in the paused state.

- paused attribute

- This attribute is true when the global SpeechSynthesis instance is in the paused state.

This state is independent of whether anything is in the queue.

The default state of the global SpeechSynthesis instance for a new window is the non-paused state.

5.2.2 SpeechSynthesis Methods

- speak method

- This method appends the SpeechSynthesisUtterance object to the end of the queue for the global SpeechSynthesis instance.

It does not change the paused state of the SpeechSynthesis instance.

If the SpeechSynthesis instance is paused, it remains paused.

If it is not paused and no other utterances are in the queue, then this utterance is spoken immediately; otherwise, it is queued to begin speaking after the other utterances in the queue have been spoken.

If changes are made to the SpeechSynthesisUtterance object after calling this method and prior to the corresponding end or error event, it is not defined whether those changes will affect what is spoken, and those changes may cause an error to be returned.

- cancel method

- This method removes all utterances from the queue.

If an utterance is being spoken, speaking ceases immediately.

This method does not change the paused state of the global SpeechSynthesis instance.

- pause method

- This method puts the global SpeechSynthesis instance into the paused state.

If an utterance was being spoken, it pauses mid-utterance.

(If called when the SpeechSynthesis instance was already in the paused state, it does nothing.)

- resume method

- This method puts the global SpeechSynthesis instance into the non-paused state.

If an utterance was speaking, it continues speaking the utterance at the point at which it was paused, else it begins speaking the next utterance in the queue (if any).

(If called when the SpeechSynthesis instance was already in the non-paused state, it does nothing.)

- getVoices method

- This method returns the available voices.

It is user agent dependent which voices are available.
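The interaction of pending, speaking and paused with the queue methods is easy to misread, so the sketch below models those rules directly. `SynthesisQueueModel` is hypothetical and only illustrates the semantics described in the attribute and method definitions above; actual speech is replaced by an explicit `finishCurrent()` call that marks the current utterance as completed.

```javascript
class SynthesisQueueModel {
  queue = [];      // utterances not yet completed; queue[0] may be speaking
  current = null;  // utterance that has begun and not completed
  paused = false;  // a new window starts in the non-paused state

  // True while an utterance has begun and not completed, even when paused.
  get speaking() { return this.current !== null; }

  // True if the queue holds utterances that have not started speaking.
  get pending() { return this.queue.length > (this.current === null ? 0 : 1); }

  speak(utterance) {
    this.queue.push(utterance); // does not change the paused state
    this.#advance();
  }

  cancel() {
    this.queue = [];
    this.current = null;        // speaking ceases; paused state unchanged
  }

  pause() { this.paused = true; }

  resume() {
    this.paused = false;
    this.#advance();            // continue the current one or start the next
  }

  finishCurrent() {
    if (this.current === null) return;
    this.queue.shift();
    this.current = null;
    this.#advance();
  }

  #advance() {
    if (!this.paused && this.current === null && this.queue.length > 0) {
      this.current = this.queue[0];
    }
  }
}
```

Note the case the resume event definition calls out: an utterance queued while paused is started (not resumed) by resume(), so it would receive a start event rather than a resume event.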

5.2.3 SpeechSynthesisUtterance Attributes

- text attribute

- This attribute specifies the text to be synthesized and spoken for this utterance.

This may be either plain text or a complete, well-formed SSML document. [SSML]

For speech synthesis engines that do not support SSML, or only support certain tags, the user agent or speech engine must strip away the tags they do not support and speak the text.

There may be a maximum length for the text; for example, it may be limited to 32,767 characters.

- lang attribute

- This attribute specifies the language of the speech synthesis for the utterance, using a valid BCP 47 language tag. [BCP47]

If unset, it remains unset when read from script, but defaults to the lang of the HTML document root element and associated hierarchy.

This default value is computed and used when the request opens a connection to the speech synthesis service.

- voiceURI attribute

- The voiceURI attribute specifies the speech synthesis voice and the location of the speech synthesis service that the web application wishes to use.

If this attribute is unset at the time of the speak method call, then the user agent must use the user agent default speech synthesis service.

Note that the voiceURI is a generic URI and can thus point to local services either through use of a URN with meaning to the user agent or by specifying a URL that the user agent recognizes as a local service.

Additionally, the user agent default can be local or remote and can incorporate end user choices via interfaces provided by the user agent such as browser configuration parameters.

- volume attribute

- This attribute specifies the speaking volume for the utterance.

It ranges between 0 and 1 inclusive, with 0 being the lowest volume and 1 the highest volume, with a default of 1.

If SSML is used, this value will be overridden by prosody tags in the markup.

- rate attribute

- This attribute specifies the speaking rate for the utterance.

It is relative to the default rate for this voice.

1 is the default rate supported by the speech synthesis engine or specific voice (which should correspond to a normal speaking rate).

2 is twice as fast, and 0.5 is half as fast.

Values below 0.1 or above 10 are strictly disallowed. Speech synthesis engines or specific voices may constrain the minimum and maximum rates further; for example, a particular voice may not actually speak faster than 3 times normal even if you specify a value larger than 3.

If SSML is used, this value will be overridden by prosody tags in the markup.

- pitch attribute

- This attribute specifies the speaking pitch for the utterance.

It ranges between 0 and 2 inclusive, with 0 being the lowest pitch and 2 the highest pitch.

1 corresponds to the default pitch of the speech synthesis engine or specific voice.

Speech synthesis engines or voices may constrain the minimum and maximum pitches further.

If SSML is used, this value will be overridden by prosody tags in the markup.
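A page can keep its settings inside the ranges given above (volume 0 to 1, rate 0.1 to 10, pitch 0 to 2) and split long text before calling speak. The sketch below does both. Clamping is a page-side choice, since the specification only disallows out-of-range rates; the 32,767-character limit is just the example limit mentioned for the text attribute; and chunking is only safe for plain text, because splitting an SSML document would break its markup. `prepareUtterances`, `chunkText` and `clamp` are hypothetical helpers.

```javascript
const MAX_TEXT_LENGTH = 32767; // example limit from the text attribute

function clamp(value, min, max) {
  return Math.min(max, Math.max(min, value));
}

// Splits plain text into chunks of at most maxLength characters, preferring
// to break at the last space within the limit. Joining the chunks restores
// the original text.
function chunkText(text, maxLength = MAX_TEXT_LENGTH) {
  const chunks = [];
  let rest = text;
  while (rest.length > maxLength) {
    let cut = rest.lastIndexOf(" ", maxLength);
    if (cut <= 0) cut = maxLength;
    chunks.push(rest.slice(0, cut));
    rest = rest.slice(cut);
  }
  if (rest.length > 0) chunks.push(rest);
  return chunks;
}

function prepareUtterances(text, { volume = 1, rate = 1, pitch = 1 } = {}, maxLength = MAX_TEXT_LENGTH) {
  const settings = {
    volume: clamp(volume, 0, 1),
    rate: clamp(rate, 0.1, 10),
    pitch: clamp(pitch, 0, 2),
  };
  return chunkText(text, maxLength).map((chunk) => ({ text: chunk, ...settings }));
}

// Browser usage:
// for (const opts of prepareUtterances(longText, { rate: 1.2 })) {
//   speechSynthesis.speak(Object.assign(new SpeechSynthesisUtterance(), opts));
// }
```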

5.2.4 SpeechSynthesisUtterance Events

Each of these events must use the SpeechSynthesisEvent interface.

- start event

- Fired when this utterance has begun to be spoken.

- end event

- Fired when this utterance has completed being spoken.

If this event fires, the error event must not be fired for this utterance.

- error event

- Fired if there was an error that prevented successful speaking of this utterance.

If this event fires, the end event must not be fired for this utterance.

- pause event

- Fired when and if this utterance is paused mid-utterance.

- resume event

- Fired when and if this utterance is resumed after being paused mid-utterance.

Adding the utterance to the queue while the global SpeechSynthesis instance is in the paused state, and then calling the resume method, does not cause the resume event to be fired; in this case, the utterance's start event will be fired when the utterance starts.

- mark event

- Fired when the spoken utterance reaches a named "mark" tag in SSML. [SSML]

The user agent must fire this event if the speech synthesis engine provides the event.

- boundary event

- Fired when the spoken utterance reaches a word or sentence boundary.

The user agent must fire this event if the speech synthesis engine provides the event.
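The event list above sets several ordering constraints. End and error are mutually exclusive. Queuing an utterance while paused and then resuming produces start rather than resume. These rules can be checked against a recorded sequence of event types. The sketch below is one interpretation, not normative text. It additionally assumes that only error may precede start and that no event follows end or error. The function name `checkUtteranceEvents` is hypothetical.

```javascript
// Hypothetical checker for one utterance's sequence of event types.
// Returns null if no rule is violated, otherwise a description.
// Assumed (not normative): only "error" may precede "start", and
// nothing may follow "end" or "error".
function checkUtteranceEvents(types) {
  let started = false;
  let paused = false;
  let finished = false;
  for (const type of types) {
    if (finished) return `"${type}" fired after the utterance finished`;
    switch (type) {
      case "start":
        if (started) return "start fired twice";
        started = true;
        break;
      case "error":
        // An error may prevent speaking entirely, so it is allowed before start.
        finished = true;
        break;
      case "end":
        if (!started) return "end fired before start";
        finished = true;
        break;
      case "pause":
        if (!started || paused) return "pause fired while not speaking";
        paused = true;
        break;
      case "resume":
        // Covers the queued-while-paused case: no resume without a prior pause.
        if (!paused) return "resume without a preceding pause";
        paused = false;
        break;
      case "mark":
      case "boundary":
        if (!started) return `${type} fired before start`;
        break;
      default:
        return `unknown event "${type}"`;
    }
  }
  return null;
}
```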

5.2.5 SpeechSynthesisEvent Attributes

- charIndex attribute

- This attribute indicates the zero-based character index into the original utterance string that most closely approximates the current speaking position of the speech engine.

No guarantee is given as to where charIndex will be with respect to word boundaries (such as at the end of the previous word or the beginning of the next word), only that all text before charIndex has already been spoken, and all text after charIndex has not yet been spoken.

The user agent must return this value if the speech synthesis engine supports it, otherwise the user agent must return undefined.

- elapsedTime attribute

- This attribute indicates the time, in seconds, at which this event was triggered, relative to when this utterance began to be spoken.

The user agent must return this value if the speech synthesis engine supports it or the user agent can otherwise determine it, otherwise the user agent must return undefined.

- name attribute

- For mark events, this attribute indicates the name of the marker, as defined in SSML as the name attribute of a mark element. [SSML]

For boundary events, this attribute indicates the type of boundary that caused the event: "word" or "sentence".

For all other events, this value should return undefined.
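The sketch below shows how a page might use charIndex, elapsedTime and name together, for example to log progress and highlight the prefix that has already been spoken. Several things here are assumptions. Plain objects stand in for SpeechSynthesisEvent instances, and `summarizeSynthesisEvent` is a hypothetical name. The sketch also treats charIndex as a UTF-16 code-unit offset, so it can be passed to `String.prototype.slice`.

```javascript
// Hypothetical helper: summarize a SpeechSynthesisEvent-like object.
// charIndex, elapsedTime and name may each be undefined.
function summarizeSynthesisEvent(text, type, event) {
  const summary = { type, label: type, spoken: null };

  if (type === "mark") {
    summary.label = `mark "${event.name}"`; // name of the SSML mark element
  } else if (type === "boundary") {
    summary.label = `${event.name} boundary`; // "word" or "sentence"
  }

  if (event.elapsedTime !== undefined) {
    summary.label += ` at ${event.elapsedTime.toFixed(2)} s`;
  }

  if (event.charIndex !== undefined) {
    // All text before charIndex has been spoken; none after it has.
    const i = Math.min(Math.max(0, event.charIndex), text.length);
    summary.spoken = text.slice(0, i);
  }
  return summary;
}
```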

5.2.6 SpeechSynthesisVoice

- voiceURI attribute

- The voiceURI attribute specifies the speech synthesis voice and the location of the speech synthesis service for this voice.

Note that the voiceURI is a generic URI and can thus point to local or remote services, as described in the SpeechSynthesisUtterance voiceURI attribute.

- name attribute

- This attribute is a human-readable name that represents the voice.

There is no guarantee that all names returned are unique.

- lang attribute

- This attribute is a BCP 47 language tag indicating the language of the voice. [BCP47]

- localService attribute

- This attribute is true for voices supplied by a local speech synthesizer, and is false for voices supplied by a remote speech synthesizer service.

(This may be useful because remote services may imply additional latency, bandwidth or cost, whereas local voices may imply lower quality; however, there is no guarantee that any of these implications are true.)

- default attribute

- This attribute is true for at most one voice per language.

There may be a different default for each language.

It is user agent dependent how default voices are determined.
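The function below sketches how the lang, localService and default attributes might be combined when choosing a voice. It prefers the default voice for a language and can optionally restrict the choice to local voices. `pickVoice` and its `preferLocal` option are hypothetical. Voices are compared as objects rather than by name, since names are not guaranteed to be unique. Tags are compared case-insensitively as exact matches only, so there is no fallback from "en-US" to "en".

```javascript
// Hypothetical voice chooser over SpeechSynthesisVoice-like objects.
function pickVoice(voices, lang, { preferLocal = false } = {}) {
  const wanted = lang.toLowerCase();
  let candidates = voices.filter((v) => v.lang.toLowerCase() === wanted);
  if (preferLocal) {
    const local = candidates.filter((v) => v.localService);
    if (local.length > 0) candidates = local;
  }
  // At most one voice per language is the default, so find() suffices.
  return candidates.find((v) => v.default) || candidates[0] || null;
}
```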

5.2.7 SpeechSynthesisVoiceList

The SpeechSynthesisVoiceList object holds a collection of SpeechSynthesisVoice objects. This structure has the following attributes.

- length attribute

- The length attribute indicates how many voices are represented in the item array.

- item getter

- The item getter returns the SpeechSynthesisVoice at the given index into the array of voices.

If index is greater than or equal to length, this returns null.

The user agent must ensure that the length attribute is set to the number of elements in the array.
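The length and item semantics above can be modeled in plain JavaScript, for instance to stub a voice list in tests. `SimpleVoiceList` is a hypothetical class, not part of this API. It models only the item() method, not bracket indexing such as `list[0]`. It also returns null for negative or non-integer indices, which the text above does not address.

```javascript
// Hypothetical stand-in for SpeechSynthesisVoiceList.
class SimpleVoiceList {
  #voices;

  constructor(voices) {
    // Copy so that length always matches the stored array.
    this.#voices = Array.from(voices);
  }

  get length() {
    return this.#voices.length;
  }

  item(index) {
    // Out-of-range (including index >= length) returns null.
    return Number.isInteger(index) && index >= 0 && index < this.#voices.length
      ? this.#voices[index]
      : null;
  }
}
```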

6 Examples

This section is non-normative.

6.1 Speech Recognition Examples

Using speech recognition to fill an input field and perform a web search.

Example 1

<script type="text/javascript">
  var recognition = new SpeechRecognition();
  recognition.onresult = function(event) {
    if (event.results.length > 0) {
      q.value = event.results[0][0].transcript;
      q.form.submit();
    }
  };
</script>

<form action="http://www.example.com/search">
  <input type="search" id="q" name="q" size=60>
  <input type="button" value="Click to Speak" onclick="recognition.start()">
</form>

Using speech recognition to fill an options list with alternative speech results.

Example 2

<script type="text/javascript">
  var recognition = new SpeechRecognition();
  recognition.maxAlternatives = 10;
  recognition.onresult = function(event) {
    if (event.results.length > 0) {
      var result = event.results[0];
      for (var i = 0; i < result.length; ++i) {
        var text = result[i].transcript;
        select.options[i] = new Option(text, text);
      }
    }
  };

  function start() {
    select.options.length = 0;
    recognition.start();
  }
</script>

<select id="select"></select>
<button onclick="start()">Click to Speak</button>

Using continuous speech recognition to fill a textarea.

Example 3

<textarea id="textarea" rows=10 cols=80></textarea>
<button id="button" onclick="toggleStartStop()"></button>

<script type="text/javascript">
  var recognizing;
  var recognition = new SpeechRecognition();
  recognition.continuous = true;
  reset();
  recognition.onend = reset;

  recognition.onresult = function (event) {
    // Results before event.resultIndex were already handled by earlier events.
    for (var i = event.resultIndex; i < event.results.length; ++i) {
      if (event.results[i].final) {
        textarea.value += event.results[i][0].transcript;
      }
    }
  };

  function reset() {
    recognizing = false;
    button.innerHTML = "Click to Speak";
  }

  function toggleStartStop() {
    if (recognizing) {
      recognition.stop();
      reset();
    } else {
      recognition.start();
      recognizing = true;
      button.innerHTML = "Click to Stop";
    }
  }
</script>
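The accumulation step of the continuous-dictation example can be isolated as a pure function, so it can be exercised outside a browser. The sketch assumes a plain-object stand-in for event.results: an array of results, each an array of alternatives carrying a `final` flag. `appendFinalTranscripts` is a hypothetical name. Starting the loop at the event's resultIndex, rather than at 0, is what keeps final results delivered by earlier events from being appended twice.

```javascript
// Hypothetical pure version of the onresult handler's accumulation step.
// results: array of results; each result is an array of alternatives
// ({ transcript }) with a boolean `final` property (assumed shape).
function appendFinalTranscripts(existingText, results, resultIndex) {
  let text = existingText;
  for (let i = resultIndex; i < results.length; ++i) {
    if (results[i].final) {
      // Use the top alternative, as the example does.
      text += results[i][0].transcript;
    }
  }
  return text;
}
```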

Using continuous speech recognition, showing final results in black and interim results in grey.

Example 4

<button id="button" onclick="toggleStartStop()"></button>
<div style="border:dotted;padding:10px">
  <span id="final_span"></span>
  <span id="interim_span" style="color:grey"></span>
</div>

<script type="text/javascript">
  var recognizing;
  var recognition = new SpeechRecognition();
  recognition.continuous = true;
  recognition.interimResults = true;
  reset();
  recognition.onend = reset;

  recognition.onresult = function (event) {
    // Rebuild both strings from all results on every event.
    var final = "";
    var interim = "";
    for (var i = 0; i < event.results.length; ++i) {
      if (event.results[i].final) {
        final += event.results[i][0].transcript;
      } else {
        interim += event.results[i][0].transcript;
      }
    }
    final_span.innerHTML = final;
    interim_span.innerHTML = interim;
  };

  function reset() {
    recognizing = false;
    button.innerHTML = "Click to Speak";
  }

  function toggleStartStop() {
    if (recognizing) {
      recognition.stop();
      reset();
    } else {
      recognition.start();
      recognizing = true;
      button.innerHTML = "Click to Stop";
      final_span.innerHTML = "";
      interim_span.innerHTML = "";
    }
  }
</script>

6.2 Speech Synthesis Examples

Spoken text.

Example 1

<script type="text/javascript">
  speechSynthesis.speak(new SpeechSynthesisUtterance('Hello World'));
</script>

Spoken text with attributes and events.

Example 2

<script type="text/javascript">
  var u = new SpeechSynthesisUtterance();
  u.text = 'Hello World';
  u.lang = 'en-US';
  u.rate = 1.2;
  u.onend = function(event) { alert('Finished in ' + event.elapsedTime + ' seconds.'); };
  speechSynthesis.speak(u);
</script>

Acknowledgments

Peter Beverloo, Google, Inc.

Bjorn Bringert, Google, Inc.

Gerardo Capiel, Benetech

Jerry Carter

Nagesh Kharidi, Openstream, Inc.

Dominic Mazzoni, Google, Inc.

Olli Pettay, Mozilla Foundation

Charles Pritchard

Satish Sampath, Google, Inc.

Adam Sobieski, Phoster, Inc.

Raj Tumuluri, Openstream, Inc.

Also, the members of the HTML Speech Incubator Group, and the corresponding Final Report [1], created the basis for this specification.

References

- [BCP47]

- Tags for Identifying Languages, A. Phillips, et al. September 2009.

Internet BCP 47.

URL: http://www.ietf.org/rfc/bcp/bcp47.txt

- [EMMA]

- EMMA: Extensible MultiModal Annotation markup language, Michael Johnston, Editor.

World Wide Web Consortium, 10 February 2009.

URL: http://www.w3.org/TR/emma/

- [RFC2119]

- Key words for use in RFCs to Indicate Requirement Levels, S. Bradner. March 1997.

Internet RFC 2119.

URL: http://www.ietf.org/rfc/rfc2119.txt

- [SSML]

- Speech Synthesis Markup Language (SSML), Daniel C. Burnett, et al., Editors.

World Wide Web Consortium, 7 September 2004.

URL: http://www.w3.org/TR/speech-synthesis/

- [WEBIDL]

- Web IDL, Cameron McCormack, Editor.

World Wide Web Consortium, 19 April 2012.

URL: http://www.w3.org/TR/WebIDL/

- [1]

- HTML Speech Incubator Group Final Report, Michael Bodell, et al., Editors.

World Wide Web Consortium, 6 December 2011.

URL: http://www.w3.org/2005/Incubator/htmlspeech/XGR-htmlspeech/

- [2]

- SpeechRecognitionAlternative.interpretation when interpretation can't be provided thread on public-speech-api@w3.org, World Wide Web Consortium Speech API Community Group mailing list.

URL: http://lists.w3.org/Archives/Public/public-speech-api/2012Sep/0044.html

- [3]

- Default value of SpeechRecognition.grammars thread on public-speech-api@w3.org, World Wide Web Consortium Speech API Community Group mailing list.

URL: http://lists.w3.org/Archives/Public/public-speech-api/2012Jun/0179.html

- [4]

- Confidence property thread on public-speech-api@w3.org, World Wide Web Consortium Speech API Community Group mailing list.

URL: http://lists.w3.org/Archives/Public/public-speech-api/2012Jun/0143.html

- [5]

- Interacting with WebRTC, the Web Audio API and other external sources thread on public-speech-api@w3.org, World Wide Web Consortium Speech API Community Group mailing list.

URL: http://lists.w3.org/Archives/Public/public-speech-api/2012Sep/0072.html